Curing My Personal Ignorance, One Day at a Time

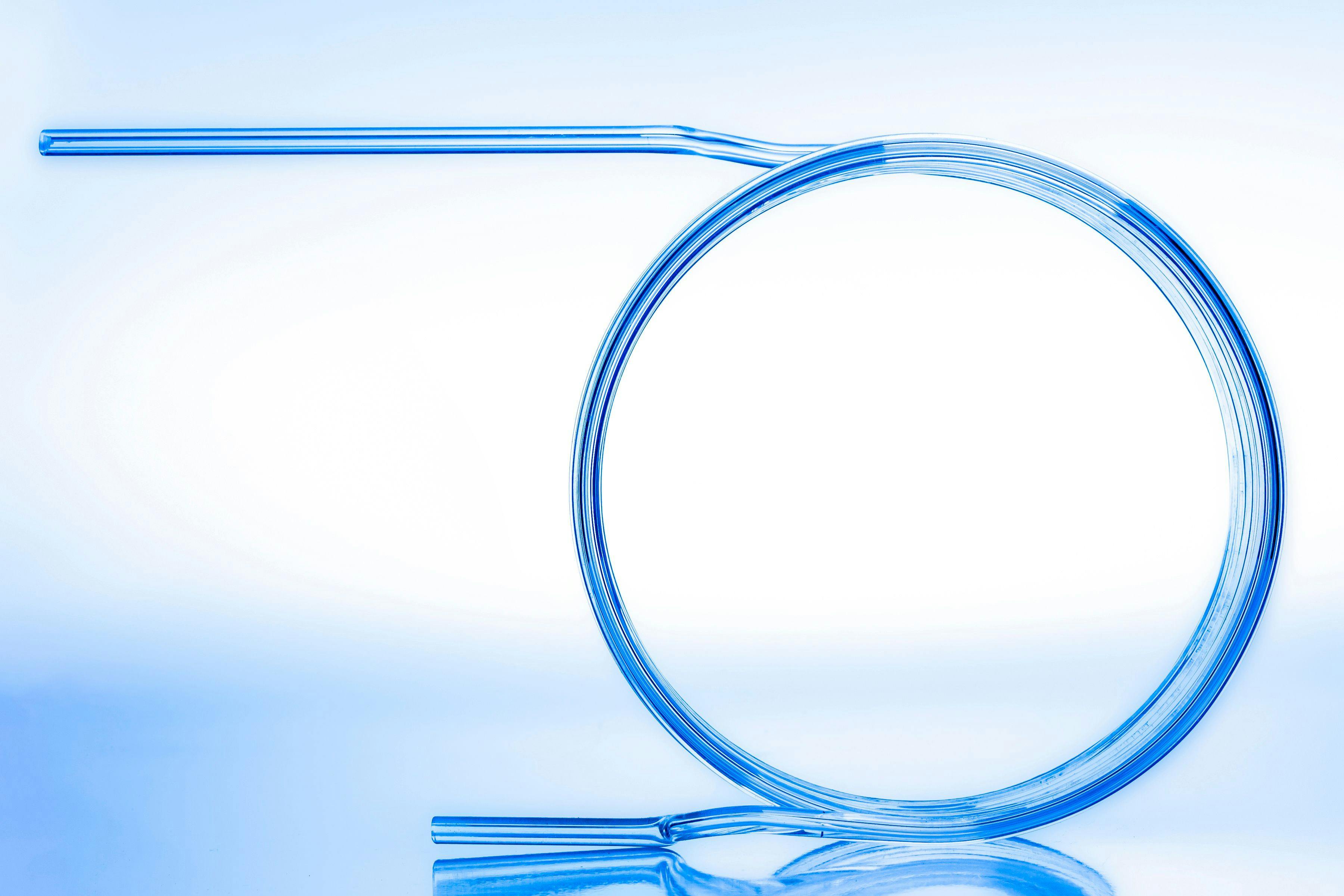

Separation science within microfluidics has already begun to make a significant impact across a number of fields, but microfluidic devices are often embedded within a larger system, their importance hidden or minimized.

The tiny length scales of microfluidics enable phenomena that have no equivalent on the benchtop. For example, very large electric fields and field gradients can be induced that are impossible on the macroscale. When resolution depends on the magnitude of these quantities, it can skyrocket. And if the details of a technique naturally lend themselves to multistep separations or enhanced detector function, the impact of the improved resolution is multiplied.

I began to wonder, “By how much?” Can I estimate how much better the separations can be, and how much better the attached mass spectrometry (MS), cryo-electron microscopy (cryoEM), spectrophotometry, or nuclear magnetic resonance (NMR) might work? What is the true “impact”? If I want to answer such a question, what are my metrics? What do I put on the x- and y-axes when I go to plot it? “Impact” didn’t seem to work well as an analytic unit of measure.

I can estimate peak capacity (the number of separate pieces of information that can be gathered) from the resolution and the maximum and minimum elution times or spatial positions. Microfluidics can provide ten to a thousand times better peak capacity on a single axis. But how can you understand the impact of such a thing? Cue my ignorance of entire fields of science.
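As a rough illustration of that estimate, the sketch below uses the common constant-peak-width approximation: if peaks have standard deviation σ and neighbors are separated at resolution Rs, each peak occupies about 4σ·Rs of the axis, so n ≈ 1 + (t_max − t_min)/(4σ·Rs). The function name and all numbers are hypothetical, chosen only to show how narrower peaks in the same window multiply capacity; the same expression applies to a spatial axis if positions replace times.

```python
def peak_capacity(t_min: float, t_max: float, sigma: float, resolution: float = 1.0) -> float:
    """Approximate single-axis peak capacity, assuming a constant peak width.

    Each peak is taken to occupy 4 * sigma * resolution of the axis
    (unit resolution corresponds to peaks 4 sigma apart). The axis may be
    time or position, as long as t_min, t_max, and sigma share units.
    """
    if t_max <= t_min or sigma <= 0 or resolution <= 0:
        raise ValueError("need t_max > t_min and positive sigma and resolution")
    return 1.0 + (t_max - t_min) / (4.0 * sigma * resolution)


# Hypothetical: the same 10-minute window with benchtop-like vs. much narrower peaks.
benchtop = peak_capacity(t_min=1.0, t_max=11.0, sigma=0.05)        # sigma in minutes
microfluidic = peak_capacity(t_min=1.0, t_max=11.0, sigma=0.0005)  # assumed 100x narrower
print(f"benchtop ~ {benchtop:.0f}, microfluidic ~ {microfluidic:.0f}, "
      f"ratio ~ {microfluidic / benchtop:.0f}x")
```

Because capacity scales inversely with peak width, a hundredfold narrower peak gives roughly a hundredfold more capacity on that one axis.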

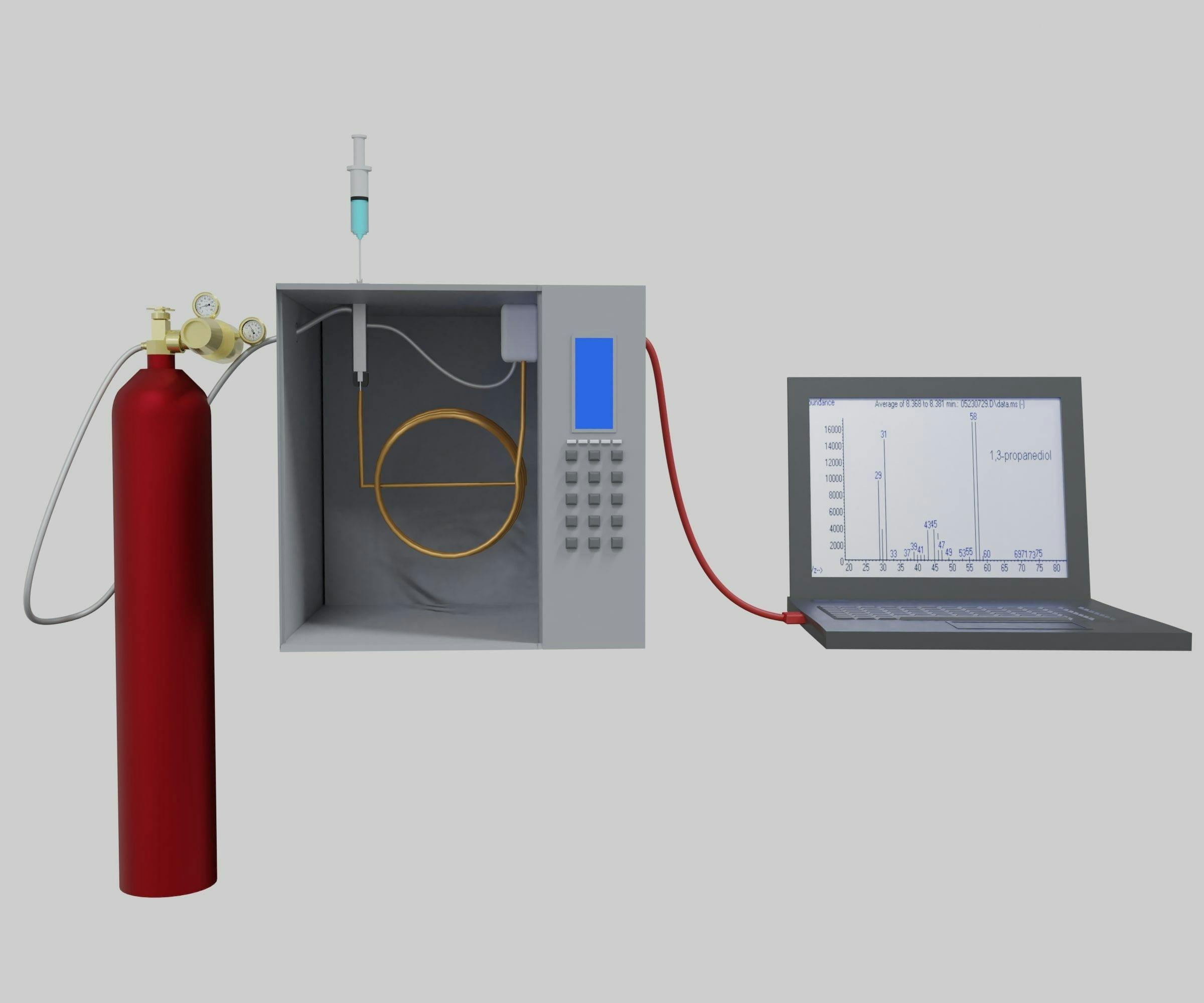

Like most things in my career, someone else has been thinking about this stuff for a long time, and I just didn’t know about it (and, of course, they did not use any words I would search for, or understand, initially). Some lucky wandering through old gas chromatography (GC) and MS literature from the 1960s and 1970s, along with a friendship with a mathematician, was the key to a new world for me: information theory.

They have been at this a long time; the original driver was (as I understand it) to estimate the amount of information that could be pushed through the transatlantic cable. To understand the impact of an analytical technique, I needed to be looking to boats, cables, oceans, and electronics? Obvious, right?

Buried within their analysis was a formula for the smallest “size” (bit) of information compared to the total width of that information, which in our world is peak capacity. Furthermore, the amplitude range can be similarly quantified for “information content” or bits. Adapted to separations science, this can give the number of available “bits” of information from that system. Cue more ignorance.
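A minimal counting sketch of that idea, under assumptions the text does not spell out: if each of n resolvable elements can independently take one of S distinguishable amplitude levels, the system can express S^n distinct outcomes, which is n·log2(S) bits. Estimating S as the amplitude range divided by the smallest meaningful difference (evenly spaced linear steps) is a simplification for illustration; the function names and numbers are hypothetical.

```python
import math


def amplitude_levels(c_min: float, c_max: float, smallest_difference: float) -> int:
    """Distinguishable amplitude steps, assuming evenly spaced linear steps."""
    if c_max <= c_min or smallest_difference <= 0:
        raise ValueError("need c_max > c_min and a positive smallest_difference")
    return int((c_max - c_min) // smallest_difference) + 1


def available_bits(peak_capacity: float, levels: int) -> float:
    """Bits available when each resolvable element carries one of `levels` amplitudes."""
    if levels < 1:
        raise ValueError("need at least one amplitude level")
    return peak_capacity * math.log2(levels)


# Hypothetical axis: 500 resolvable peaks, signal range 1 to 1024 units, 1-unit steps.
S = amplitude_levels(c_min=1.0, c_max=1024.0, smallest_difference=1.0)
print(S, available_bits(500, S))  # 1024 5000.0
```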

Turns out spectroscopists challenged with similar problems attacked them with concepts consistent with Shannon’s equation. Kaiser wrote two articles in 1970 (Anal. Chem. 42, 24A–41A, 26A–59A) that explain these concepts as applied to identifying and quantifying all of the elements in lunar rock samples, and that define the “informing power” of an overall technique (in available bits) and the “informing volume” of that technique as applied to a particular problem. Importantly, they also define the volume of information needed from the sample or problem posed.
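Kaiser’s informing power is commonly quoted in the integral form below (worth checking against the 1970 articles themselves): R(ν) is the resolving power, S(ν) is the number of distinguishable amplitude steps, and ν runs over the working range from ν_a to ν_b; the base-2 logarithm gives bits. Holding R and S constant collapses the integral to n·log2 S, where n = R ln(ν_b/ν_a) is the number of resolvable elements, the spectroscopic counterpart of peak capacity.

```latex
\begin{aligned}
P_{\mathrm{inf}} &= \int_{\nu_a}^{\nu_b} R(\nu)\,\log_2 S(\nu)\,\frac{d\nu}{\nu} \\
&= R\,\log_2 S \int_{\nu_a}^{\nu_b} \frac{d\nu}{\nu}
 = R\,\ln\!\left(\frac{\nu_b}{\nu_a}\right)\log_2 S
 = n\,\log_2 S
 \qquad (R,\ S\ \text{constant})
\end{aligned}
```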

Now I have the tools to estimate and compare the informing power of a separations science technique in terms of the available information in bits. I also have a construct to estimate the volume of the information needed to attack a particular problem.

On this second point is an interesting question we are playing with: How much information is contained in blood? It sounds ludicrous to ask such a question. But with the strategies enumerated by information theory, it can be considered. Think of cells, small bioparticles, proteins, peptides, small molecules, and organic and inorganic ions; set maximum/minimum concentrations and the smallest clinically relevant or biologically important difference in concentration, and you have it. It’s a huge number that we are still refining, but for argument’s sake, let’s set it at 10²² bits. Is it feasible to create analytical techniques to match this information volume? When you start running through the numbers and the math, the simple answer is yes, but the keys are microfluidics, the multiplicative factors of having multiple axes, and the amplification from information-rich detection schemes. When we are multiplying large numbers, the exponents grow quickly. Behind this outrageous claim (I just said you can get 10²² bits of information from blood; didn’t notice, did you?) is a strict requirement: the various axes of separation and detection must be compatible in time, space, configuration, and concentration.
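To see why stacking axes grows the numbers so quickly, here is a sketch under the idealized product rule: if the separation axes and the detector are fully orthogonal and compatible (the condition the paragraph flags), the total number of resolvable elements is the product of the per-axis values, and bits follow as that product times log2 S. Working in log10 turns the product into a sum of exponents. The axis names, per-axis capacities, and the 16-bit amplitude range are hypothetical placeholders and are not meant to reproduce the 10²²-bit estimate.

```python
import math


def log10_total_capacity(axes: dict[str, float]) -> float:
    """log10 of the product of per-axis resolvable elements (idealized product rule).

    Assumes every axis is fully orthogonal to and compatible with the others;
    real systems lose some of this to correlation and undersampling.
    """
    return sum(math.log10(n) for n in axes.values())


def log10_bits(axes: dict[str, float], levels: int) -> float:
    """log10 of (total resolvable elements * log2(levels)) bits."""
    return log10_total_capacity(axes) + math.log10(math.log2(levels))


# Hypothetical axes, added one at a time to show the exponents adding up.
system: dict[str, float] = {}
for name, n in [("separation_1", 1e3), ("separation_2", 1e3), ("detector", 1e4)]:
    system[name] = n
    # 2**16 = assumed number of distinguishable amplitude steps per element
    print(f"{name:>12}: ~10^{log10_bits(system, levels=2**16):.1f} bits")
```

Each added axis contributes its own exponent to the total, which is why a few compatible axes plus an information-rich detector move the count by many orders of magnitude.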

We are currently writing an academic paper in this space. Although we are off a bit in detail here and there, the numbers are so large that it won’t change the conclusion. When separations science is fully exploited and coupled with information-rich detectors, the amount of information available will challenge data storage and computational systems. The bottleneck won’t be the analytics anymore.

On to my next ignorance.

Mark A. Hayes is a Professor at the School of Molecular Sciences at Arizona State University. Direct correspondence to: MHayes@asu.edu
