Using the Three Cs of Data Visualization as a Life Raft When You’re Drowning in Multidimensional Chromatography Peaks

Comprehensive two-dimensional gas chromatography (GC×GC) is increasingly used for untargeted metabolomics analysis, especially for volatile metabolites, and has enabled the rapid expansion of volatile metabolite catalogs for microbes, plants, animals, and humans. The ability to detect hundreds to thousands of volatile metabolites in a single sample also makes GC×GC a powerful tool for volatile biomarker discovery through the comparative analysis of sample groups (such as disease and control). However, turning chromatographic data into biological information is rarely straightforward, because issues such as missing data, sparse matrices, high sample group variance, and too few samples relative to the number of chemical features can confound statistical approaches for biomarker identification. Applying correlation, clustering, and color to exploit the brain’s ability to identify patterns in huge amounts of visual information can provide a life raft for a weary chromatographer who went from swimming in data to drowning in it.

The untargeted chemical analysis of samples with complex matrices, such as those commonly encountered in metabolomics, petroleomics, foodomics, or environmental analysis, can be an exciting exploration of uncharted waters. The goals of untargeted analyses are to explain the complete chemical profile of a sample or a system (represented by many samples), and along the way discover many true unknown compounds—or compounds that were previously undetected and unknown to exist in the system. Comprehensive two-dimensional gas chromatography (GC×GC) is an ideal analytical tool for the task because the added dimension of separation enables the detection of the true unknowns by separating them from the coeluted compounds that otherwise obscure these hidden chemical treasures (1). Pairing a GC×GC system with mass spectrometry (GC×GC–MS) or with high resolution mass spectrometry (GC×GC–HRMS) also enables the identification of the separated compounds, which is a siren song that entices curious chromatographers to wade into deep waters of chemical information. However, the delight of swimming in such rich data can quickly turn to dread. Because samples with complex matrices typically contain several hundred to several thousand detectable chemical features, the size of the data matrices that are generated from more than a few GC×GC–MS samples—or just a single sample analyzed by GC×GC–HRMS—can drown even skilled chromatographic explorers. Fortunately, data visualization methods serve as life rafts that can rescue weary scientists and save chemical expeditions.

Visualizing Untargeted Metabolomics Data: Beyond Principal Components Analysis

Untargeted GC×GC metabolomics analysis of volatile metabolites has enabled the rapid expansion of volatile metabolite catalogs for microbes, plants, animals, and humans (2). The ability to detect hundreds to thousands of volatile metabolites in a single sample also makes GC×GC a powerful tool for volatile biomarker discovery for applications, such as breath-based diagnostics, by making it possible to compare the volatilomes of disease and control groups. The crux of biomarker discovery studies is analyzing enough samples from disease cases and controls to capture the variance in each group. Because of the large amount of natural variance in biospecimens, a well-powered study usually requires many dozens to hundreds of specimens, increasing the risk of drowning in data. Even with large numbers of samples, GC×GC biomarker studies still usually have too few samples relative to the number of chemical features, and they can have other problems, such as missing or sparse data, that can confound statistical approaches for biomarker identification. Therefore, data visualization is often our first entry point into uncovering potential analytical biases and identifying the defining chemical features of our sample groups.

The goal of data visualization in multisample analyses is to capture all the data in a single graphic. This enables our brain to process that information and identify the relationships between the samples and their chemical features. Several visualization approaches that leverage HRMS data, such as Kendrick mass defect and van Krevelen plots, have been developed and applied to great effect in geochemistry and petroleomics (3). However, the data in most untargeted GC×GC metabolomics studies are collected at unit mass resolution, so visualization is more reliant on chromatographic information, such as group-type analysis based on retention time patterns. The downside of this approach is that assigning the chemical classifications for the group analysis requires a lot of time and expertise, and therefore visualization approaches that utilize group-type analysis are not our first choice.

Principal components analysis (PCA) is often the first method we apply, especially to evaluate the overall structure of our data (2). PCA is broadly used to reduce high-dimensionality GC×GC data to two or three dimensions for display. However, because our pre-processed and aligned metabolomics data matrices often contain hundreds of variables, we typically find that a two-dimensional (2D) or three-dimensional (3D) PCA plot captures less than 20% of the total variance in the system. Although this limitation can be addressed by applying additional supervised or unsupervised data reduction methods before PCA, such as chemical group binning (4,5), such methods are time consuming. Therefore, PCA often does not get us to our data visualization goal of displaying all of the data in a single graphic, and we have begun supplementing PCA with analyses that utilize correlation and clustering, enhanced by color: the three Cs.

The Three Cs of Data Visualization

The three Cs of data visualization are correlation, clustering, and color.

Correlation

Correlation analyses provide a measure of similarity between two samples based on their chemical features, or between two features based on their measurements over multiple samples. The Pearson correlation and Euclidean distance are two commonly used measures of similarity or dissimilarity, but others, such as Spearman correlation, Manhattan distance, and Mahalanobis distance, may be better suited to your data. As an example, in a study to investigate the relationship of metabolism to immune response, we calculated the Pearson correlations within metabolites (M), within immune markers (I), and between the two variable types, using measurements of each of the variables across 16 samples (Figure 1). We identified 23 metabolites that were significantly correlated, positively or negatively, with 26 immune markers, and displayed the degree of correlation using color.

FIGURE 1: Statistically significant Pearson correlations of 26 immune markers (I) and 23 metabolites (M) calculated using 16 observations. Positive correlations are indicated by blue and negative correlations by red, with the shade of color indicating the strength of correlation. (a) No clustering has been applied. (b) Hierarchical clustering applied using average linkage. The matrix of correlations and p-values was calculated using the rcorr function in the Hmisc package version 4.5-0. Correlation and clustering graphics were generated using the corrplot function in the corrplot package version 0.84. All analyses performed in R version 4.0.4.
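To make the similarity measures named above more concrete, here is a minimal sketch in Python using only the standard library. It is for illustration only: the analyses in this article were performed in R, and the two metabolite profiles below are hypothetical values chosen to show how a monotonic but nonlinear relationship is scored differently by Pearson and Spearman correlation.

```python
import math

def pearson(x, y):
    """Pearson correlation: strength of the linear relationship (-1 to 1)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def ranks(values):
    """1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

def euclidean(x, y):
    """Euclidean (straight-line) distance; sensitive to magnitude."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def manhattan(x, y):
    """Manhattan distance: sum of absolute differences."""
    return sum(abs(a - b) for a, b in zip(x, y))

# Hypothetical relative abundances of two metabolites across six samples.
metabolite_a = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
metabolite_b = [1.0, 4.0, 9.0, 16.0, 25.0, 36.0]  # monotonic, but not linear

for name, measure in [("Pearson", pearson), ("Spearman", spearman),
                      ("Euclidean", euclidean), ("Manhattan", manhattan)]:
    print(f"{name}: {measure(metabolite_a, metabolite_b):.3f}")
```

Because Spearman correlation works on ranks, it scores this pair as perfectly correlated, whereas Pearson correlation does not. The two distances respond to the difference in magnitude rather than to the shared trend, which is one reason the choice of measure can change what clusters together.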

Clustering

Clustering is where the magic happens, and clustering methods build on correlation data. When you use clustering (linking samples based on their correlation), broader patterns of relatedness within your system emerge. In Figure 1a, no clustering has been applied to the metabolite and immune marker correlations (the variables are ordered in the graphic as they were listed in the data matrix). Though it is possible to evaluate the pairwise correlations between two variables in this format, systemic correlations become visible once clustering is applied (Figure 1b). There are a lot of clustering options to try, but I recommend starting with agglomerative hierarchical clustering analysis (HCA) using the default settings in your data analysis program of preference.

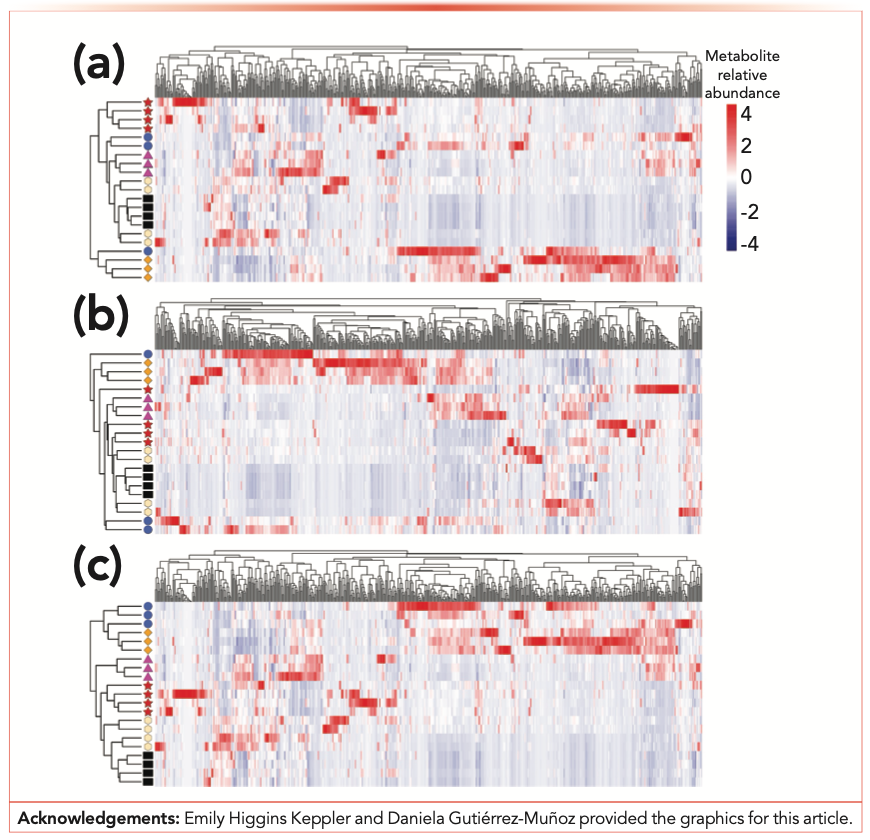

Once you are ready to explore, you can try altering the linkages, using options such as complete, single (minimum), or average. In Figure 2, you can see examples of how changing the combination of similarity measure and linkage alters the clustering in interesting ways. In this data set, we are performing cluster analysis of both the samples, depicted in rows, and the volatile metabolites, depicted in columns. In Figure 2a, we have used the default R pheatmap clustering method, and in Figures 2b and 2c we have applied alternative similarity measures, linkage methods, or both. In Figure 2c, you can see how the combination of similarity and linkage methods generated nice clustering of the replicate sample types in the rows. Additionally, combining the sample clustering with the volatile metabolites clustering and heatmap data enables the identification of sets of volatile metabolites that distinguish sample classes.

FIGURE 2: Hierarchical clustering analysis of 21 metabolomics samples, in rows with sample type indicated by colored shapes, and 561 volatile metabolites, in columns, using the pheatmap package version 1.0.12 and R version 4.0.1. Volatile metabolite relative abundances are represented in a heatmap. (a) Default row and column clustering method, which uses Euclidean distance and complete linkage. (b) Euclidean distances for rows and columns, with average linkage. (c) Pearson correlation with complete linkage for rows, Euclidean distance with complete linkage for columns.
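The interplay between similarity measure and linkage can be sketched with a deliberately naive agglomerative clustering routine in Python (standard library only). This is not how pheatmap or any R package implements clustering; the function names and the four tiny sample profiles are hypothetical and exist only to show that the same data can group differently under Euclidean distance and under a Pearson-based distance.

```python
import math

def euclidean(x, y):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def pearson_distance(x, y):
    """1 - Pearson r: profiles with the same shape have distance 0.
    Assumes neither profile is constant (nonzero variance)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return 1.0 - cov / math.sqrt(var_x * var_y)

# How the distance between two clusters is summarized from the
# pairwise distances between their members.
LINKAGES = {
    "complete": max,                       # farthest pair
    "single": min,                         # closest pair ("minimum")
    "average": lambda d: sum(d) / len(d),  # mean over all pairs
}

def hierarchical_clustering(profiles, distance=euclidean, linkage="complete"):
    """Naive agglomerative clustering.

    Returns the merge history as (members_a, members_b, distance) tuples,
    where members are row indices into `profiles`.
    """
    combine = LINKAGES[linkage]
    clusters = [[i] for i in range(len(profiles))]
    merges = []
    while len(clusters) > 1:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                pairwise = [distance(profiles[i], profiles[j])
                            for i in clusters[a] for j in clusters[b]]
                d = combine(pairwise)
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((clusters[a], clusters[b], d))
        merged = clusters[a] + clusters[b]
        clusters = [c for k, c in enumerate(clusters) if k not in (a, b)]
        clusters.append(merged)
    return merges

# Hypothetical samples (rows) measured on three metabolites (columns).
# Samples 0 and 1 share a profile shape; samples 0 and 2 share a magnitude.
samples = [
    [1.0, 2.0, 3.0],
    [10.0, 20.0, 30.0],
    [3.0, 2.0, 1.0],
    [30.0, 20.0, 10.0],
]

for label, dist in [("Euclidean", euclidean), ("Pearson", pearson_distance)]:
    history = hierarchical_clustering(samples, distance=dist, linkage="complete")
    print(label, [(a, b) for a, b, _ in history])
```

With these made-up numbers, Euclidean distance pairs samples of similar overall abundance, while the Pearson-based distance pairs samples whose metabolite profiles have the same shape. Neither answer is wrong; which one is meaningful depends on the question being asked of the data.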

Color

Color matters. This fact seems self-evident, but the default colors of a data analysis or data graphing program are often not the optimal choices for the clustering patterns that your specific data are generating. After you have settled on the best clustering option, try alternate color combinations to enable the viewer to interpret the data at a glance, and be open to going back to grayscale, which sometimes is the best option. Finally, check your colored graphics for color-blindness readability and make sure that all of the key pieces of information that the colors are conveying are broadly accessible (6).
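As a rough illustration of why it is worth checking a color scheme, the following Python sketch maps correlation values onto a diverging blue-white-red scale like the one described for Figure 1, then estimates how each color would look in grayscale. The endpoint colors, function names, and the use of Rec. 601 luma weights are assumptions made for this example; the sketch does not simulate color vision deficiency, for which a dedicated tool such as those listed in reference 6 is a better choice.

```python
WHITE = (255, 255, 255)
BLUE = (33, 102, 172)   # illustrative endpoint for r = +1
RED = (178, 24, 43)     # illustrative endpoint for r = -1

def lerp_color(start, end, t):
    """Linearly interpolate between two RGB tuples, with t in [0, 1]."""
    return tuple(round(s + (e - s) * t) for s, e in zip(start, end))

def correlation_to_rgb(r):
    """Map a correlation in [-1, 1] to a diverging color scale.

    Positive values shade toward blue, negative values toward red,
    and values near zero stay close to white.
    """
    r = max(-1.0, min(1.0, r))
    return lerp_color(WHITE, BLUE if r >= 0 else RED, abs(r))

def to_hex(rgb):
    return "#{:02X}{:02X}{:02X}".format(*rgb)

def approximate_gray(rgb):
    """Rough grayscale value (0 to 255) using Rec. 601 luma weights."""
    red, green, blue = rgb
    return round(0.299 * red + 0.587 * green + 0.114 * blue)

for r in (-1.0, -0.5, 0.0, 0.5, 1.0):
    rgb = correlation_to_rgb(r)
    print(f"r = {r:+.1f}  {to_hex(rgb)}  gray = {approximate_gray(rgb)}")
```

If the two ends of a diverging scale land on similar gray values, the strength of a correlation may still be readable in a grayscale print, but its sign may not be. That is a cue to try different endpoint colors or to encode the sign in another way.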

Selecting the Best Life Raft for Your Chemical Expedition Occurs by Trial and Error

In the descriptions above, I referred to data analysis and visualization options that we commonly explore using R packages, such as pheatmap, factoextra, Hmisc, and corrplot, among others, but similar options exist in MATLAB, SAS, and other programs. The key message is that once you get the hang of using the default settings for correlation, clustering, and color, you can start exploring the other options built into the program; don’t expect one size to fit all of your data. You may find that Euclidean distance and average linkage provide beautiful hierarchical clustering for one study, but for another (ostensibly similar) data set those methods produce a mess, while Pearson correlation and complete linkage generate meaningful results. It takes some trial and error to find the right combination of the three Cs for each study. Whatever you settle on, make sure you report the correlation and clustering methods when you publish your data (7)!

References

(1) Z. Liu and J.B. Phillips, J. Chromatogr. Sci. 29, 227–231 (1991). DOI:10.1093/chromsci/29.6.227

(2) E.A. Higgins Keppler, C.L. Jenkins, T.J. Davis, and H.D. Bean, Trends Anal. Chem. 109, 275–286 (2018). DOI:10.1016/j.trac.2018.10.015

(3) S. Gutiérrez Sama, M. Farenc, C. Barrère-Mangote, R. Lobinski, C. Afonso, B. Bouyssière, and P. Giusti, Energy & Fuels 32, 4593–4605 (2018). DOI:10.1021/acs.energyfuels.7b03218

(4) A. Hahn, K. Whiteson, T.J. Davis, J. Phan, I. Sami, A. Koumbourlis, R. Freishtat, K. Crandall, and H.D. Bean, Front. Cell. Infect. Microbiol. 10, 174 (2020). DOI:10.3389/fcimb.2020.00174

(5) B.A. Weggler, B. Gruber, and F.L. Dorman, Anal. Chem. 91, 10949–10954 (2019). DOI:10.1021/acs.analchem.9b01750

(6) A. Duner, Three Tools to Help You Make Colorblind-Friendly Graphics (2016). https://knightlab.northwestern.edu/2016/07/18/three-tools-to-help-you-make-colorblind-friendly-graphics/

(7) H.D. Bean, The Analytical Scientist 82, 14–15 (2019).

Heather Bean is an Assistant Professor at the School of Life Sciences at Arizona State University, in Tempe, Arizona. Direct correspondence to: heather.d.bean@asu.edu.
