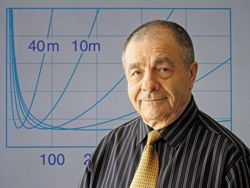

Constantly Evolving

Leon Blumberg has been at the cutting edge of chromatography since the late 1980s. The Column spoke with him about his contribution to the past, present, and future of chromatography.

Photo Credit: simon2579/Getty Images


- Interview by Lewis Botcherby

Q. You began your career as an electrical engineer. How did you come to work in the field of analytical chemistry and, more specifically, gas chromatography?

A: I joined the Avondale Division of Hewlett-Packard Co. (now Agilent Technologies) in 1977. HP Avondale designed and manufactured gas chromatography (GC) instrumentation. My initial responsibilities were to design the electronics and software for digitizing and analyzing chromatograms. From that grew the need for a better understanding of the chromatographic limits to separation performance and speed of analysis. I was also asked to determine how peak focusing by velocity gradients within a column affects column performance, which was a controversial issue at the time.

Q. Why was this?

A: It is a fascinating story, but first I would like to clarify that, in all of my answers that follow, I distinguish the peaks in chromatograms (whose widths are measured in units of time) from the analyte bands within a column (whose widths are measured in units of distance). In the late 1980s, a group of inventors approached HP with a proposal to substantially increase the speed of GC analysis by using dynamic focusing (negative temperature gradients moving from the column inlet to the outlet). An enormous increase in the speed of analysis without loss of resolution was expected (a 120 min PONA analysis would be reduced to a few minutes). The idea was that negative temperature gradients along the column create negative velocity gradients, causing the front of an analyte band migrating along the column to move more slowly than its tail. This compresses the bands, making them narrower in distance along the column, and thus presumably improves the separation-speed trade-offs. Ray Dandeneau, a co-inventor of fused silica capillary columns and R&D manager at the time, asked me to contact the inventors for a detailed exploration of the potential. After preliminary discussions, it was decided that HP would help the inventors construct the experiments, and I began, with help from Terry Berger, to search for theoretical answers. Soon I found that dynamic focusing was an old idea, proposed by the Russian scientist Zhukhovitskii1 in 1951, before the invention of conventional temperature-programmed GC.2 Since then, high expectations for dynamic focusing had periodically flared up and died down, with no definitive experimental improvement and no theoretical solutions. As with earlier efforts, our experiments did not show any definitive improvement. The inventors’ explanation was that the experiments were not good enough, and Terry and I continued the search for definitive theoretical answers.
In 1992, we published a theoretical paper3 on the fundamentals of the effects of velocity gradients. I was fortunate to have an encouraging discussion of the paper with the late Prof. Giddings during the 1992 International Conference on Chromatography in Aix-en-Provence, France. Later, I published a more general theory4,5 and then two theoretical papers6,7 demonstrating that, under ideal conditions, velocity gradients can only harm the separation-speed trade-offs in chromatography, although they can reduce the harm from some non-ideal conditions such as poor sample introduction. These general conclusions can be explained as follows. The same velocity gradients that compress the analyte bands (make them narrower in distance along the column) also reduce the distance between the bands and slow down their migration and elution. The latter two factors reduce the separation of the bands and broaden the peaks in chromatograms. Together, the three conflicting factors (band compression, reduced band separation, and slower elution) at best cancel each other out; at worst, they degrade the separation-speed trade-offs. After my involvement in the focusing study, GC theory became one of my main responsibilities at HP.
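The cancellation argument can be illustrated with a deliberately simplified toy calculation. It is not the published theory: it assumes, purely for illustration, that a hypothetical velocity gradient shrinks both the band width and the spacing between bands and slows the bands at the column outlet, all by the same factor. It then uses the standard conversion that a distance at the outlet, divided by the band velocity there, gives the corresponding time at the detector. All function names and numbers are hypothetical.

```python
from typing import Tuple


def to_time(distance_mm: float, band_velocity_mm_per_s: float) -> float:
    """Convert a distance at the column outlet into time at the detector."""
    return distance_mm / band_velocity_mm_per_s


def hypothetical_gradient(width_mm: float, spacing_mm: float, velocity_mm_per_s: float,
                          factor: float) -> Tuple[float, float, float]:
    """Toy model (assumption): the gradient shrinks band width and band spacing,
    and slows the bands, all by the same factor."""
    return width_mm / factor, spacing_mm / factor, velocity_mm_per_s / factor


# Hypothetical illustrative values, not experimental data
width, spacing, velocity = 2.0, 8.0, 10.0  # mm, mm, mm/s

peak_width = to_time(width, velocity)
peak_spacing = to_time(spacing, velocity)

w2, s2, v2 = hypothetical_gradient(width, spacing, velocity, factor=4.0)
focused_width = to_time(w2, v2)
focused_spacing = to_time(s2, v2)

# Bands are 4x narrower inside the column, yet peak width and spacing in time are unchanged
print(peak_width, focused_width)      # 0.2 0.2
print(peak_spacing, focused_spacing)  # 0.8 0.8
```

In this toy case the narrower bands inside the column buy nothing at the detector, which corresponds to the "at best cancel each other out" outcome; the model does not attempt to capture the cases in which the trade-offs get worse.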

Q. Could you briefly talk about the work you performed and what you helped develop while you were at HP?

A: My first job assignment was to develop an analog-to-digital converter (ADC) for the industry’s first stand-alone digital integrator, which was under development at the time. This was followed by the development of data-processing and presentation software for another integrator that the company introduced later, and then by defining the speed-related aspects of the next-generation high-speed GC instrument, the first in the industry with fully integrated electronic pressure and flow control. As part of that assignment, I co-invented an ADC for GC8 (the fastest at the time for the required resolution) and a GC method translation concept.9,10 Later, I developed GC method translation software that became a popular method development tool. I also co-invented the retention time locking (RTL) concept11,12,13, the basis for another useful software tool introduced by HP. The tool enabled an order-of-magnitude improvement in GC retention time reproducibility.

Q. You continued to work on the development of fast and multidimensional GC. What do you feel were some of your most important publications on these topics?

A: 1D GC (one-dimensional GC): I completed the publication of a four-part series on the theory of fast GC,14–17 and co-authored several papers on heating rate optimization in GC.18,19

GC×GC (comprehensive two-dimensional GC): In my first GC×GC lecture (25th International Symposium on Capillary Chromatography, Riva del Garda, Italy, 2002, published in 200320), I outlined what was, as far as I know, the first theory of GC×GC optimization, and demonstrated theoretically that the separation performance of GC×GC can potentially be orders of magnitude higher than that of 1D GC. However, I also demonstrated that, because of insufficiently sharp modulation, the GC×GC systems available at the time were no better than their 1D counterparts. This caused heated debates21,22 that lasted for many years and were summarized in Pat Sandra’s lecture23 at the 4th International GC×GC Symposium (Dalian, China, 2007), published in 200824 with several co-authors, including myself. Years later, better modulators became available, and the key problems of GC×GC configuration were fixed (all as I had been advocating for years). Several years ago, at the International GC×GC Symposium in Riva del Garda, Jack Cochran described the first GC×GC system known to me at the time that performed near its theoretical potential. The system evaluation was presented by Matthew Klee at the 11th International GC×GC Symposium (Riva del Garda, 2014) and published in a paper that I initiated and co-authored.25 I am also thankful to Luigi Mondello for inviting me to contribute a chapter on the theory and optimization of GC×GC coupled to mass spectrometry (MS) to his 2011 volume on the same topic.26

Q. In 2010 you published Temperature-Programmed Gas Chromatography, a very comprehensive book covering many aspects of GC theory.27 How did this book come about?

A: Although temperature programming is a key factor in column performance in GC, no books on this topic had been published since 1966.28 I had been thinking about writing such a book since the mid-1990s, and I began writing in 2003. Until the book was published,27 I did not work on much else. Unfortunately, I did not include everything I wanted in the book (performance metrics, optimization), but the time came to stop. I believe, however, that what is covered is covered reasonably well. In addition to good published reviews, several colleagues offered kind comments in person. Later, Colin Poole gave me an opportunity to publish a summary of the performance metrics and optimization in a theoretical chapter of the volume on GC that he edited.29

Q. Your most recent publication focused on optimizing the mixing rate in linear solvent strength gradient liquid chromatography (LC).30 Could you briefly explain what the mixing rate is and why it is important?

A: The mixing rate is the rate of temporal increase (increase with time) of the volume fraction of the stronger solvent in the LC mobile phase. The mixer is the device that changes the solvent composition. From that perspective, gradient LC can be viewed as LC with programmed solvent mixing. It is well known that the analysis time of this technique can be substantially shorter than that of isocratic LC (where the mobile phase composition does not change during the analysis). The term mixing rate, which was recently introduced by Gert Desmet and myself,30 is a synonym for the more widely known terms gradient slope and time steepness of the gradient. Why the new term? Solvent strength programming causes two types of solvent strength change within an LC column: the temporal change and the spatial one (the change along the column). It is important to recognize the difference between these two types of change because they affect column performance differently (see below). Using the term rate for the temporal changes, and the term gradient only for the spatial ones, helps to reinforce the distinction and to evaluate the effects of each phenomenon separately. Using this distinction, I was able to demonstrate theoretically31 that the sharpness of chromatographic peaks, and the resulting speed improvement of gradient LC over isocratic conditions, comes from the temporal changes, while the effects of the gradients are practically insignificant under typical conditions. For clarity of terminology, it is important that the term mixing rate, which represents only the temporal changes, does not include words such as “gradient” and “slope”, which have spatial implications. From a broader perspective, gradient LC is a special case of chromatography with dynamic focusing, and all conclusions regarding the general case6,7 apply to LC.
Interestingly, in line with the predictions of the general theory of dynamic focusing,6,7 gradient band compression typically broadens the peaks slightly30 rather than focusing (narrowing) them, as is frequently expected.
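As a minimal sketch of the definition above, the mixing rate of a single linear ramp is the change in the volume fraction of the stronger solvent divided by the ramp duration; multiplying it by the void time expresses the same rate per void time. The function names and the example program (5% to 95% over 30 min, void time 1.5 min) are hypothetical and chosen only for illustration.

```python
def mixing_rate(phi_start: float, phi_end: float, ramp_time_min: float) -> float:
    """Mixing rate of a single linear ramp: change in volume fraction of the
    stronger solvent per minute (a purely temporal quantity)."""
    if ramp_time_min <= 0:
        raise ValueError("ramp time must be positive")
    return (phi_end - phi_start) / ramp_time_min


def mixing_rate_per_void_time(rate_per_min: float, void_time_min: float) -> float:
    """The same rate expressed as change in volume fraction per void time."""
    if void_time_min <= 0:
        raise ValueError("void time must be positive")
    return rate_per_min * void_time_min


# Hypothetical program: 5% -> 95% stronger solvent over 30 min, void time 1.5 min
r = mixing_rate(0.05, 0.95, 30.0)         # about 0.03 per min (3%/min)
r_t0 = mixing_rate_per_void_time(r, 1.5)  # about 0.045 per void time (4.5%)
```

Note that nothing in this calculation refers to position along the column, which is the point of reserving "rate" for the temporal change and "gradient" for the spatial one.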

Q. Any optimization involves trade-offs between parameters, and how they are balanced depends on the optimization goal. What exactly was the optimization goal in this research?

A: The optimal mixing rate in LC (as well as the optimal heating rate in GC) is defined19,29,32 as the one at which a required separation performance is obtained in the shortest time.
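The definition of the optimum can be expressed as a simple selection rule: among the rates that reach the required separation performance, pick the one with the shortest analysis time. The sketch below assumes a hypothetical list of already-evaluated candidate rates; how each candidate's separation metric and time are obtained (from theory or experiment) is outside its scope, and the names and numbers are illustrative only.

```python
from typing import NamedTuple, Optional, Sequence


class Candidate(NamedTuple):
    rate: float        # mixing rate (LC) or heating rate (GC) under evaluation
    separation: float  # chosen separation-performance metric, e.g. peak capacity
    time_min: float    # analysis time obtained at this rate


def optimal_rate(candidates: Sequence[Candidate],
                 required_separation: float) -> Optional[float]:
    """Return the rate that meets the required separation in the shortest time,
    or None if no candidate meets the requirement."""
    feasible = [c for c in candidates if c.separation >= required_separation]
    if not feasible:
        return None
    return min(feasible, key=lambda c: c.time_min).rate


# Hypothetical candidates, not measured data
candidates = [
    Candidate(rate=0.02, separation=300.0, time_min=45.0),
    Candidate(rate=0.05, separation=250.0, time_min=20.0),
    Candidate(rate=0.10, separation=180.0, time_min=12.0),
]
best = optimal_rate(candidates, required_separation=200.0)  # 0.05
```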

Q. What practical recommendations have come out of this research?

A: In essence, the optimal mixing rate (OMR) depends mainly on the void time and on the molecular weights of the sample components. What’s nice is that the OMR does not depend directly on column dimensions, solid support structure, or solvent type, but only indirectly, through their effect on the void time. This makes it possible to reduce the optimization results to specific numerical recommendations. Thus, for small-molecule samples, a solvent strength increase of about 5% per time increment equal to the void time is optimal or close to optimal for all LC analyses that can be approximated by the linear solvent strength (LSS) model. For proteins, the per-void-time increment should be about 10 times smaller. This is similar to the optimal heating rate of 10 °C per void time in GC.19,29
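Because the recommendations are stated per void time, converting them into instrument settings is a single division by the void time. The sketch below encodes the approximate values quoted above (about 5% volume fraction per void time for small molecules, about ten times less for proteins, about 10 °C per void time in GC); it assumes "5%" means a 0.05 increase in the volume fraction of the stronger solvent, and the constant and function names are hypothetical.

```python
# Approximate per-void-time recommendations quoted in the interview
SMALL_MOLECULE_OMR_PER_T0 = 0.05                     # ~5% volume fraction per void time
PROTEIN_OMR_PER_T0 = SMALL_MOLECULE_OMR_PER_T0 / 10  # ~10x smaller for proteins
GC_HEATING_RATE_PER_T0 = 10.0                        # ~10 °C per void time


def recommended_mixing_rate(void_time_min: float, proteins: bool = False) -> float:
    """Approximate optimal mixing rate (volume fraction per minute) for LSS gradient LC."""
    if void_time_min <= 0:
        raise ValueError("void time must be positive")
    per_t0 = PROTEIN_OMR_PER_T0 if proteins else SMALL_MOLECULE_OMR_PER_T0
    return per_t0 / void_time_min


def recommended_heating_rate(void_time_min: float) -> float:
    """Approximate optimal GC heating rate (°C per minute)."""
    if void_time_min <= 0:
        raise ValueError("void time must be positive")
    return GC_HEATING_RATE_PER_T0 / void_time_min


# Example: a 2 min void time gives about 2.5%/min for small molecules, 0.25%/min for proteins
small = recommended_mixing_rate(2.0)
protein = recommended_mixing_rate(2.0, proteins=True)
gc_rate = recommended_heating_rate(1.0)  # about 10 °C/min for a 1 min void time
```

Since column dimensions and packing enter only through the void time, the same two lines of arithmetic apply regardless of column format.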

Q. What are you currently researching?

A: I am grateful to have the opportunity to work with Gert Desmet, a leading expert in the theory of gradient LC. We are both interested in optimizing the technique. A key to optimization is simple and transparent performance metrics. Together, we published a paper on the metrics of separation performance in gradient LC30 and another on the optimal mixing rate32 in a simple single-ramp solvent strength program. Currently, we are working on the optimization of more complex mixing programs.

Q. What do you regard as the most exciting areas of chromatography at the moment?

A: In my view, comprehensive multidimensional techniques are the most promising developments in column chromatography (LC, GC, etc.). GC×GC “is probably the most promising invention in GC since discovery of capillary columns.”24 The overall performance of a chromatographic analysis is a trade-off between three factors: separation performance, analysis time, and detection limit (DL). Here is an example from GC, with which I am more familiar: in 1D GC, each twofold increase in peak capacity without changing the DL costs an eightfold longer analysis time (a 1 h analysis becomes an 8 h analysis). On the other hand, adding a second dimension can increase the peak capacity by more than an order of magnitude without changing the analysis time or DL. Currently, the potential of GC×GC is not fully exploited. For example, peak deconvolution substantially increases the separation performance of both 1D GC and GC×GC. However, only deconvolution along the second dimension is currently used in GC×GC. Adding deconvolution along the first dimension of GC×GC can increase its overall separation performance several-fold.20,26 Unfortunately, this approach remains largely unknown.
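The twofold/eightfold example implies that, at a fixed DL, 1D GC analysis time grows roughly as the cube of the desired peak-capacity gain. The sketch below simply generalizes that arithmetic; the cubic exponent is inferred from the example in the answer rather than stated there as a general law, and the function name is hypothetical.

```python
def time_multiplier_1d(peak_capacity_gain: float) -> float:
    """Factor by which 1D GC analysis time grows for a given peak-capacity gain
    at fixed DL, assuming time scales with the cube of peak capacity."""
    if peak_capacity_gain <= 0:
        raise ValueError("gain must be positive")
    return peak_capacity_gain ** 3


print(time_multiplier_1d(2.0))        # 8.0  (a 1 h analysis becomes 8 h)
print(time_multiplier_1d(10.0))       # 1000.0
```

By the same arithmetic, a tenfold peak-capacity gain in 1D GC would cost roughly a thousandfold longer analysis, which is why a second dimension that adds an order of magnitude without lengthening the run is so attractive.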

Q. Will the evolution of mass spectrometry eventually lead to the extinction of chromatography?

A: I am not an expert in mass spectrometry (MS) or in multi-stage MS techniques. However, as I see it, there will always be a need to separate increasingly complex mixtures. Improvements in the separation-time-DL trade-offs in multidimensional chromatography and in multi-stage MS will always complement each other, but more separation power will always be needed. Can you imagine a day when there will be no practical need for further substantial improvement in computer speed or memory, or in the speed of the Internet? I think the same is true for the separation-time-DL performance of analytical tools.

References

- A.A. Zhukhovitskii, O.V. Zolotareva, V.A. Sokolov, and N.M. Turkel’taub, Doklady Akademii Nauk S.S.S.R. 77, 435–438 (1951).

- J. Griffiths, D. James, and C.S.G. Phillips, The Analyst 77, 897–904 (1952).

- L.M. Blumberg and T.A. Berger, J. Chromatogr. 596, 1–13 (1992).

- L.M. Blumberg, J. Chromatogr. 637, 119–128 (1993).

- L.M. Blumberg, J. High Resolut. Chromatogr. 16, 31–38 (1993).

- L.M. Blumberg, Anal. Chem. 64, 2459–2460 (1992).

- L.M. Blumberg, Chromatographia 39, 719–728 (1994).

- L.M. Blumberg, J. Bush, and R.P. Rhodes, U.S. patent 5,448,239, 1995.

- W.D. Snyder and L.M. Blumberg, U.S. patent 5,405,432, 1995.

- L.M. Blumberg and M.S. Klee, Anal. Chem. 70, 3828–3839 (1998).

- M.S. Klee, P.L. Wylie, B.D. Quimby, and L.M. Blumberg, U.S. patent 5,827,946, 1998.

- M.S. Klee, B.D. Quimby, and L.M. Blumberg, U.S. patent 5,987,959, 1999.

- L.M. Blumberg, B.D. Quimby, and M.S. Klee, U.S. patent 6,153,438, 2000.

- L.M. Blumberg, J. High Resolut. Chromatogr. 20, 597–604 (1997).

- L.M. Blumberg, J. High Resolut. Chromatogr. 20, 679–687 (1997).

- L.M. Blumberg, J. High Resolut. Chromatogr. 22, 403–413 (1999).

- L.M. Blumberg, J. High Resolut. Chromatogr. 22, 501–508 (1999).

- L.M. Blumberg and M.S. Klee, Anal. Chem. 72, 4080–4089 (2000).

- L.M. Blumberg and M.S. Klee, J. Microcolumn Sep. 12, 508–514 (2000).

- L.M. Blumberg, J. Chromatogr. A 985, 29–38 (2003).

- C.M. Harris, Anal. Chem. 74, 410A (2002).

- L.M. Blumberg, Anal. Chem. 74, 503A (2002).

- P. Sandra, F. David, M.S. Klee, and L.M. Blumberg, “Comparison of one-dimensional and comprehensive two-dimensional capillary GC separations,” Dalian, China, 4–7 June 2007 (CD-ROM).

- L.M. Blumberg, F. David, M.S. Klee, and P. Sandra, J. Chromatogr. A 1188, 2–16 (2008).

- M.S. Klee, J.W. Cochran, M. Merrick, and L.M. Blumberg, J. Chromatogr. A 1383, 151–159 (2015).

- L.M. Blumberg, in Comprehensive Chromatography in Combination with Mass Spectrometry, L. Mondello, Ed. (Wiley, Hoboken, NJ, USA, 2011), pp. 13–63.

- L.M. Blumberg, Temperature-Programmed Gas Chromatography (Wiley-VCH, Weinheim, Germany, 2010).

- W.E. Harris and H.W. Habgood, Programmed Temperature Gas Chromatography (John Wiley & Sons, Inc., New York, USA, 1966).

- L.M. Blumberg, in Gas Chromatography, C.F. Poole, Ed. (Elsevier, Amsterdam, 2012), pp. 19–78.

- L.M. Blumberg and G. Desmet, J. Chromatogr. A 1413, 9–21 (2015).

- L.M. Blumberg, Chromatographia 77, 189–197 (2014).

- L.M. Blumberg and G. Desmet, Anal. Chem. 88, 2281–2288 (2016).

Leon Blumberg graduated from the Leningrad Electrotechnical Institute (Leningrad, USSR; now St Petersburg, Russian Federation) in 1960 with a diploma in electrical engineering and went on to join a computer design company in Leningrad. In 1961–1965, Leon completed a full mathematics course for professional mathematicians at Leningrad University. He obtained a PhD equivalent in electrical engineering from the Leningrad Electrotechnical Institute of Telecommunications. In 1977, Leon immigrated to the US with his family, joining the Avondale Division of Hewlett-Packard Co. (now Agilent Technologies). Currently, through his consulting company Advachrom, Leon provides consulting services, mostly in GC×GC. His main scientific interest now is developing a unified theory of temperature-programmed GC and gradient LC.

E-mail: leon@advachrom.com
