An Overview of the Untargeted Analysis Using LC–MS (QTOF): Experimental Process and Design Considerations

Untargeted analysis using liquid chromatography with tandem mass spectrometry (LC–MS/MS) chemical profiling has become a valuable tool for providing insight into the variations found in a sample set. However, the large data sets it generates, which frequently contain 20,000 or more features, can be overwhelming. A sound experimental design is one of the most pivotal aspects of verifying statistical variance in a sample set. Therefore, care should be taken when an experimental design is developed and when statistical tools are used to perform data reduction and processing.

People have always wanted to expand their limits of knowledge. Regarding chemical analysis, the use of an untargeted approach with high-resolution accurate mass instrumentation can aid in the process of gaining a more comprehensive understanding of sample composition, compared to a targeted approach. Untargeted analysis is a technique that focuses on global chemical profiling. It is considered a hypothesis-generating or a discovery approach. However, this pursuit of expanded knowledge has some advantages and disadvantages relative to targeted analysis.

For a typical targeted approach to chemical analysis using liquid chromatography–mass spectrometry (LC–MS), the user would perform absolute quantitation using a triple quadrupole (TQ) or single quadrupole (SQ) instrument on a subset of analytes known to potentially be in the sample. The target list does not comprise everything that could be in the sample; it includes key compounds that are of interest to a specific industry. For example, the determination of pesticides in water resources is of high interest. For this process, a nominal mass or unit resolution mass instrument is acceptable, because the analyte of interest is preselected and standards are available to confirm retention times, the precursor ion, and, when using TQ, the product ions. The workflow is centered around verifying the presence of an analyte and calculating its concentration.

Alternatively, untargeted analysis is considered a more comprehensive approach. Less selective sample preparation techniques allow for a range of chemical compounds to be analyzed together. The goal is to broaden our understanding of a biological process, discover variations in a sample set, or simply gain a deeper understanding of a sample. For example, researchers have used untargeted analysis to explore the environmental impacts on bacterial growth through the analysis of the extracellular metabolite profiles (1). This technique has also been applied to beer to determine the key chemical components that define different styles (2). While an untargeted approach can capture the data needed to paint a more detailed picture of the sample set, it comes with some challenges. Untargeted analysis requires high-resolution accurate mass instrumentation such as a quadrupole time-of-flight (QTOF) mass spectrometer. Such instrumentation is more costly than instrumentation options with a lower resolving power. Additionally, the large data sets require extensive data processing that can take weeks to months, whereas targeted analysis data typically require only a few hours to process. Because of these challenges, a highly skilled scientist is required to operate the instrument and to collect and analyze the data. Moreover, programming skills are frequently required to write specialized in-house applications to analyze the data. Therefore, untargeted analysis is primarily limited to the research and development stage at this point. The workflow for untargeted analysis is centered around the experimental design, with only one independent variable modified between experimental groups so that statistically significant features can be easily associated with that variable.

When implementing untargeted analysis, statistical tools are typically used to measure variability within a sample set. Therefore, it is important to consider where variability can be introduced and to take proactive steps to avoid introducing unwanted variability while planning the experimental design and batch sequence. For example, when evaluating the environmental impacts on the extracellular metabolite profile of bacteria, the same strain of bacteria should be used throughout the study. Additionally, conditions such as the time point in the growth curve at which the bacteria are collected and the temperature of the growth environment should be consistent. Finally, the setup used to replicate the environmental conditions should be consistent. In other words, enough media should be prepared at one time for all replicates of each type of bacteria. Furthermore, enough mobile phase should be prepared for the entire batch sequence to eliminate variability between different preparations. These extra precautions help minimize the unwanted variability detected with statistical tools.

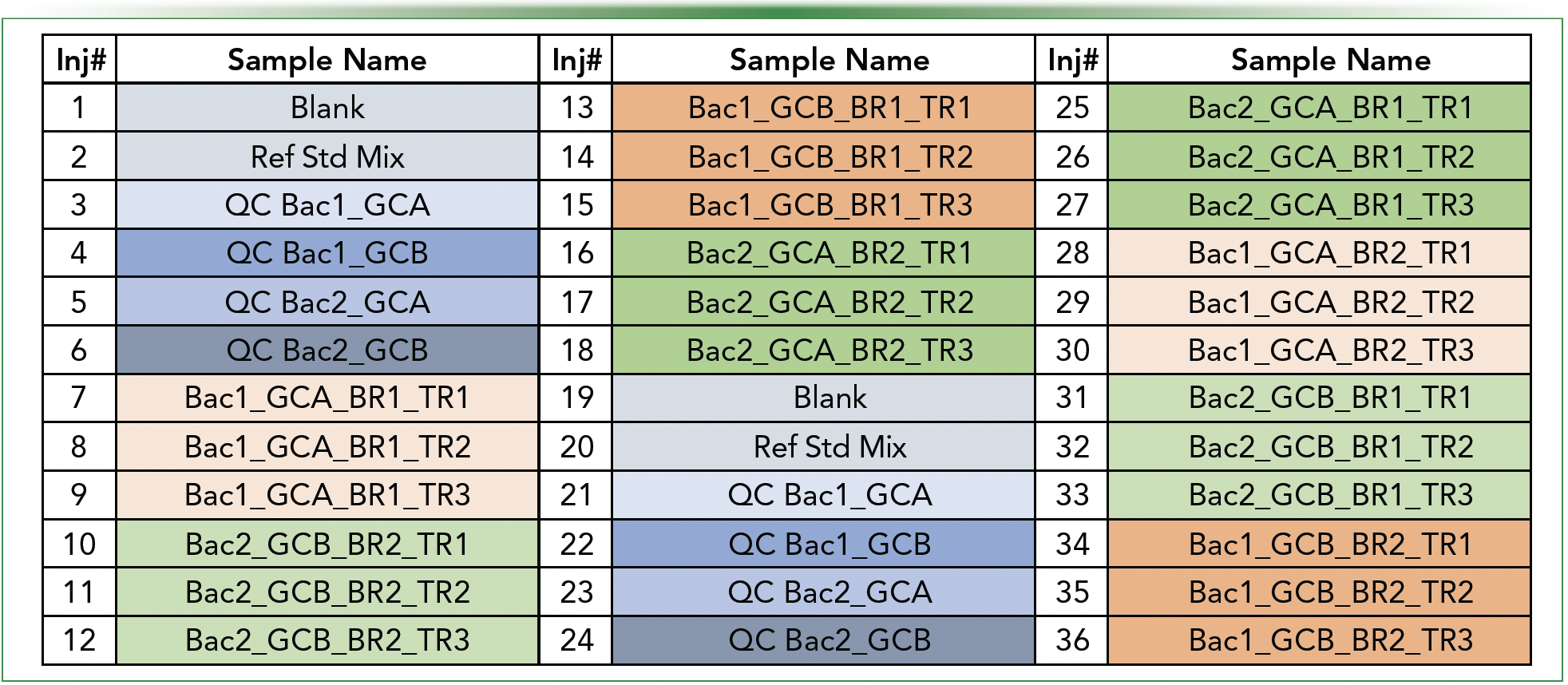

When the experiment is designed, replicates should be included for redundancy to ensure measured variations are reproducible. Biological replicates increase the robustness of the finding and show biological variation. Technical replicates (also known as analytical replicates) encompass the entire experimental procedure and involve repeat injections of the same extract. The combination of replicates provides confirmation that the measured variation is real. The use of quality controls is critical to monitor the performance and stability of the instrument. A quality control sample can further validate measured variation across experiments. Ideally, pooled samples (prepared from equal portions of each sample) are evaluated throughout the batch, as well as various reference standards that cover a wide m/z range to ensure mass accuracy for all observed ions (3). Finally, randomization in the batch sequence provides additional confirmation that the measured variation is credible (Table I). While minor variation will still potentially be measured in statistical tools such as principal component analysis (PCA), variation between sample sets should be significant and easily identifiable (Figure 1).

TABLE I: Batch for a hypothetical scenario evaluating two biological replicates (BR1 and BR2) of two bacteria (Bac1 [orange] and Bac2 [green]) in two different growth conditions (GCA and GCB) with three technical replicates (TR1, TR2, and TR3) for each BR. Each prepared sample vial is injected three times to give the technical replicates. QCs are prepared by mixing equal parts of each BR.
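A randomized sequence of this kind can be generated programmatically so that it is reproducible and documented. The following Python sketch builds the hypothetical scenario described in Table I (two bacteria, two growth conditions, two biological replicates, three technical injections each), shuffles the injections, and interleaves pooled QC injections. The function name `build_batch`, the sample naming pattern, the leading blank, and the spacing of one QC per six injections are illustrative assumptions, not values taken from Table I.

```python
import random


def build_batch(bacteria, conditions, n_bio, n_tech, qc_every=6, seed=0):
    """Return a randomized injection list with pooled QCs interleaved.

    qc_every and the leading blank are assumptions made for illustration.
    """
    rng = random.Random(seed)  # fixed seed so the sequence can be regenerated
    injections = [
        f"{bac}_{gc}_BR{br}_TR{tr}"
        for bac in bacteria
        for gc in conditions
        for br in range(1, n_bio + 1)
        for tr in range(1, n_tech + 1)
    ]
    rng.shuffle(injections)  # randomize run order across all groups

    batch = ["Blank", "QC"]  # start with a blank and a pooled QC
    for i, name in enumerate(injections, start=1):
        batch.append(name)
        if i % qc_every == 0:
            batch.append("QC")  # pooled QC at regular intervals
    if batch[-1] != "QC":
        batch.append("QC")  # always close the batch with a QC
    return batch


batch = build_batch(["Bac1", "Bac2"], ["GCA", "GCB"], n_bio=2, n_tech=3)
for position, name in enumerate(batch, start=1):
    print(position, name)
```

Recording the seed alongside the batch makes it possible to regenerate the exact run order later when reviewing drift in the QC injections.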

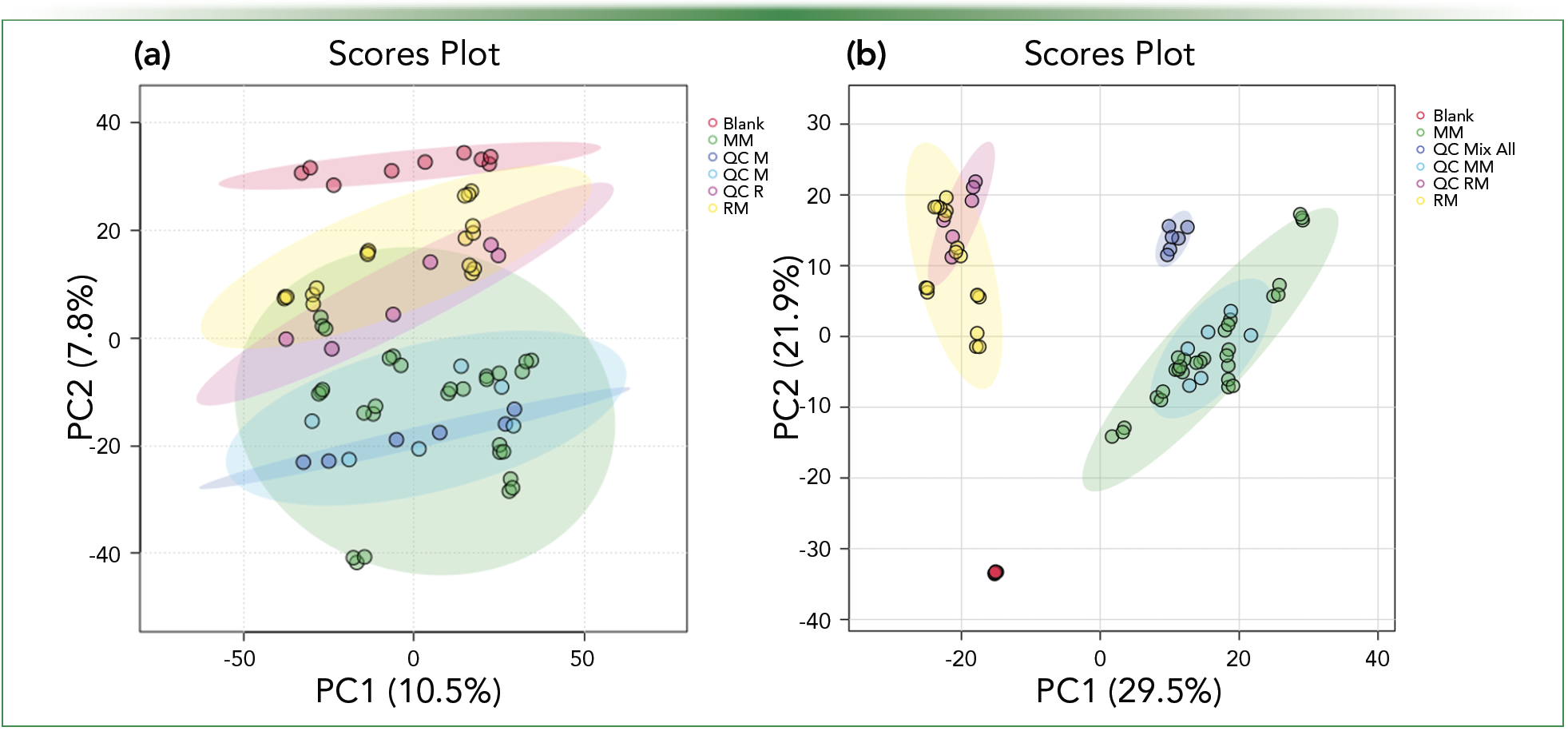

FIGURE 1: Variation between replicates and retention time (RT) setting impact on scores plot. Impacts of RT and m/z window tolerance on sample alignment and visualization. Note that (a) has an RT tolerance of 0.02 min vs. (b) of 0.05 min. In (b), statistical variation between samples is visible as well as variation between replicates.

After the experimental design has been implemented and the samples analyzed, the next focus becomes data analysis, which includes bucketing, data reduction, data alignment, statistical analysis, feature picking, and feature annotation. Open-source software, such as MS-DIAL (4), can be used to aid in the data processing stage. This type of software allows several data analysis steps to be completed at the same time.

The first step, bucketing, consists of slicing or grouping data from each sample into retention time (RT):m/z pairs and can be completed by setting a peak and mass width. Evaluating the m/z differences of the calibrant and reference solution to the exact mass can provide a foundation for the m/z slice width. Once data from each sample has been bucketed, the next steps are data reduction and data alignment based on MS1 results. Data reduction is continuous throughout the data analysis process, but it can begin by defining an acquisition time and m/z range to eliminate features that elute in the void volume or during column re-equilibration. Next, the data are aligned to evaluate the variation or statistical differences within the sample set. When aligning data, the window or range of the RT and m/z tolerance will directly impact the alignment of the samples (Figure 1). The variation measured in m/z and RT of the standard solution should be evaluated to verify that set tolerances are acceptable. Finally, further data reduction can be completed during the data alignment step by electing to remove features detected that are also found in the blanks and have a fold change under a prescribed limit.
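The effect of the alignment tolerances and the blank filter can be illustrated with a small Python sketch. It is a simplified greedy alignment, not the algorithm used by MS-DIAL or any other package, and the feature values, the 0.005 Da m/z tolerance, and the threefold blank threshold are hypothetical. The two RT tolerances (0.02 and 0.05 min) mirror those compared in Figure 1.

```python
from statistics import mean


def align(features, rt_tol, mz_tol):
    """Greedily group (sample, rt, mz, intensity) tuples into aligned features.

    Each group keeps the RT and m/z of its first member as the reference.
    """
    groups = []
    for sample, rt, mz, intensity in sorted(features, key=lambda f: (f[2], f[1])):
        for g in groups:
            within = abs(rt - g["rt"]) <= rt_tol and abs(mz - g["mz"]) <= mz_tol
            if within and sample not in g["members"]:
                g["members"][sample] = intensity
                break
        else:  # no existing group matched, so start a new aligned feature
            groups.append({"rt": rt, "mz": mz, "members": {sample: intensity}})
    return groups


def drop_blank_features(groups, blank="Blank", min_fold=3.0):
    """Keep features whose mean sample signal is at least min_fold times the blank."""
    kept = []
    for g in groups:
        samples = [v for k, v in g["members"].items() if k != blank]
        if not samples:
            continue  # detected only in the blank
        blank_signal = g["members"].get(blank)
        if not blank_signal or mean(samples) / blank_signal >= min_fold:
            kept.append(g)
    return kept


# Hypothetical picked peaks: (sample, RT in min, m/z, intensity)
features = [
    ("S1", 5.10, 301.1410, 9.0e4),
    ("S2", 5.13, 301.1412, 8.5e4),
    ("Blank", 5.11, 301.1411, 6.0e4),
    ("S1", 7.42, 455.2903, 4.0e4),
    ("S2", 7.43, 455.2905, 4.4e4),
]

tight = align(features, rt_tol=0.02, mz_tol=0.005)
loose = align(features, rt_tol=0.05, mz_tol=0.005)
kept = drop_blank_features(loose)
print(len(tight), len(loose), [g["mz"] for g in kept])
```

In this toy data set, the tighter RT window splits one compound into two aligned features because its RT shifts by 0.03 min between samples, which is the kind of behavior that evaluating the RT variation of the standard solution is meant to catch.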

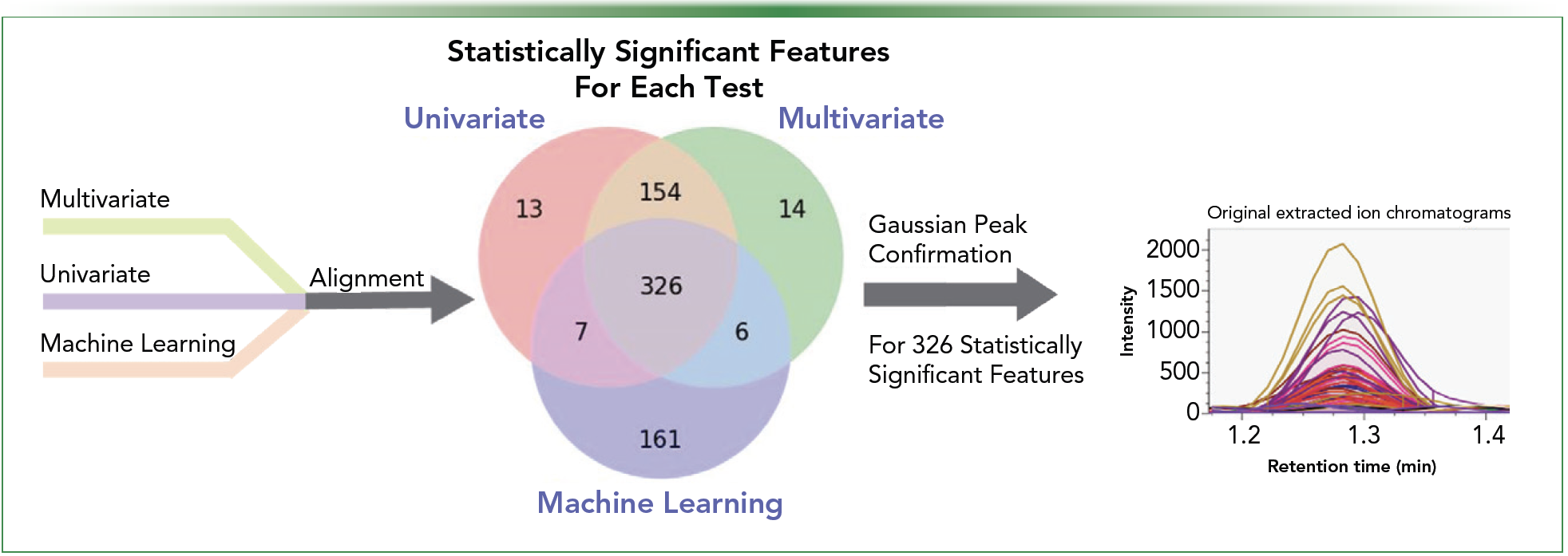

Once samples have been aligned, the goal is to determine differences between samples and highlight key features. A comparison between data from each sample is typically completed using statistical tools to visualize which characteristics are most influential in the differentiation of samples. Open-source software, such as MetaboAnalyst and MS-DIAL, can aid in this process (4). This process can further aid in data reduction by determining which features are significant enough to devote time to annotating. Focusing primarily on statistically significant features (p-value < 0.05) is beneficial in reducing extraneous data. It is critical that the statistical significance of features is determined using more than one statistical approach, such as univariate analysis, multivariate analysis, and machine learning (Figure 2). Each technique can potentially introduce a unique bias; therefore, the inclusion of multiple tools helps guard against overfitting the data (5,6). Once data reduction is complete and the most statistically significant features have been determined, each should be checked for a Gaussian chromatographic peak. A feature can be defined as a compound once a quality signal for that feature has been verified.

FIGURE 2: Feature reduction using statistical tools.
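As a minimal illustration of the univariate part of this screen, the Python sketch below computes an exact two-sided permutation p-value for the difference in mean peak area between two groups and keeps features with p < 0.05. The permutation test is a stand-in chosen because it needs only the standard library; it is not necessarily the test used in the cited studies, and the feature names and peak areas are hypothetical.

```python
from itertools import combinations
from statistics import mean


def permutation_p(group_a, group_b):
    """Exact two-sided permutation p-value for a difference in group means."""
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    total = sum(pooled)
    observed = abs(mean(group_a) - mean(group_b))
    hits = splits = 0
    # Enumerate every way of relabeling n_a of the pooled values as group A.
    for idx in combinations(range(len(pooled)), n_a):
        a_sum = sum(pooled[i] for i in idx)
        diff = abs(a_sum / n_a - (total - a_sum) / n_b)
        splits += 1
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            hits += 1
    return hits / splits


# Hypothetical peak areas (arbitrary units) for two features in two groups.
features = {
    "F1": ([10.1, 10.4, 9.8, 10.0, 10.3, 9.9], [12.0, 12.3, 11.8, 12.1, 11.9, 12.2]),
    "F2": ([5.0, 5.2, 4.9, 5.1, 5.3, 5.0], [5.1, 5.0, 5.2, 4.9, 5.3, 5.1]),
}

p_values = {name: permutation_p(a, b) for name, (a, b) in features.items()}
significant = [name for name, p in p_values.items() if p < 0.05]
print(p_values, significant)
```

With tens of thousands of features, a multiple-testing correction is commonly applied on top of a per-feature threshold, and the multivariate and machine learning approaches would be run alongside this univariate screen rather than replaced by it.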

The last (and possibly the most challenging) step of the process is annotation. Authors should clearly differentiate and report the level of identification rigor for all compounds reported. The Metabolomics Standards Initiative (MSI) has defined four levels of identification (7,8). Level 1 is the highest level, consisting of compounds identified using two or more orthogonal properties that are matched to a chemical standard analyzed in the same laboratory with the same analytical methods. Level 2 refers to putatively annotated compounds. For these, matching chemical standards are not required. Annotation is based upon physicochemical properties or spectral similarity with public or commercial spectral libraries. Putatively characterized compound classes are referred to as Level 3. In this group, compounds are classified based on characteristic physicochemical properties of a chemical class of compounds, or by spectral similarity to known compounds of a chemical class. Frequently, only the elemental formulas are known. Finally, the most common is Level 4: unknown compounds. These are “unique unknowns” differentiated based upon unique experimental spectral or chromatographic features (RT:m/z pair).
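When annotations are tracked in a script or spreadsheet export, the four levels can be recorded with a simple rule such as the Python sketch below. The `Evidence` fields and the `msi_level` function are hypothetical names, and the rule is a simplified reading of the level descriptions above rather than an official MSI implementation.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    """Hypothetical summary of the evidence collected for one feature."""
    standard_matches: int = 0    # orthogonal properties matched to an in-house standard
    library_match: bool = False  # spectral or physicochemical match, no standard run
    class_match: bool = False    # evidence only for a chemical class


def msi_level(evidence):
    """Assign an MSI identification level (1 = highest rigor, 4 = unknown)."""
    if evidence.standard_matches >= 2:
        return 1  # identified compound
    if evidence.library_match:
        return 2  # putatively annotated compound
    if evidence.class_match:
        return 3  # putatively characterized compound class
    return 4      # unknown, tracked only by its RT:m/z pair


levels = [
    msi_level(Evidence(standard_matches=2)),
    msi_level(Evidence(standard_matches=1, library_match=True)),
    msi_level(Evidence(class_match=True)),
    msi_level(Evidence()),
]
print(levels)
```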

While untargeted analysis is a time-consuming process that requires a highly skilled scientist, it can provide a wealth of new information and open the doors to new horizons. Untargeted analysis can be used to define features for the discrimination between samples or to identify a particular sample difference. For example, it can supply a broader range of metabolites for confirming food authenticity. With these features in hand, one can then devise a targeted chemical analysis through the development of multiple reaction monitoring (MRM) transitions for important features, thus making the desired determination routine. Despite the many hurdles and challenges that a scientist must overcome through this inductive approach, many nuggets of gold can be retrieved from the mountain of information.

References

(1) Zanella, D.; Liden, T.; York, J.; Franchina, F. A.; Focant, J. F.; Schug, K. A. Exploiting Targeted and Untargeted Approaches for the Analysis of Bacterial Metabolites under Altered Growth Conditions. Anal. Bioanal. Chem. 2021, 413 (21), 5321–5332. DOI: 10.1007/s00216-021-03505-2

(2) Anderson, H. E.; Liden, T.; Berger, B. K.; Zanella, D.; Manh, L. H.; Wang, S.; Schug, K. A. Profiling of Contemporary Beer Styles Using Liquid Chromatography Quadrupole Time-of-Flight Mass Spectrometry, Multivariate Analysis, and Machine Learning Techniques. Anal. Chim. Acta 2021, 1172, 338668. DOI: 10.1016/j.aca.2021.338668

(3) Broadhurst, D.; Goodacre, R.; Reinke, S. N.; Kuligowski, J.; Wilson, I. D.; Lewis, M. R.; Dunn, W. B. Guidelines and Considerations for the Use of System Suitability and Quality Control Samples in Mass Spectrometry Assays Applied in Untargeted Clinical Metabolomic Studies. Metabolomics 2018, 14, 72. DOI: 10.1007/s11306-018-1367-3

(4) Tsugawa, H.; Rai, A.; Saito, K.; Nakabayashi, R. Metabolomics and Complementary Techniques to Investigate the Plant Phytochemical Cosmos. Nat. Prod. Rep. 2021, 38, 1729–1759. DOI: 10.1039/d1np00014d

(5) Broadhurst, D. I.; Kell, D. B. Statistical Strategies for Avoiding False Discoveries in Metabolomics and Related Experiments. Metabolomics 2006, 2 (4), 171–196. DOI: 10.1007/s11306-006-0037-z

(6) Saccenti, E.; Hoefsloot, H. C. J.; Smilde, A. K.; Westerhuis, J. A.; Hendriks, M. M. W. B. Reflections on Univariate and Multivariate Analysis of Metabolomics Data. Metabolomics 2014, 10, 361–374. DOI: 10.1007/s11306-013-0598-6

(7) Viant, M. R.; Kurland, I. J.; Jones, M. R.; Dunn, W. B. How Close Are We to Complete Annotation of Metabolomes? Curr. Opin. Chem. Biol. 2017, 36, 64–69. DOI: 10.1016/j.cbpa.2017.01.001

(8) Sumner, L. W.; Amberg, A.; Barrett, D.; et al. Proposed Minimum Reporting Standards for Chemical Analysis: Chemical Analysis Working Group (CAWG) Metabolomics Standards Initiative (MSI). Metabolomics 2007, 3 (3), 211–221. DOI: 10.1007/s11306-007-0082-2

Tiffany Liden and Kevin A. Schug are with the Department of Chemistry and Biochemistry at The University of Texas at Arlington, in Arlington, Texas. Evelyn Wang is with Shimadzu Scientific Instruments in Columbia, Maryland. Direct correspondence to: tiffany.liden@gmail.com
