Data Handling and Validation in Automated Detection of Food Toxicants Using Full Scan GC–MS and LC–MS

LCGC Asia Pacific

An overview of current chromatography-based food toxicant screening is presented.

During production, processing, storage and transport of food and feed, a variety of potentially hazardous compounds may enter the food chain. These include residues left after treatment of crops and animals with pesticides and veterinary drugs, natural toxins produced by fungi (mycotoxins) and plants, environmental contaminants (persistent pollutants) and processing contaminants. The potential presence of these compounds is an important issue in food and feed safety. Consequently, extensive legislation has been established to protect consumers from unnecessary or excessive exposure to these substances. Analytical chemists face a huge challenge in efficiently verifying that a wide variety of products comply with this legislation and, preferably at the same time, in providing occurrence data for 'new' contaminants that are not yet regulated. In short, hundreds of different products need to be analysed for thousands of known contaminants and more.

Within each class of contaminants, the current 'gold standard' for dealing with this challenge is the use of multi-analyte methods based on LC or GC with tandem MS detection. Such methods typically cover tens to several hundreds of analytes. They are well established in the field of pesticides,1–3 and other fields follow the same concept (e.g., mycotoxins4,5 and veterinary drugs6,7). The instrumental methods involve targeted acquisition, where detection of each compound is individually optimized during instrumental method development. The methods are typically extensively validated with respect to quantitative performance, and data handling usually involves a manual review of extracted ion chromatograms to verify peak assignment and integration.

A logical next step is to combine multi-methods across contaminant classes. The feasibility of this approach has recently been demonstrated8 by the simultaneous determination of pesticides, veterinary drugs, mycotoxins and plant toxins in a variety of food and feed commodities. Sample preparation for such integrated methods is very generic and straightforward (as simple as a single extraction/dilution step). Because the anticipated number of analytes to be covered in this approach is beyond what can easily be accommodated by tandem MS, full scan MS is the detection method of choice. Here the measurement is non-targeted, which makes the instrumental analysis much more straightforward (i.e., no application-specific instrument adjustments or optimization and no acquisition time windows are needed). In principle, the scope of the method is unlimited: as long as analytes elute from the column and are ionized in the source, they can be detected. The scope of the method is therefore set after, rather than before, the instrumental analysis.

The conclusion from the trend described above is that both sample preparation and instrumental analysis are becoming more and more generic and, to a certain extent, independent of the analyte of current or future interest. This also means that the main effort in terms of development, optimization and validation is clearly moving from sample preparation and instrumental analysis towards data processing to ensure reliable detection of the compounds of interest.

This paper will provide a brief overview of the full scan MS options currently available for chromatography-based wide-scope screening of food toxicants. Aspects related to automated detection and identification will be discussed on the basis of the literature and experience in the authors' laboratory, with emphasis on data handling. An in-house developed software package (MetAlign), which features instrument-independent, uniform data preprocessing and automated identification, will be presented.

General Considerations on Automated Detection/Identification After Full Scan MS Measurement

The raw data files obtained after GC or LC with full scan MS detection are typically 20–500 MB and contain information on retention time (one- or two-dimensional), m/z 50–1000 (nominal or accurate mass) and abundance (from noise to saturation). Getting meaningful results out of this large amount of information efficiently is not a trivial task. In principle, two approaches can be pursued: non-targeted and targeted data evaluation. With non-targeted data analysis, the raw data file is processed to obtain a list of all individual peaks present in the entire chromatographic run. The number of peaks can run into the tens of thousands, which rules out manual identification. Without any a priori information, one could restrict the evaluation to the major peaks, but these usually originate from non-toxic endogenous compounds rather than from contaminants, which are mostly present at low levels. Another option is to filter out contaminants by comparing the overall LC–MS or GC–MS profiles of samples with profiles obtained after analysis of the corresponding non-contaminated product. This would require extensive databases for the different food commodities, which have yet to be generated. Consequently, a more feasible option for the time being is a targeted data evaluation based on libraries containing information on the target compounds. All individual peaks assigned after data (pre)processing can then be matched against the information in the library. Alternatively, the software can use the target library as the starting point and restrict the search to compound-specific retention time windows and ion traces in the raw data file. Either way, the result is a match between experimental data and library data. Depending on the MS device used, this match can be based on a combination of retention time(s), ion ratios, mass spectra, isotope patterns and/or mass accuracy.
Criteria have to be set for each of the match parameters in such a way that the numbers of so-called 'false negatives' and 'false positives' are within acceptable limits. False negatives are compounds known to be present in the sample but missed by the automated screening method; false positives are compounds known to be absent but appearing on the list of (provisionally) detected compounds.
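The targeted matching and the bookkeeping of false negatives and false positives described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the logic of any particular instrument software or of MetAlign: the `Peak` and `Target` classes, the `screen` and `classify` helpers, the tolerance defaults (0.2 min, 5 ppm) and the example values are all hypothetical, and only retention time and accurate mass are used as match parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Peak:
    """Hypothetical peak found after (pre)processing: retention time and measured m/z."""
    rt_min: float
    mz: float


@dataclass(frozen=True)
class Target:
    """Hypothetical library entry: name, expected retention time and theoretical m/z."""
    name: str
    rt_min: float
    mz: float


def ppm_error(measured: float, theoretical: float) -> float:
    """Mass error in parts per million."""
    return (measured - theoretical) / theoretical * 1e6


def screen(peaks, library, rt_tol_min=0.2, mz_tol_ppm=5.0):
    """Return the names of targets matched by at least one peak within both tolerances."""
    detected = set()
    for target in library:
        for peak in peaks:
            if (abs(peak.rt_min - target.rt_min) <= rt_tol_min
                    and abs(ppm_error(peak.mz, target.mz)) <= mz_tol_ppm):
                detected.add(target.name)
                break  # one matching peak is enough to report the target
    return detected


def classify(detected, spiked):
    """For a spiked blank sample: false negatives were spiked but not reported,
    false positives were reported although they were not spiked (known absent)."""
    false_negatives = spiked - detected
    false_positives = detected - spiked
    return false_negatives, false_positives


# Hypothetical example: two targets spiked into a blank matrix.
library = [Target("A", 5.00, 300.1000), Target("B", 8.00, 250.0500)]
peaks = [Peak(5.05, 300.1009), Peak(8.40, 250.0501)]
detected = screen(peaks, library)
print(classify(detected, spiked={"A", "B"}))  # -> ({'B'}, set())
```

Target B is missed here because its peak lies 0.4 min from the library retention time. Widening `rt_tol_min` or `mz_tol_ppm` recovers it, but in a real matrix the same widening lets more unrelated peaks through as false positives; that is the trade-off the criteria have to balance.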

GC-full Scan MS

Various options for full scan MS detection are available for GC. Single quadrupole and ion trap instruments have been commonly applied in food analysis since the 1990s, especially for the determination of pesticide residues in vegetables and fruits. Sensitivity limitations encountered in the past when using full scan acquisition have been overcome by large volume injection using PTV injectors9 and by improvements in MS devices. A big advantage of GC–MS is that the mass spectra obtained after electron ionization are highly characteristic and, to a large extent, instrument independent. This means that spectra generated elsewhere can be used and that libraries can be purchased. Despite this screening potential, the instruments were, and still are, mainly used for quantitative analysis rather than for screening. One reason is that the spectra of the analytes in the sample often contain interfering ions from other compounds, which complicates automated matching against library spectra. To a certain extent, the quality of the extracted spectra can be improved by software algorithms, such as deconvolution, that resolve spectra from overlapping peaks and background. Deconvolution software tools are available both commercially and as free downloads (for example, AMDIS from NIST10). A second reason for the limited exploitation of the screening potential is the lack of dedicated software for automated detection. The standard software supplied with GC–MS instruments is usually able to search sample spectra against libraries, but is often poorly suited to, and not user-friendly for, automated identification and report generation in routine practice. This has led users to develop in-house solutions without11,12 or with deconvolution.13,14 For the user, deconvolution tools that are integrated into the instrument's data analysis software are most convenient.
Some instrument manufacturers offer this as default15 or as an optional package combined with dedicated libraries that include not only the mass spectra but also retention times for prescribed GC conditions.16 For extracts of increasing complexity and/or at lower analyte levels, GC with full scan quadrupole or ion trap MS lacks selectivity, even when preprocessing algorithms such as deconvolution are applied. Currently, there are two options to improve selectivity in GC with full scan MS. The first is the use of high resolution/accurate mass MS detectors (GC–hrTOF-MS). The higher selectivity arises from the ability to separate ions that have the same nominal mass but differ in their exact mass. A main disadvantage is that, to take full advantage of this, exact mass library spectra are needed. Because these are not (yet) available, they would need to be generated by the user. In addition, the current mass resolving power (~6000) and dynamic range are not adequate for all applications. The second option to improve selectivity is enhanced chromatographic resolution. The current state of the art here is comprehensive GC (GC×GC). Compounds are typically first separated by volatility on a regular GC column and then by polarity on a second, short, narrow-bore column. A visualization of the resulting separation is shown in Figure 1. For full scan MS detection, a high scan speed (~100–250 Hz) is required to obtain sufficient data points across the narrow (100 ms) chromatographic peaks; at 100 Hz, for example, a 100 ms wide peak is described by about ten spectra. TOF-MS detectors allowing scan speeds up to 500 Hz are available (at nominal resolution only). Cleaner spectra are obtained, in the first place, because of the enhanced GC separation, and here too data preprocessing for peak purification can be performed.
Given the comprehensiveness of this type of analysis, its main application lies in the profiling and classification of samples in various areas, including metabolomics, petrochemicals, food and the environment,17 but several applications for the qualitative and quantitative determination of residues and contaminants have been reported.18–20 Owing to the complexity and the amount of data obtained after comprehensive GC analysis, data handling is a major challenge. Both proprietary and in-house developed software tools for data handling have been described; they have been reviewed in detail by Pierce et al.21 At the moment, no general 'ready-to-use' solution is available for automated detection. Consequently, in our laboratory, data handling after GC×GC–TOF-MS analysis is performed using a combination of the instrument software for initial processing and deconvolution and an Excel macro for matching retention times, data reduction and generation of a list of detected compounds. An in-house developed alternative for preprocessing and resolving overlapping peaks, called MetAlign, is currently being evaluated.
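Automated matching of an EI spectrum against a library is often scored with a dot-product (cosine) similarity. The sketch below is a simplified, unweighted cosine score written for illustration only; it is not the NIST/AMDIS algorithm, which applies its own weighting, and the spectra are hypothetical {m/z: intensity} dictionaries at nominal mass. It shows why co-eluting interferences complicate automated matching: one extra ion in the sample spectrum lowers the score even though every library ion is present in the correct ratio.

```python
import math


def cosine_similarity(sample: dict, library: dict) -> float:
    """Unweighted cosine similarity between two nominal-mass spectra given as
    {m/z: intensity}. 1.0 means identical relative intensities; 0.0 means no shared ions."""
    shared = sample.keys() & library.keys()
    dot = sum(sample[mz] * library[mz] for mz in shared)
    norm_sample = math.sqrt(sum(v * v for v in sample.values()))
    norm_library = math.sqrt(sum(v * v for v in library.values()))
    if norm_sample == 0.0 or norm_library == 0.0:
        return 0.0
    return dot / (norm_sample * norm_library)


# Hypothetical spectra (m/z: intensity).
library_spectrum = {100: 100.0, 150: 50.0, 200: 25.0}
clean_sample = {100: 200.0, 150: 100.0, 200: 50.0}  # same pattern, twice as intense
interfered_sample = {**clean_sample, 120: 300.0}    # plus a co-eluting matrix ion

print(round(cosine_similarity(clean_sample, library_spectrum), 3))       # 1.0
print(round(cosine_similarity(interfered_sample, library_spectrum), 3))  # 0.607
```

Deconvolution aims to remove ions such as the one at m/z 120 before the comparison, which is why it raises match scores for analytes in complex extracts.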

Figure 1: Three-dimensional GC×GC–TOF-MS image obtained after a 10 µL injection of a standard solution containing >200 pesticides (for more details see reference 19).

LC-full Scan MS

For full scan measurement of low levels of toxicants in food and feed after LC separation, high resolution/accurate mass MS detectors are required. During ionization, little or no fragmentation occurs and compounds are detected through the accurate mass of their (de)protonated molecule or adduct. TOF-MS has been used in most investigations. Especially over the past few years, resolution, mass accuracy, dynamic range, scan speed and sensitivity have greatly improved. In addition, a single stage Orbitrap-MS has been introduced, making a resolving power up to 100000 an affordable option for food toxicant analysis.
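Because LC–MS screening relies on the accurate mass of the (de)protonated molecule or an adduct, the target m/z values in a library can be calculated from the molecular formula. A minimal sketch follows; the element table covers only a few elements with rounded monoisotopic masses, the function names are hypothetical, and only three common singly charged ion types are handled.

```python
import re

# Rounded monoisotopic masses (u) of the most abundant isotope of each element.
MONOISOTOPIC_MASS = {
    "C": 12.0, "H": 1.00782503, "N": 14.00307401, "O": 15.99491462,
    "P": 30.97376199, "S": 31.97207117, "Cl": 34.96885268,
}
PROTON_MASS = 1.00727646688    # u
ELECTRON_MASS = 0.00054857991  # u
SODIUM_MASS = 22.98976928      # u, neutral 23Na atom


def parse_formula(formula: str) -> dict:
    """Parse a simple formula such as 'C9H9N3O2' (no brackets or charges)."""
    counts = {}
    for element, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[element] = counts.get(element, 0) + (int(number) if number else 1)
    return counts


def monoisotopic_mass(formula: str) -> float:
    """Sum of monoisotopic element masses for the neutral molecule M."""
    return sum(MONOISOTOPIC_MASS[el] * n for el, n in parse_formula(formula).items())


def ion_mz(formula: str, ion: str = "[M+H]+") -> float:
    """m/z of a singly charged ion of M."""
    m = monoisotopic_mass(formula)
    if ion == "[M+H]+":
        return m + PROTON_MASS
    if ion == "[M-H]-":
        return m - PROTON_MASS
    if ion == "[M+Na]+":
        return m + SODIUM_MASS - ELECTRON_MASS
    raise ValueError(f"unsupported ion type: {ion}")


print(f"{ion_mz('C9H9N3O2'):.4f}")  # carbendazim [M+H]+ -> 192.0768
```

Using the proton mass (rather than the hydrogen atom mass) for [M+H]+ and [M−H]− already accounts for the electron, which matters at the ppm level.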

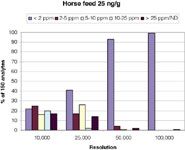

For selective and efficient automated detection, reliable high mass accuracy is essential. Instrument specifications are typically within 5 ppm or even better. In practice, however, this mass accuracy can only be achieved when co-eluting compounds with the same nominal mass are mass spectrometrically resolved; otherwise their signals merge and the assigned mass shifts. Consequently, for real-life samples the resolving power of the MS is an important parameter as well, as shown recently by Kellmann et al.22 The resolving power required depends on the application, that is, on the complexity of the extract (sample complexity, sample preparation), the analyte (sensitivity) and its concentration. For generic extracts of honey, a resolving power of 25000 was sufficient to obtain a mass accuracy of <2 ppm for pesticides, natural toxins and veterinary drugs down to the 0.01 mg/kg level. For a much more complex animal feed matrix, 100000 was needed. The effect of resolving power on assigned mass accuracy is illustrated in Figure 2.
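The link between resolving power and assigned mass accuracy can be illustrated with simple arithmetic. The sketch below assumes the FWHM definition of resolving power (R = m/Δm), treats two ions as unresolved when their mass difference is smaller than the peak width, and models the assigned mass of unresolved ions as their intensity-weighted centroid. These are simplifications, and the m/z values and function names are hypothetical.

```python
def ppm_error(measured_mz: float, theoretical_mz: float) -> float:
    """Mass error of a measurement in parts per million."""
    return (measured_mz - theoretical_mz) / theoretical_mz * 1e6


def fwhm(mz: float, resolving_power: float) -> float:
    """Peak width (FWHM) at a given m/z, assuming R = m / delta_m."""
    return mz / resolving_power


def required_resolving_power(mz1: float, mz2: float) -> float:
    """Approximate R needed for the peak width to equal the m/z difference."""
    return ((mz1 + mz2) / 2) / abs(mz1 - mz2)


def is_resolved(mz1: float, mz2: float, resolving_power: float) -> bool:
    """Crude criterion: resolved if the m/z difference exceeds the FWHM."""
    return abs(mz1 - mz2) > fwhm((mz1 + mz2) / 2, resolving_power)


def assigned_mz(ions, resolving_power):
    """ions: list of (m/z, intensity) with the analyte first. Ions not resolved from
    the analyte merge into one signal, reported at the intensity-weighted centroid."""
    analyte_mz = ions[0][0]
    merged = [(mz, inten) for mz, inten in ions
              if not is_resolved(analyte_mz, mz, resolving_power)]
    total = sum(inten for _, inten in merged)
    return sum(mz * inten for mz, inten in merged) / total


# Hypothetical analyte ion plus an equally intense matrix ion 0.0100 u higher.
ions = [(300.1000, 1.0), (300.1100, 1.0)]
print(round(required_resolving_power(300.1000, 300.1100)))  # about 30000
for r in (25000, 100000):
    print(r, round(ppm_error(assigned_mz(ions, r), 300.1000), 1))  # 16.7, then 0.0
```

In this toy case a resolving power of 25000 leaves the two ions merged and shifts the assigned mass by about 17 ppm, far outside a 5 ppm tolerance, while 100000 separates them and the analyte mass is assigned correctly.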

Figure 2: Effect of resolving power on mass assignment of residues and contaminants (0.025 mg/kg) in a complex compound feed matrix (for details see reference 22).

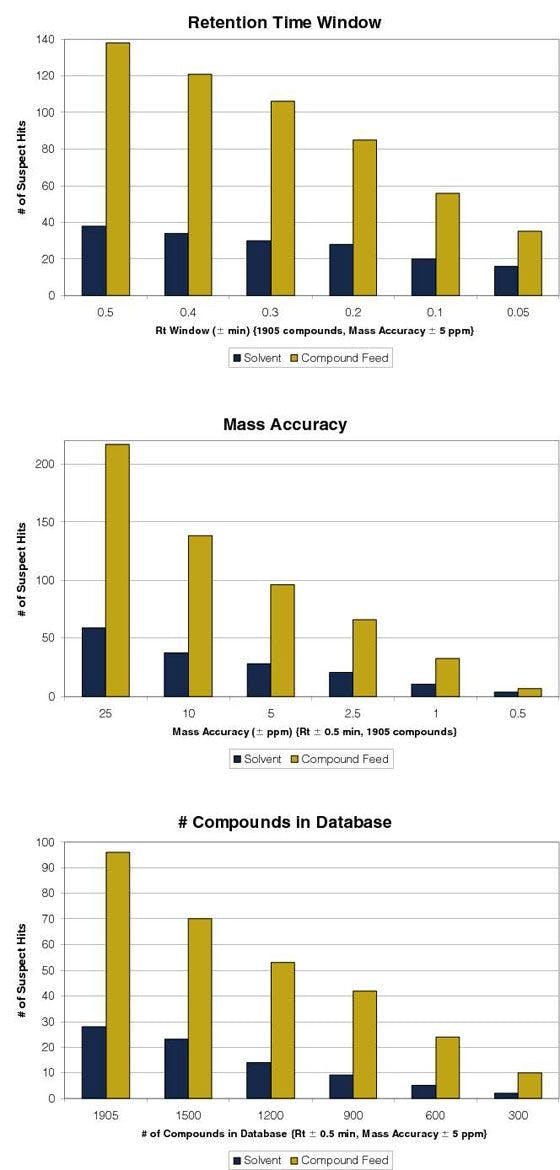

While the hardware to enable screening is becoming more and more fit for purpose, the development of software and databases for automated detection is still very much in progress, with major improvements made in the past two years. In its simplest form, analytes can be detected automatically in the raw data by matching the accurate mass of the peaks found against a list of target compounds with their exact mass and retention time. However, accurate mass and retention time alone are often not sufficiently unique for selective automated identification. Depending on the tolerances set for matching retention time and accurate mass, the response threshold, the complexity of the matrix and the number of compounds in the target database, the number of potential detections triggering further confirmatory (data) analysis may be too high for screening purposes. This is illustrated in Figure 3. To increase specificity, isotope patterns can be used. Like the exact mass, they can be calculated, so no prior experimental determination is required. However, for small molecules the isotope ions (except those of chlorine and bromine) have substantially lower abundance, which raises the limit of identification. Another option for improving specificity in automated detection is the use of analyte fragments. Such fragments can be induced during ionization (so-called in-source collision-induced dissociation, IS-CID) or in a collision cell (e.g., in a QTOF); in both cases no precursor ion selection is performed and fragmentation is done under fixed, generic conditions. The formation of fragment ions depends to some extent, and their relative abundance certainly depends, on the instrument and the conditions applied. Therefore, in contrast to GC–MS spectral databases, fragmentation patterns cannot easily be transferred to other laboratories and need to be generated by the user (or vendor) for each instrument.
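Isotope patterns can be calculated from the elemental composition without reference measurements. The sketch below uses a binomial approximation with rounded natural abundances (about 1.07% 13C and 24.24% 37Cl) and ignores contributions from all other elements, so it is illustrative only; the function names and the 20% relative tolerance in `ratio_agrees` are hypothetical. It shows why chlorine is so useful: one chlorine atom gives an M+2 ion of about one third of the M ion, whereas twelve carbons give an M+1 ion of only about 13%.

```python
from math import comb

P_13C = 0.0107   # approximate natural abundance of 13C
P_37CL = 0.2424  # approximate natural abundance of 37Cl


def carbon_m_plus_1(n_carbon: int) -> float:
    """M+1/M intensity ratio from 13C only (binomial ratio n*p/(1-p))."""
    return n_carbon * P_13C / (1 - P_13C)


def chlorine_pattern(n_cl: int) -> list:
    """Relative intensities of M, M+2, M+4, ... from chlorine only, normalised to M = 1."""
    probs = [comb(n_cl, k) * P_37CL**k * (1 - P_37CL) ** (n_cl - k)
             for k in range(n_cl + 1)]
    return [p / probs[0] for p in probs]


def ratio_agrees(observed: float, predicted: float, rel_tol: float = 0.2) -> bool:
    """Hypothetical acceptance check: observed ratio within +/- rel_tol of the prediction."""
    return abs(observed - predicted) <= rel_tol * predicted


print(round(carbon_m_plus_1(12), 3))                   # 0.13
print([round(x, 3) for x in chlorine_pattern(2)])      # [1.0, 0.64, 0.102]
print(ratio_agrees(0.30, chlorine_pattern(1)[1]))      # True
```

Because a weak M+1 ion from carbon falls below the response threshold sooner than the analyte ion itself, an isotope criterion effectively raises the lowest level at which a compound can be identified, as noted above.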

Figure 3: Effect of retention time tolerance, mass accuracy tolerance and number of entries in the target library on the number of provisionally detected compounds in non-contaminated samples (low response threshold). Sample preparation: see reference 8; LC–MS analysis: see reference 22.

Toward Generic Data Processing and Data Mining

While methods for generic extraction and subsequent full scan instrumental analysis are maturing, universal approaches for data (pre)processing and toxicant detection, and formats for archiving preprocessed result files, hardly exist. This means that data files containing comprehensive information on a wide variety of food and feed samples, and the (un)known toxicants they may contain, can only be (further) explored by the laboratory that generated them. That laboratory might only be interested in pesticides (and perform a targeted data analysis limited to them), while others might be interested in natural toxins in the same commodity. Furthermore, libraries used for automated detection may differ in scope, which affects the output. The issue as such is not unique to food toxicant analysis but, unlike in other areas such as metabolomics, it has hardly been addressed so far. In our institute, as a spin-off from the development of data handling software for metabolomics, preprocessing tools are being applied and dedicated search tools have been developed for food toxicant screening. The preprocessing tool, called MetAlign, is freely available and described in detail elsewhere.23 One of the main features of MetAlign is that it can handle data, either directly or after automated conversion, from different vendors covering a broad range of GC–MS and LC–MS instruments, with both nominal and accurate mass. Data preprocessing involves baseline correction, noise elimination and peak picking. The software also deals with detector saturation and brand- and instrument-specific artifacts. The output is a 50–500× reduced data file in a uniform format that can be exported to Excel or to formats that allow visual review with the proprietary instrument software already available in the laboratory (see Figure 4).
Data alignment can be performed as well, which can be of interest when searching for true unknowns by comparing profiles of the same products.
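The preprocessing steps named above (baseline correction, noise elimination and peak picking) can be illustrated for a single ion trace. The sketch below is a deliberately simple stand-in and not MetAlign's algorithm: it assumes a constant baseline (the median of the trace), estimates noise as the scaled median absolute deviation, and keeps only local maxima whose height exceeds a hypothetical signal-to-noise factor. Real preprocessing handles drifting baselines, peak shapes, saturation and thousands of ion traces at once; `synthetic_trace` generates made-up data.

```python
import math
from statistics import median


def baseline_and_noise(trace):
    """Constant baseline (median of the trace) and robust noise estimate
    (median absolute deviation scaled to a standard deviation)."""
    baseline = median(trace)
    mad = median([abs(x - baseline) for x in trace])
    return baseline, 1.4826 * mad


def pick_peaks(trace, snr=10.0):
    """Return (scan index, baseline-corrected height) for local maxima whose
    height above the baseline exceeds snr times the noise estimate."""
    baseline, noise = baseline_and_noise(trace)
    threshold = snr * noise
    peaks = []
    for i in range(1, len(trace) - 1):
        height = trace[i] - baseline
        is_local_max = trace[i] > trace[i - 1] and trace[i] >= trace[i + 1]
        if is_local_max and height > threshold:
            peaks.append((i, height))
    return peaks


def synthetic_trace(n_scans=100, apex=50, sigma=3.0, height=500.0):
    """Hypothetical ion trace: level 100, a repeating -2..+2 'noise' pattern and one Gaussian peak."""
    return [100.0 + (i % 5) - 2
            + height * math.exp(-((i - apex) ** 2) / (2 * sigma**2))
            for i in range(n_scans)]


trace = synthetic_trace()
print(len(trace), "scans ->", pick_peaks(trace))
```

Reducing a 100-scan trace to a single (scan, height) entry also shows, on a small scale, where the strong data reduction of preprocessed files comes from.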

Figure 4: LC–TOF-MS data file (a) before and (b) after preprocessing using MetAlign (see reference 23).

The resulting files can be searched against target databases using dedicated modules for GC–MS, GC×GC–MS (application to be published elsewhere) and LC–MS. Because of their strongly reduced size, the files can be stored easily and searching is fast. A comparison of automated detection performance after GC×GC–TOF-MS analysis between the instrument software and the MetAlign/GC×GC–MS search showed that, in feed samples spiked with 106 analytes, more compounds were found using the MetAlign software (62% vs 32% with the instrument software at the 0.0025 mg/kg level), without an increase in the number of false positives. A generic approach for data preprocessing can facilitate data sharing and data mining, thereby making much more efficient use of (existing) information available at different laboratories (Figure 5).

Figure 5: Data (pre)processing: MetAlign as interface between instrument raw data and a uniform database for data mining.23

Validation of Chromatography-based (Qualitative) Screening Methods

Once automated detection and reporting have been optimized, the overall method (i.e., sample preparation + instrumental analysis + automated data processing and reporting) has to be validated before it can be applied in routine practice. This essentially means that, for a certain matrix (or group of matrices) at the anticipated reporting level, the level of confidence in the detection of the target analytes needs to be established. False negatives need to be minimized, for obvious reasons. False positives are undesirable because they trigger further manual data evaluation and confirmatory analyses, which take time and effort.

Up to now, screening performance has only been evaluated in an exploratory way, using limited numbers of spiked samples or by comparing the detection rate of the automated screening method with (earlier) results from established quantitative methods. Van der Lee et al. tested automated identification using GC×GC–TOF-MS and a test set of 106 pesticides and contaminants spiked into a complex compound feed sample at various levels.19 Detection rates were clearly concentration dependent, falling from 100% to 73% to 17% at 0.10, 0.01 and 0.001 mg/kg, respectively. Mezcua et al.24 investigated the potential of LC–TOF-MS based screening for pesticides in vegetables and fruits. Automated detection was done using an in-house compiled database and the instrument software. Most pesticides found by the conventional LC–MS–MS method were also found automatically by the LC–TOF-MS screening method.

Very recently, two groups carried out a more extensive verification of automated detection of pesticides using GC–MS (single quadrupole) analysis combined with Agilent's Deconvolution Reporting Software tool. Mezcua et al.25 spiked 95 pesticides into eight vegetable and fruit samples at levels between 0.02 and 0.10 mg/kg. The evaluation by Norli et al.26 involved a test set of 177 pesticides spiked in triplicate into 3 matrices at 0.02 and 0.1 mg/kg. The studies revealed that detectability is, as expected, compound, matrix and concentration dependent and that, even when the same extract was analysed repeatedly, automated detection was not always consistent. In all studies mentioned, the data generated were too limited to derive a confidence level for the detectability of the target analytes in a given matrix (group). On the other hand, through the analysis of real samples, the studies did show that, compared with conventional methods, more pesticides could be found in less time, clearly demonstrating the potential of the approach. This has been encouraging enough to apply the methods as an extension to the established quantitative multi-methods, because any additional toxicant found automatically can be considered worthwhile.

To summarize, systematic validation studies of qualitative screening methods are still lacking. One reason might be the limited availability, up to now, of appropriate software and/or target compound libraries. Another might be that, in contrast to quantitative methods, validation procedures and criteria for qualitative chromatography-based methods have not been well addressed in guidance documents for residue/contaminant analysis. For veterinary drugs, Commission Decision 2002/657/EC states that screening methods should be validated and that the 'false compliant rate (false negatives) should be <5% at the level of interest',27 without providing much detail on how to establish this. Fortunately, beginning this year, both the veterinary drug28 and the pesticide29 communities in the EU have issued supplementary and updated guidance documents addressing this issue. Essentially, both documents prescribe that, in an initial validation, at least 20 samples spiked at the anticipated screening reporting level (< MRL) need to be analysed and the target analyte(s) need to be detectable in at least 19 out of 20 samples (corresponding to the <5% false negative rate). The initial validation needs to be continuously supplemented by the analysis of spiked samples during routine analysis, and the data need to be re-assessed periodically to demonstrate validity based on the extended data set.
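The initial validation criterion can be expressed as a simple check per analyte. The sketch below assumes that results are recorded as one True/False detection outcome per spiked sample; the function names are hypothetical, and the "at least 19 out of 20" requirement is written as integer arithmetic (at least 95% detected) so that exactly 19 of 20 passes without floating-point surprises.

```python
def passes_initial_validation(detected: list, min_samples: int = 20) -> bool:
    """True if at least min_samples spiked samples were analysed and the analyte
    was detected in at least 95% of them (19 of 20)."""
    n = len(detected)
    if n < min_samples:
        return False  # too few spiked samples for an initial validation
    hits = sum(detected)
    return hits * 100 >= 95 * n


def validated_analytes(results: dict) -> list:
    """results: {analyte: [detected in spiked sample 1, 2, ...]} -> analytes meeting the criterion."""
    return sorted(name for name, outcomes in results.items()
                  if passes_initial_validation(outcomes))


# Hypothetical outcomes for two analytes in 20 spiked samples each.
results = {"X": [True] * 19 + [False], "Y": [True] * 17 + [False] * 3}
print(validated_analytes(results))  # ['X']
```

During routine use, the same check can simply be rerun on the growing list of outcomes, which corresponds to the periodic re-assessment on the extended data set.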

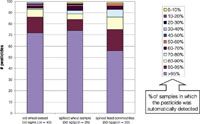

With the recent guidelines in mind, a first retrospective evaluation of automated detection of pesticides and contaminants in feed commodities after GC×GC–TOF-MS analysis was done in the authors' laboratory. The evaluation was based on spiked samples analysed concurrently with routine samples over a period of 9 months. The results for 100 target compounds at the 0.05 mg/kg level are summarized in Figure 6. They show that, at the software detection settings applied (which were not yet exhaustively optimized), >90% of the target compounds are detected with a confidence level >70%. In a retrospective validation exercise for wheat, the validation criterion was met for 70% of the analytes in the test set. Across different feed commodities, this percentage was substantially lower, although it should be noted that this type of application is one of the most challenging in food/feed toxicant analysis.
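A retrospective evaluation of this kind comes down to counting, per compound, how often it was detected in the spiked samples analysed alongside routine samples. A minimal sketch, assuming the results are available as hypothetical (compound, detected) records; the function names are illustrative and the 70% level mirrors the figure quoted above.

```python
from collections import defaultdict


def detection_confidence(records):
    """records: iterable of (compound, detected) pairs from spiked QC samples.
    Returns {compound: fraction of spiked samples in which it was detected}."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for compound, detected in records:
        totals[compound] += 1
        hits[compound] += int(detected)
    return {c: hits[c] / totals[c] for c in totals}


def fraction_above(confidence, level=0.70):
    """Fraction of compounds whose detection rate exceeds `level`."""
    if not confidence:
        return 0.0
    return sum(1 for v in confidence.values() if v > level) / len(confidence)


# Hypothetical records: compound A found in 9 of 10 spikes, B in 6 of 10.
records = ([("A", True)] * 9 + [("A", False)]
           + [("B", True)] * 6 + [("B", False)] * 4)
confidence = detection_confidence(records)
print(confidence, fraction_above(confidence))  # {'A': 0.9, 'B': 0.6} 0.5
```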

Figure 6: Reliability of automated detection of residues and contaminants in feed commodities analysed with GC×GC–TOF-MS. Data processing and automated detection: ChromaTOF 4.1 + Excel macro.

Conclusions

Chromatography combined with advanced full scan mass spectrometry is developing into a universal tool for the screening (and quantitative determination) of a wide variety of food toxicants. The time spent on sample preparation and on setting up instrumental acquisition methods is declining. The opposite is true for data processing, optimization of software parameters for automated detection and finding the fit-for-purpose balance between false positives and false negatives, but progress is being made. The screening methods have already proven their added value. In the foreseeable future, such methods are likely to become more dominant, thereby enabling more efficient and effective control of food safety and providing more comprehensive, and more rapidly available, data for risk assessment.

Hans Mol is a senior scientist and heads the Natural Toxins and Pesticides group at RIKILT. He has over 15 years' experience in residue and contaminant analysis in the food chain using chromatography with a range of mass spectrometric techniques. Paul Zomer (BSc) is a research chemist working in chromatography with various mass spectrometric detection techniques, applied to pesticide residues and mycotoxins in feed and food matrices. Arjen Lommen is a senior scientist focusing on the development of data preprocessing and alignment software and the adaptation of this software for applications ranging from metabolomics and profiling to targeted screening. Other areas of expertise include NMR. Henk van der Kamp (BSc) is a research chemist using gas chromatography, mainly focusing on GC×GC–TOF-MS analysis of food and feed with an emphasis on data evaluation and reporting. Martin van der Lee is a junior scientist with extensive experience in GC×GC–TOF-MS analysis of pesticides and environmental contaminants. His current work also focuses on elemental speciation analysis using chromatography with ICP-MS. Arjen Gerssen is finishing his PhD on the analysis of marine biotoxins. As well as his continued involvement in this area, his current research topics include nano-LC–MS and the development and application of software tools for data evaluation in chromatographic screening methods and data mining.

References

1. H.G.J. Mol et al., Anal. Bioanal. Chem., 389, 1715–1754 (2007).

2. P. Payá et al., Anal. Bioanal. Chem., 389, 1697–1714 (2007).

3. S.J. Lehotay et al., J. AOAC Int., 88(2), 595–614 (2005).

4. M. Spanjer, P.M. Rensen and J.M. Scholten, Food Additives and Contaminants, 25(4), 472–489 (2008).

5. V. Vishwanath et al., Anal. Bioanal. Chem., 395, 1355–1372 (2009).

6. S. Bogialli and A. Di Corcia, Anal. Bioanal. Chem., 395(4), 947–966 (2009).

7. G. Stubbings and T. Bigwood, Anal. Chim. Acta, 637(1–2), 68–78 (2009).

8. H.G.J. Mol et al., Anal. Chem., 80, 9450–9459 (2008).

9. E. Hoh and K. Mastovska, J. Chromatogr. A, 1186(1–2), 2–15 (2008).

10. National Institute of Standards and Technology, Automated Mass Spectral Deconvolution and Identification System (AMDIS). http://chemdata.nist.gov/mass-spc/amdis

11. H.J. Stan, J. Chromatogr. A, 892, 347–377 (2000).

12. K. Kadokami et al., J. Chromatogr. A, 1089, 219–226 (2005).

13. A. Robbat, J. AOAC Int., 91, 1467–1477 (2008).

14. W. Zhang, P. Wu and C. Li, Rapid Commun. Mass Spectrom., 20, 1563–1568 (2006).

15. S. de Koning et al., J. Chromatogr. A, 1008, 247–252 (2003).

16. P.L. Wylie, Screening for 926 pesticides and endocrine disruptors by GC/MS with Deconvolution Reporting Software and a new pesticide library, Agilent Application note 5989-5076EN (2006).

17. H.J. Cortes et al., J. Sep. Sci., 32(5–6), 883–904 (2009).

18. L. Mondello et al., Anal. Bioanal. Chem., 389, 1755–1763 (2007).

19. M.K. van der Lee et al., J. Chromatogr. A, 1186, 325–339 (2008).

20. S.H. Patil et al., J. Chromatogr. A, 1217, 2056–2064 (2009).

21. K.M. Pierce et al., J. Chromatogr. A, 1184, 341–352 (2008).

22. M. Kellmann et al., J. Am. Soc. Mass Spectrom., 20(8), 1464–1476 (2009).

23. A. Lommen, Anal. Chem., 81(8), 3079–3086 (2009).

24. M. Mezcua et al., Anal. Chem., 81(3), 913–929 (2009).

25. M. Mezcua et al., J. AOAC Int., 92(6), 1790–1806 (2009).

26. H.R. Norli, A. Christiansen and B. Hole, J. Chromatogr. A, 1217, 2056–2064 (2010).

27. 2002/657/EC, Official Journal of the European Communities L 221/8-36, Commission decision of 12 August 2002 implementing Council Directive 96/23/EC concerning the performance of analytical methods and the interpretation of results.

28. Community Reference Laboratories Residues (CRLs), Guidelines for the validation of screening methods for residues of veterinary medicines (initial validation and transfer), http://ec.europa.eu/food/food/chemicalsafety/residues/Guideline_Validation_Screening_en.pdf

29. SANCO/10684/2009, Method validation and quality control procedures for pesticides residues analysis in food and feed, http://ec.europa.eu/food/plant/protection/resources/qualcontrol_en.pdf
