- LCGC North America-07-01-2020

- Volume 37

- Issue 7

Are You Invalidating Out-of-Specification (OOS) Results into Compliance?

Are your OOS investigations scientifically sound and is the assignable cause correct? Or are OOS results invalidated using the ever-popular justification of analyst error?

Out-of-specification (OOS) results and their proper investigation are one of the current focus areas in data integrity inspections and audits.

Life moves on, and this is reflected in the shifting focus of data integrity issues during regulatory inspections. Much of the original focus during inspections was on shared user identities, conflicts of interest with normal users granted administrator access to an application, unofficial testing, data deletion, time traveling, and not having audit trails or turning them off (1). Very recent warning letters show that these problems are still being found. Examples include Tismore (2), where all analysts had administrator privileges and there were data deletions and aborted runs, and Shriram Institute, which was told by the Food and Drug Administration (FDA) in 2016 to turn chromatography data system (CDS) audit trails on, and only did this four days before the next inspection in 2019 (3). Better late than never? Not a chance!

However, the Shriram Institute warning letter also contains a citation for a current inspection focus, and the subject of this article, which is the investigation and invalidation of out-of-specification (OOS) results:

Your Quality Unit (QU) failed to ensure that your laboratory personnel follow written procedures. For example, our investigators observed at least <redacted> samples tested between March 2019 and September 2019 in which out-of-specification (OOS) results were not investigated as required in your procedures. Your head of Quality Assurance informed our investigator during the inspection that failures are investigated only upon customer request (3).

Note the highlighted text: Do the regulations state or imply you only investigate the OOS results you want? Not a chance! Unfortunately, there is not enough space to show the company response to the 483 observations and the huge amount of work that FDA required to remediate the problem, so please read the warning letter to understand why it is important to get OOS investigations right.

Lupin received a warning letter in 2017 (4). The first citation focuses on OOS results, and portions of it are presented below.

1. Your firm failed to thoroughly investigate any unexplained discrepancy or failure of a batch or any of its components to meet any of its specifications, whether or not the batch has already been distributed (21 CFR 211.192).

Your firm frequently invalidated initial out-of-specification (OOS) laboratory results without an adequate investigation that addressed potential manufacturing causes.

A. Assay Failure

While conducting component release testing on <redacted> active pharmaceutical ingredient (API) batch <redacted>, your firm obtained a failing assay result of <redacted>% (specification range <redacted>%).

Despite the findings of multiple values close to the original OOS value, your firm invalidated the initial failing result, stating that the initial result “shall be considered an outlier and retest results shall be reported as final results.” Although the investigation failed to identify a conclusive laboratory root cause, you did not conduct an evaluation of your supplier, and reported an average result for batch release.

B. Content Uniformity Failure

Your investigation of content uniformity OOS results for <redacted> tablets for batch <redacted> was inadequate. Two individual units and the acceptance value (AV) were OOS for this batch, which was an exhibit batch filed in your <redacted>.

The initial assessment of the OOS results found no evidence of laboratory error by the analyst. Retests from stock solution and re-sonicated samples yielded results consistent with the original OOS results, and ruled out improper sonication and dilution error as root causes. Although the investigation did not demonstrate a conclusive assignable cause, you surmised that the “probable laboratory error” was inadequate cleaning of the <redacted> shaft by the analyst. You then invalidated the initial OOS results and reported test results from a new set of <redacted> units that passed specifications. Your firm’s investigation indicated that this “confirmed” that there was a laboratory error.

The problem with the quotations above from the warning letter is that there appear to be only a few examples of invalidation of OOS results at the company. However, you need to read the 483 observation to see the magnitude of the OOS invalidation problem (5), which is shown in Table I. As 97% of stability OOS results were invalidated, the company avoided sending the FDA field alerts that potentially could trigger a batch recall if an OOS result was confirmed. By the way, a different plant had a warning letter in 2019, and guess what? Invalidating OOS test results features in citation 1: “… You frequently closed these OOS investigations without an assignable root cause, and released batches based on passing retest results” (6).

Some companies never learn.

Method Development and Validation

Before we get into the depths of OOS investigations and the history of them, one of the potential causes of OOS results is method variability. As we discussed in an earlier article on analytical procedure lifecycle management (APLM), quality by design in method development is essential to finding and controlling critical parameters (7). A rushed development and validation can often result in an unreliable chromatographic procedure that through inherent variability can generate OOS results.

Sermon over, let’s get back to OOS investigations!

Déjà vu All Over Again!

Not a lot of people know this, but we have been here before with OOS. Nearly 30 years ago, a New Jersey generic pharmaceutical company, Barr Laboratories, had some analytical testing practices that are best described as unconventional. Apart from misplaced records, test data recorded on scrap paper, the release of products not meeting their specifications, inadequate investigation of failed products, and failure to validate test methods, Barr had a QC practice that if a test was out-of-specification, then they conducted two more tests and took the best two of the three to determine batch release. The FDA was less than impressed, and issued warning letters. Barr was less than impressed, and sued the FDA, with the Agency reciprocating by suing Barr (only in America!). The two lawsuits resulted in a single court case presided over by Judge Alfred Wolin, whose knowledge of science could be written on a very small grain of rice. However, his judgment was remarkably prescient, and the ramifications still impact the industry today (8,9).

Key Laboratory Findings of the Wolin Judgment

From the judgment, there is an impartial and reasoned interpretation of the US GMP regulations:

- Any OOS result requires a failure investigation to determine an assignable cause. The extent of the investigation depends on the nature and location of the error (laboratory or production).

- Wolin rejected Barr’s two out of three testing approach as unscientific, and at the same time also rejected the unreasonable FDA request that one OOS result should result in rejection of the whole product batch.

- Good science and judgment are needed for reasonable interpretation of GMPs. Industry practice cannot be relied on as the sole interpretation of GMP: guidance from literature, seminars, textbooks and reference books, and FDA letters to manufacturers are additional sources.

- Any GMP interpretation must be “reasonable and consistent with the spirit and intent of the cGMP regulations.”

- Outliers must not be rejected unless allowed by the United States Pharmacopeia (USP), which resulted in the development and issue of USP <1010> on outlier testing (10).

GMP Regulatory Requirements

What are the GMP regulations that the FDA cited in the case of Barr Laboratories? These are found in 21 CFR 211.192 on production record review:

All drug product production and control records, including those for packaging and labelling, shall be reviewed and approved by the quality control unit to determine compliance with all established, approved written procedures before a batch is released or distributed.

Any unexplained discrepancy …. or the failure of a batch or any of its components to meet any of its specifications shall be thoroughly investigated, whether or not the batch has already been distributed.

The investigation shall extend to other batches of the same drug product and other drug products that may have been associated with the specific failure or discrepancy. A written record of the investigation shall be made and shall include the conclusions and follow-up (11).

Note the first word of the second paragraph: any. For “any,” read “all.” There is not a lucky dip selection of which OOS results you wish to investigate, just as there is no selection by a customer (3). You don’t have a choice. Therefore, if an unexplained discrepancy occurs, and an OOS is one, it MUST be investigated to find an assignable or root cause. If appropriate, the investigation should extend to other batches, or even other products. There also needs to be a formal report of the investigation, together with corrective and preventive action (CAPA) plans that are monitored for effectiveness.

A similar situation is found in EU GMP, where there are two requirements in Chapter 6 Quality Control on trending results and OOS investigations:

6.16. The results obtained should be recorded. Results of parameters identified as a quality attribute or as critical should be trended and checked to make sure that they are consistent with each other.

6.35. Out-of-specification or significant atypical trends should be investigated. Any confirmed out-of-specification result, or significant negative trend, affecting product batches released on the market should be reported to the relevant competent authorities (12).

The EU regulations were updated relatively recently and require laboratories to trend their critical data and to investigate OOS results. Trending of results (both individual measurements and reportable results) provides a means of monitoring the performance of the overall procedure and, by implication, of the chromatographs used to measure any analyte; we will discuss this shortly. If there is a problem with released batches, then regulatory authorities must be informed.

How should industry interpret these regulations? Here we have the FDA to thank with two publications.

FDA Guidance for OOS Investigations

Following the Barr ruling, FDA quickly issued Inspection of Pharmaceutical Quality Control Laboratories in 1993 (13). Nearly half of this guidance was focused on how to inspect OOS investigations; this portion has been replaced by an FDA guidance on the subject that we will be discussing soon. However, before we do, the remainder of this 1993 guidance is still relevant today, as many processes in regulated laboratories have not changed substantially in nearly 30 years, and it contains advice on how FDA will inspect a QC laboratory and, in consequence, how QA can conduct internal audits. This should also be coupled with the FDA’s recent update of Compliance Policy Guide 7346.832 for Pre-Approval Inspections (PAIs), which also outlines the data integrity focus of regulatory submissions (14) and was the subject of a recent “Focus on Quality” article (15).

The second FDA publication was a draft OOS guidance for industry in 1996, with a final version released in 2006 (16). This guidance describes the various stages of an OOS laboratory investigation for chemical results, including the roles and responsibilities of those involved. This document is important as it is the only formal OOS guidance issued by a major regulatory authority. The guidance outlines a three-part, two-phase strategy for investigating an OOS chemical analysis result, as shown in Figure 1.

- Phase 1 is the laboratory investigation which is to determine if there is an assignable cause for the analytical failure. This is conducted under the auspices of Quality Control, and should be split into two parts. First, the analyst checks their work to identify any gross errors that have occurred, and correct them with appropriate documentation. If this does not identify the cause, the analyst and their supervisor initiate the OOS investigation procedure looking in more detail and determining whether the cause is within the subject of the FDA OOS guidance. If a root cause cannot be identified, then the investigation is escalated to Phase 2.

- Phase 2 is under the control of Quality Assurance to coordinate the work of both production and the laboratory; there are two elements here: Phase 2a and 2b.

- In Phase 2a, if no assignable cause is found in the laboratory, then the investigation looks to see if there is a failure in production. If there is no root cause in production, then the investigation moves back to the laboratory.

- In Phase 2b, different hypotheses are formulated to try to identify an assignable cause, and a protocol is generated before any laboratory work is undertaken. Here, resampling can be undertaken if required.

Owing to space, we will only consider Phase 1 laboratory investigations in this article.
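The escalation order described above can be sketched as a small decision function. This is only an illustration of the sequence of stages as summarized in this article, not an implementation of the FDA guidance; the function name, argument names, and returned labels are all hypothetical.

```python
def investigation_stage(gross_error_found: bool,
                        lab_cause_found: bool,
                        production_cause_found: bool) -> str:
    """Return the stage at which an assignable cause is found.

    If no earlier stage finds a cause, the investigation reaches Phase 2b.
    All labels are illustrative shorthand, not regulatory terms.
    """
    if gross_error_found:
        # Phase 1, first part: the analyst checks their own work.
        return "Phase 1: analyst check"
    if lab_cause_found:
        # Phase 1, second part: analyst and supervisor follow the OOS procedure.
        return "Phase 1: laboratory investigation"
    if production_cause_found:
        # Phase 2a: QA coordinates a review of production.
        return "Phase 2a: production review"
    # Phase 2b: hypotheses are tested under a protocol written in advance.
    return "Phase 2b: hypothesis testing under protocol"


# No cause found in the laboratory or in production.
print(investigation_stage(False, False, False))
```

The point of the ordering is that each stage is only reached when the previous one has failed to find a cause; nothing in the sketch allows a jump straight to retesting.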

OOS Definitions

We have been talking about OOS, but we have not defined this and any associated terms, so let us see what definitions we have. Now here is where it gets interesting. You would think that, in an FDA guidance focused on OOS investigations, the term would be defined early in the document. I mean, logic would dictate this, would it not? Not a chance! We must wait until page 10 to find the definition, and then it is found, not in the main body of text, but in a small font footnote! Your tax dollars at work. Not only that, it is totally separated from the discussion about the individual results from an analysis that is found floating in the middle of page 10. Your tax dollars at work, again. There are the following definitions used in the FDA OOS guidance document:

- Reportable Result: The term refers to a final analytical result. This result is appropriately defined in the written approved test method, and derived from one full execution of that method, starting from the sample. It is comparable to the specification to determine pass/fail of a test (16). This is easy to understand; it is a one-for-one comparison of the analytical result with the specification and the outcome is either pass or fail. Maybes are not allowed.

- Individual Result: To reduce variability, two or more aliquots are often analyzed with one or two injections each, and all the results are averaged to calculate the reportable result. It may be appropriate to specify in the test method that the average of these multiple assays is considered one test, and represents one reportable result. In this case, limits on acceptable variability among the individual assay results should be based on the known variability of the method, and should also be specified in the test methodology. A set of assay results not meeting these limits should not be used (16).

- Therefore, the individual results must have their own and larger limits, due to the variance associated with a single determination. This is in addition to the product specification limits for the reportable result discussed above. Note individual results are NOT compared with the product specification.

- Out-of-Specification (OOS) Result: A reportable result outside of specification or acceptance criteria limits. As we are dealing with specifications, OOS results can apply to test of raw materials, starting materials, active pharmaceutical ingredients and finished products, and in-process testing. However, if a system suitability test fails, this will not generate an OOS result, as the whole run would be invalidated; however, there needs to be an investigation into the failure (16).

- Out-of-Trend (OOT) Result: Not an out-of-specification result, but rather a result that does not fit with the expected distribution of results. An alternative definition is a time-dependent result which falls outside a prediction interval or fails a statistical process control criterion (17). This can include a single result outside of acceptance limits for a replicate result used to calculate a reportable result. If investigated, the same rules as for OOS investigations apply. Think not of regulatory burden but good analytical science here. Is it better to investigate and find the reason for an OOT or OOE result, or wait until you have an OOS result that might initiate a batch recall?

- Out of Expectation (OOE) Result: An atypical, aberrant, or anomalous result within a series of results obtained over a short period of time, but one that is still within the specification range.

OOS of Individual Values and Reportable Results

To understand the relationships between the reportable result and individual values, some examples are shown in Figure 2, courtesy of Chris Burgess. The upper and lower specification and individual limits for this procedure are shown as horizontal lines. You’ll see that the individual limits are wider than the specification limits, as the variance of a single value is greater than that of a mean result. There are six examples shown in Figure 2, and, from left to right, we have the following:

- Close individual replicates and mean in the middle of the specification range: an ideal result!

- The individual results are closely spread, and, although one replicate is outside the specification limit, it is inside the individual limit, and therefore a good result.

- The individual values are relatively close, and all are within the individual limits, but the reportable result is out-of-specification.

- One of the individual results is outside of the individual result limit, which means that there is an OOS result although the reportable result is within specification.

Examples 5 and 6 would be OOT or OOE results respectively, but are not OOS. Here, the variance of the individual results is wider than expected, and may indicate that there are problems with the procedure. It would therefore be prudent to investigate the reasons for this rather than ignore them. We will focus on OOS results only here.
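The first four examples can be expressed as a short check. The sketch below assumes that the reportable result is the mean of the replicates and that the procedure defines separate, wider limits for individual values; the function name and all numerical limits are hypothetical and chosen only for illustration.

```python
from statistics import mean


def classify(replicates, spec, individual):
    """Classify one full execution of a procedure.

    replicates: individual values from the analysis
    spec:       (lower, upper) specification limits for the reportable result
    individual: (lower, upper) wider limits applied to each individual value
    """
    reportable = mean(replicates)  # assumption: reportable result = mean
    spec_ok = spec[0] <= reportable <= spec[1]
    individuals_ok = all(individual[0] <= x <= individual[1] for x in replicates)
    verdict = "pass" if spec_ok and individuals_ok else "OOS"
    return reportable, verdict


SPEC = (95.0, 105.0)        # hypothetical specification limits, % of label claim
INDIVIDUAL = (93.0, 107.0)  # hypothetical limits for individual values

print(classify([99.8, 100.1, 100.3], SPEC, INDIVIDUAL))   # example 1
print(classify([104.2, 104.6, 105.4], SPEC, INDIVIDUAL))  # example 2
print(classify([105.2, 105.6, 106.0], SPEC, INDIVIDUAL))  # example 3
print(classify([98.0, 99.0, 107.5], SPEC, INDIVIDUAL))    # example 4
```

Note that individual values are compared only with the individual limits, never with the product specification, which is why the second case passes even though one replicate lies above the upper specification limit.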

Process Capability of an Analytical Procedure

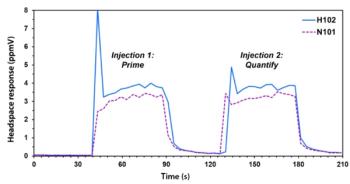

Do you know how well your analytical procedures perform? If not, why not? This information provides you with valuable evidence that you can use in OOS investigations, and it is also a regulatory requirement, as mentioned earlier (12). For chromatographic methods, individual calculated values and results should be plotted over time, with the aim being to show how a specific method performs and its variability. There are two main types of plot that can be used:

- Shewhart plots with upper and lower specification limits and individual results plotted over time, as illustrated in Figure 2. Both the individual values and reportable results need to be plotted; if only the latter values are used, then the true performance can be missed, as the variance is lost when averaging. Over time, this gives the process capability of the procedure.

- Cusum, or cumulative sum, is a control chart that is sensitive to changes in the performance of a method, often before OOS results are generated. When the plot direction alters, this often indicates that a change that influences the procedure has occurred, and the reason for this should be investigated. This may be as subtle as a new batch of a solvent or change of column.
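As a rough illustration of the cusum idea, the sketch below uses the simplest form: a running sum of the deviations of each result from a target value. The function name, the target, and the data are hypothetical; a production control chart would normally add decision limits, which are omitted here.

```python
from itertools import accumulate


def cusum(results, target):
    """Running sum of deviations from the target value."""
    return list(accumulate(x - target for x in results))


# Hypothetical assay results (% of label claim); a small upward shift
# begins at the sixth result, although every value is still in specification.
results = [100.1, 99.9, 100.0, 100.2, 99.8, 100.6, 100.5, 100.7, 100.4]
sums = cusum(results, target=100.0)

for i, s in enumerate(sums, start=1):
    print(f"run {i}: cusum = {s:+.1f}")
```

While the procedure is on target, the sum hovers around zero; once the shift occurs, the sum climbs steadily, and that change of direction is the signal to investigate.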

If your analytical data are similar to Examples 5 and 6 in Figure 2, then you could have a non-robust analytical procedure that reflects poorly on method development and validation (7); either that, or you have poorly trained staff. Prevention of OOS results is better than the investigation of them!

This Never Happens in Your Laboratory…

Even with all the automation and computerization in the world, there is still the human factor to consider. Consider the following situation. The analytical balance is qualified and has been calibrated, the reference standard is within expiry, the weight taken is within limits, and the material is transferred from the weighing vessel to a volumetric flask. One of three things could happen:

- The material is transferred to the flask correctly, and the solution is made up to volume as required. All is well with the world.

- During transfer, some material is dropped outside the flask, but the analyst still dissolves the material and makes the solution up to volume.

- All material is transferred to the flask correctly, but the flask is overfilled past the meniscus.

At this point, only the analyst preparing the reference solution stands between your organization and a data integrity disaster. The analyst is the only person who knows that options 2 and 3 are wrong. What happens next depends on several factors:

- Corporate data integrity policies and training

- The open culture of the laboratory that allows an individual to admit their mistakes

- The honesty of the individual analyst

- Laboratory metrics; for example, Turn Around Time (TAT) targets that can influence the actions of individuals

- The attitude of the supervisor and laboratory management to such errors.

STOP! This is the correct and only action by the analyst. Document the mistake contemporaneously and repeat the work from a suitable point (in this case, repeat the weighing). It is simpler and easier to repeat now than investigate later.

But what actually happens depends on those factors described above. Preparation of reference standard is one area where the actions of an individual analyst can compromise the integrity of data generated for one or more analytical runs. If the mistake is ignored, the possible outcomes could be an out-of-specification result or the release of an under- or over-strength batch. In the subsequent investigation, unless the mistake is mentioned, it may not be possible to have an assignable cause.

What is the FDA’s View of Analyst Mistakes?

Hidden in the Responsibilities of the Analyst section in the FDA’s Guidance for Industry on Investigating OOS Results is the following statement (16):

If errors are obvious, such as the spilling of a sample solution or the incomplete transfer of a sample composite, the analyst should immediately document what happened.

Analysts should not knowingly continue an analysis they expect to invalidate at a later time for an assignable cause (i.e., analyses should not be completed for the sole purpose of seeing what results can be obtained when obvious errors are known).

The only ethical option open to an analyst is to stop the work, document the error, and repeat the work from a suitable point.

Do You Know Your Laboratory OOS Rate?

According to the FDA:

Laboratory error should be relatively rare. Frequent errors suggest a problem that might be due to inadequate training of analysts, poorly maintained or improperly calibrated equipment, or careless work (16).

This brings me to the first of two metrics: Do you know the percentage of OOS results across all tests that are performed in your laboratory? If not, why not?

Remember that there are three main groups of analytical procedure that can be used for release testing:

- Observation (including, but not limited to, appearance, color, and odor), which is relatively simple to perform. If there is an OOS result, it is more likely to be a manufacturing issue than a laboratory one (such as particles in the sample or change in expected color); laboratory errors in analyses of this type will be extremely rare.

- Classical wet chemistry (including, but not limited to, melting point, titration, and loss on drying) involving a variety of analytical techniques often with manual data recording unless automated (autotitration). There is more likelihood of an error but many mistakes, such as transcription or calculation errors, should be identified and corrected during second person review.

- Instrumental analyses (including, but not limited to, identity, assay, potency, and impurity) using spectrometers and chromatographs for example. Here, the procedures can be more complex, and data analysis requires trained analysts.

As you move down this list, the likelihood of an OOS result increases with the complexity of the analytical procedure and the amount of human data interpretation involved. Hence the emphasis on instrumental methods, such as chromatography (1) and spectroscopy (18), in inspections.

SST Failure Does Not Require an OOS Investigation

Under analyst responsibilities, there is an FDA get out of jail free card for chromatographic runs where the SST injections fail to meet their predefined acceptance criteria:

Certain analytical methods have system suitability requirements, and systems not meeting these requirements should not be used. …. in chromatographic systems, reference standard solutions may be injected at intervals throughout chromatographic runs to measure drift, noise, and repeatability.

If reference standard responses indicate that the system is not functioning properly, all of the data collected during the suspect time period should be properly identified and should not be used. The cause of the malfunction should be identified and, if possible, corrected before a decision is made whether to use any data prior to the suspect period (16).

Here’s where technology can come to help. Some CDS applications allow users to define acceptance criteria for SST injections. If one or more SST injections fail these criteria, then the run stops automatically. This saves the analyst from trying to determine whether there are data that could be used from the run because, if the run is stopped before samples are injected, there are no sample data available. If this function is used, it must be validated to show that it works: specified in the user requirements specification (URS) and verified in user acceptance testing (UAT). There will also be corresponding entries in the instrument log book documenting the initial problem and the steps taken to resolve the issue (11,19,20).
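To make the idea concrete, here is a minimal sketch of the kind of check such a function might perform, assuming the SST criterion is the percent relative standard deviation (%RSD) of replicate standard injections. It does not represent any particular CDS; the function names, the 2.0% limit, and the peak areas are hypothetical, and the real criteria come from the analytical procedure.

```python
from statistics import mean, stdev


def percent_rsd(areas):
    """Percent relative standard deviation of replicate peak areas."""
    return 100 * stdev(areas) / mean(areas)


def sst_passes(areas, max_rsd=2.0):
    """True if injection repeatability meets the (hypothetical) limit."""
    return percent_rsd(areas) <= max_rsd


good = [1002, 998, 1005, 1001, 999, 1003]   # hypothetical peak areas
bad = [1002, 950, 1040, 1001, 970, 1030]

for name, areas in (("good", good), ("bad", bad)):
    action = "continue to samples" if sst_passes(areas) else "stop the run"
    print(f"{name}: %RSD = {percent_rsd(areas):.2f} -> {action}")
```

Stopping before any sample is injected means there are no sample data to argue about, only an SST failure to investigate and document.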

What is an OOS Investigation?

An OOS investigation is triggered by an analyst when the reportable result is outside of the specification limits, as shown in Figure 2. The analyst informs their supervisor, and this should begin the laboratory investigation by following the laboratory OOS procedure. The responsibilities of both individuals are presented in Table II, which is copied from the FDA OOS guidance document. The FDA is very specific in listing the responsibilities of both the analyst who performs the analysis and the laboratory supervisor who will be conducting the investigation. The guidance document will be the source for a laboratory SOP that will detail what will be done for a laboratory OOS investigation.

According to the FDA:

To be meaningful, the investigation should be thorough, timely, unbiased, well-documented, and scientifically sound (16).

Note well the criteria for a laboratory investigation:

- Thorough: The whole of the analytical process must be considered for assignable causes of the error, as shown in Figure 3. The investigation needs to go into detail.

- Timely: The investigation should start quickly and be completed as quickly as is compatible with being thorough and well-documented. Investigations that are still open 30, 60, or more days after the original analysis will be subject to more regulatory scrutiny, with suggestions of inadequate staffing of the laboratory.

- Unbiased: Start the investigation with an open mind, rather than believe that all OOS results are the result of analyst error before starting.

- Well-documented: There is a range of interpretations here, from a single page uncontrolled checklist with most possible causes of an OOS, to a flow chart with questions completed as the supervisor and analyst work through the investigation.

- Scientifically sound: Provide a rational explanation for the OOS result that is based on evidence and not supposition. From this must come the root cause to invalidate the OOS result, and any plans to prevent a reoccurrence.

The investigation begins by checking the chromatographer’s knowledge of the analytical procedure, and that the right procedure was used. This is followed by ensuring the sampling was performed correctly, and that the right sample was analyzed, and so on throughout the analysis. Areas to check for potential errors and assignable causes are shown in Figure 4 and Table III, and have been derived from the FDA OOS guidance.

Don’t Do This in Your Laboratory!

The Lupin plant in Nagpur is the source of this example of how not to undertake an OOS laboratory investigation, and this is quoted from the 483 form that was issued in January 2020 (21). Citation 2 is an observation for failing to follow the investigation SOP, but we will look at citation 1, where the details of inadequate laboratory investigations are documented. Some parts of the citation are heavily redacted, so this is a best attempt at reconstructing the investigation that was carried out:

- There was an OOS result from a dissolution test.

- The OOS was hypothesized as being due to an analyst transposing samples from different time points.

- The original sample solutions were remeasured, but the results appeared to be similar to the original.

- A comment was added to the record that is redacted in the 483 that appears to document an analyst comment that some of the solution was spilled, and that this would account for the discrepancy in results. This is a very convenient spillage.

- However, the comment was not made by the analyst who performed the original work. A second analyst was told by his supervisor that the first analyst had said he had made a mistake, and the second analyst documented this, as directed by the supervisor.

- There was no documentation or corroborating evidence provided to support this, or that an interview with the original analyst ever occurred.

- A substitution of a solution was made which, when retested, passed. Well, what a surprise!

- The original test results were invalidated, and the passing results used to release the product.

- QC and QA personnel signed off the investigation, even though they knew of the substitution of the solution and potential manipulation.

- The original analyst was unavailable during the inspection.

- No deviation or CAPA was instigated.

- No definitive root cause of the OOS result was ever determined.

Any wonder that a 483 observation was raised?

FDA Guidance on Quality Metrics

The second metric that is important in OOS investigations is a topic in the FDA Draft Guidance on Quality Metrics (22) that emphasizes the importance of correct OOS investigations. There are three metrics covering manufacturing and quality control, but only one of them is for QC: the invalidated OOS rate, defined as follows:

Invalidated Out-of-Specification (OOS) Rate (IOOSR) as an indicator of the operation of a laboratory. IOOSR = the number of OOS test results for lot release and long-term stability testing invalidated by the covered establishment due to an aberration of the measurement process divided by the total number of lot release and long-term stability OOS test results in the current reporting timeframe (22).

What is important is that the rate covers not only batch release but also stability testing. The rationale for using the invalidated OOS rate can be seen in Table I and the corresponding 483 observations and warning letters (4,5). An aim is that FDA can conduct risk-based inspections, and, if a firm has low regulatory risk, they will be relying on these quality metrics to extend the time between inspections. Woe betide a firm who massages these metrics.
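Both metrics discussed in this article, the laboratory OOS rate and the invalidated OOS rate, are simple ratios. The sketch below expresses them as percentages; the function names and the counts are hypothetical and are not taken from any warning letter or 483 observation.

```python
def oos_rate(oos_results, total_tests):
    """Percentage of all tests performed that gave an OOS result."""
    if total_tests <= 0:
        raise ValueError("total_tests must be positive")
    return 100 * oos_results / total_tests


def invalidated_oos_rate(invalidated, total_oos):
    """IOOSR as a percentage: invalidated OOS results over all OOS results
    (lot release plus long-term stability) in the reporting timeframe."""
    if total_oos <= 0:
        raise ValueError("total_oos must be positive")
    return 100 * invalidated / total_oos


# Hypothetical reporting period: 2400 tests, 12 OOS results, 3 invalidated.
print(oos_rate(12, 2400))           # 0.5
print(invalidated_oos_rate(3, 12))  # 25.0
```

The denominators differ: the first metric is taken over all tests performed, while the second is taken only over the OOS results themselves, so the two numbers answer different questions and both need to be tracked.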

Outsourced Analysis?

If your organization outsources manufacturing and QC analysis, how should you monitor the work? From a QC perspective, the contract facility should notify your organization of any OOS result. You must have oversight of any laboratory investigation on your products or analysis to ensure that the FDA criteria for an investigation are met as outlined above. In addition, if FDA are interested in a metric of the percentage of invalidated OOS results, so are you. You should have these figures for your work, but also across the whole of the outsourced laboratory. Therefore, you should review all OOS investigations on your products, either via video conference or on site during any supplier audits. In the words of that great data integrity expert, Ronald Reagan, trust but verify.

Summary

Scientifically sound OOS laboratory investigations are an essential part of ensuring data integrity. Outlined here are the key requirements for an OOS investigation to find an assignable or root cause so that a result can be invalidated. Note that the FDA and other regulatory authorities take a very keen interest in invalidated OOS results, especially where analyst error is cited continually as the cause of the OOS. Your laboratory should know the OOS rate as well as the percentage of OOS results invalidated.

Acknowledgment

I would like to thank Chris Burgess for permission to use Figure 2 in this article and for comments made in preparation of this article.

References

- R.D. McDowall, Data Integrity and Data Governance: Practical Implementation in Regulated Laboratories. (Royal Society of Chemistry, Cambridge, United Kingdom, 2019).

- FDA Warning Letter Tismore Health and Wellness Pty Limited (Food and Drug Administration, Silver Spring, Maryland, 2019).

- FDA Warning Letter Shriram Institute for Industrial Research (Food and Drug Administration, Silver Spring, Maryland, 2020).

- FDA Warning Letter Lupin Ltd. (Food and Drug Administration, Silver Spring, Maryland, 2017).

- FDA 483 Observations: Lupin Ltd. (Food and Drug Administration, Silver Spring, Maryland, 2017).

- FDA Warning Letter Lupin Ltd. (Food and Drug Administration, Silver Spring, Maryland, 2019).

- R.D. McDowall, LCGC North Am. 38(4), 233–240 (2020).

- R.J. Davis, Judge Wolin’s Interpretation of Current Good Manufacturing Practice Issues Contained in the Court’s Ruling United States vs. Barr Laboratories in Development and Validation of Analytical Methods, C.L. Riley and T.W. Rosanske, Editors (Pergamon Press, Oxford, United Kingdom, 1996), p. 252.

- Barr Laboratories: “Court Decision Strengthens FDA’s Regulatory Power” (1993). Available from: https://www.fda.gov/Drugs/DevelopmentApprovalProcess/Manufacturing/ucm212214.htm.

- USP General Chapter <1010> Outlier Testing (United States Pharmacopoeia Convention Inc., Rockville, Maryland, 2012).

- 21 CFR 211 Current Good Manufacturing Practice for Finished Pharmaceutical Products (Food and Drug Administration, Silver Spring, Maryland, 2008).

- EudraLex - Volume 4 Good Manufacturing Practice (GMP) Guidelines, Chapter 6 Quality Control (European Commission, Brussels, Belgium, 2014).

- Inspection of Pharmaceutical Quality Control Laboratories (Food and Drug Administration, Rockville, Maryland, 1993).

- FDA Compliance Program Guide CPG 7346.832 Pre-Approval Inspections (Food and Drug Administration, Silver Spring, Maryland, 2019).

- R.D. McDowall, Spectroscopy 34(12), 14–19 (2019).

- FDA Guidance for Industry Out-of-specification Results (Food and Drug Administration, Silver Spring, Maryland, 2006).

- C. Burgess, Personal Communication.

- P.A. Smith and R.D. McDowall, Spectroscopy 34(9), 22–28 (2019).

- EudraLex - Volume 4 Good Manufacturing Practice (GMP) Guidelines, Chapter 4 Documentation (European Commission, Brussels, Belgium, 2011).

- R.D. McDowall, Spectroscopy 32(12), 8–12 (2017).

- FDA 483 Observations Lupin Ltd. (Food and Drug Administration, Silver Spring, Maryland, 2019).

- FDA Guidance for Industry Submission of Quality Metrics Data, Revision 1 (Food and Drug Administration, Rockville, Maryland, 2016).

R.D. McDowall is the director of R.D. McDowall Limited in the UK. Direct correspondence to:
