Profiles in Practice Series: The High-Speed State of Information and Data Management By Michael P. Balogh

LCGC North America

Columnist Michael Balogh explores the topics of informatics and data management with this month's featured scientists.

Informatics and data management was an obvious and intuitive topic to include in last year's Conference on Small Molecule Science (www.CoSMoScience.org). Indeed, the favorable response of attendees made it clear that the topic needs to be included, in its various forms, each year.

Todd Neville is a Senior Technical Solutions Scientist at IBM Healthcare and Life Sciences (White Plains, New York) and a featured contributor to this month's column. Neville participated in one of the conference workshops where, he relates, a colleague's comment provided proof positive of data management's central role in industry. Remarking on changing industry trends, the colleague said that until fairly recently he would have weighed a job candidate's training and experience in chemistry as the most important factor, but that now the foremost consideration is the candidate's IT expertise.

This Month's Featured Scientists

In his opening summary of a report, our other featured contributor, Michael Elliott of Atrium Research (Wilton, Connecticut), characterizes a related dilemma in scientific data management. He writes that, "During the period from 1998 to 2002, electronic records generated by laboratory instrumentation and techniques grew at an annual rate of 27% per year. During this same time period, the number of graduates with science degrees increased only by 3% per year" (1).
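The gap between those two rates compounds quickly. As a rough illustration, the short Python sketch below computes each growth multiple. It assumes that both rates held constant and compounded annually over the four intervals from 1998 to 2002, which the quoted figures do not state explicitly. The function name `compound_growth` is purely illustrative.

```python
def compound_growth(annual_rate: float, years: int) -> float:
    """Growth multiple after `years` of constant, annually compounded growth."""
    return (1.0 + annual_rate) ** years


years = 2002 - 1998  # four annual intervals
records = compound_growth(0.27, years)    # electronic records: 27% per year
graduates = compound_growth(0.03, years)  # science graduates: 3% per year

print(f"records grew x{records:.2f}, graduates grew x{graduates:.2f}")
print(f"records per graduate grew x{records / graduates:.2f}")
```

Under those assumptions, electronic records grow about 2.6-fold while graduates grow about 1.13-fold. The volume of electronic records per new graduate therefore more than doubles in four years.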

The demands of data management are fast outstripping our ability to meet them. High-resolution, mass-accurate data can issue from a modern mass spectrometer at a prodigious 1 GB/h. Moreover, we like these data. As discussed in an earlier column (2), they are generated not just by life science investigators but, increasingly, by industry for high-volume processes such as characterizing the presence of metabolites and their biotransformations. So enormous data files are here to stay. But the effects of increased data demand coupled with the torrential data outflow of our instruments can overwhelm even the most IT-savvy. After 180 days of operation, five mass spectrometers, each producing 24 GB of data per day, will present you with a need to store, retrieve, sort, and otherwise make sense of 21.6 terabytes (TB).
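The 21.6 TB figure follows from simple multiplication. The sketch below reproduces it, assuming decimal units (1 TB = 1000 GB) and round-the-clock acquisition at the 1 GB/h rate quoted above. `storage_needed_tb` is a hypothetical helper name.

```python
GB_PER_TB = 1000  # decimal units, as used for the 21.6 TB figure


def storage_needed_tb(instruments: int, gb_per_hour: float,
                      hours_per_day: float, days: int) -> float:
    """Total raw data volume in TB for a fleet of identical instruments."""
    gb_per_day = gb_per_hour * hours_per_day    # 1 GB/h x 24 h = 24 GB/day
    total_gb = instruments * gb_per_day * days  # whole fleet, whole period
    return total_gb / GB_PER_TB


print(storage_needed_tb(instruments=5, gb_per_hour=1.0, hours_per_day=24, days=180))
# prints 21.6
```

The per-day figure could instead be read in binary units (1 TiB = 1024 GiB). In that case the same 21,600 units come to about 21.1 TiB, a small difference that still matters when sizing storage.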

But before we consider the future consequence of data overload, we must first address a more immediate problem. That problem, as Neville tells it, is one of classic labor market economics: worker dislocation. Many of his clients tell him they reflexively resort to CD-based or DVD-based operations when they face high data volumes. In addition to being impractical, such a strategy effectively turns a highly qualified Ph.D. chemist into a librarian, resulting in a lamentable loss of scientific expertise. So as Neville avers, the battlefronts of the future are what happens after the data are collected; they are the necessities of integration, communication, and security. IBM offers consulting services that evaluate its clients' requirements vis-à-vis software applications and the ability of developers to satisfy those requirements. Thus, IBM can define storage and computational solutions tailored to its clients' needs.

Neville spends much time with his clients discussing their needs before he even attempts to offer solutions:

We usually step in after the client has adopted MassLynx or Xcalibur to manipulate data. IBM can help define the requirements from the perspective of a scientist seeking a research goal and design solutions based on computing, storing, communicating, managing, archiving, and regulatory compliance. Typically, a great deal of my time is spent with the researcher. I define the lifecycle of the data generated so that I can create a system to manage it (that is, collect, compute, store, and archive it) most efficiently. Each of these elements is a separate chapter in the design of a solution. For example, computing entails 64-bit versus 32-bit chip design, parallel versus SMP applications, memory bandwidths, rate of data generation, user skill sets, file systems, and Ethernet media versus InfiniBand versus Fibre Channel connections. Storage and archival involve considering the data lifecycle, hierarchical storage management versus current disk prices, logical and physical migration strategies, tape media, and various disk media [such as S-ATA, SCSI, and so forth].

A number of Elliott's observations from his report "Managing Scientific Data" (February 2005) are represented here. I highly recommend the report to those interested in informatics and regret that I can only highlight it in this column. Aside from drawing its conclusions from extensive data, the report discusses trends toward developing data standards, regulation and compliance, and integration and security issues. To his credit, Elliott takes the time to couch his commentary in an eminently readable, plain-English style, and includes definitions and tutorials.

His report describes the movement from paper to electronic laboratory notebooks (ELN) and clinical electronic data capture (EDC) systems as contributing significantly to the recent 27% annual growth in data. Usefully, the report defines the often-confused terms "data management," "information management," "knowledge management," and "content management." Data management is perhaps one of the most nonspecific terms in information technology. It describes everything from data analysis systems to laboratory information management systems (LIMS). More narrowly, data management refers to the processes and systems involved in acquiring, storing, organizing, searching, and retrieving data; it takes data from the "disparate and unorganized" to the "logical and organized." Information management consists of the processes and systems involved in the use, analysis, and exploitation of data. Knowledge management refers to the process of sharing and distributing information assets throughout an organization. Finally, content management describes the process of integrating asset management companywide.

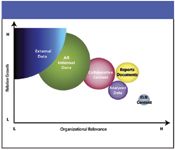

Figure 1: In drug discovery, data is growing faster than it can be turned into information. (Image courtesy of Atrium Research.)

The first question in any data scenario must address what we intend to do with the data we collect. Figure 1 depicts a simple scenario, an initial step toward understanding the process. E-mail imparts its message and thereafter serves little further purpose. On-line data, by contrast, grow in value over time as biological, pharmaceutical, and physicochemical measurements continue to amass within a data file. But this increase in value comes at the significant cost of ensuring the data's accessibility. Given the burgeoning size of the data files, and the length of time over which they must be accessed, a solution might include some form of hierarchical storage management. In such a scheme, a small percentage of the data are immediately accessible, or "active," while the remainder, in successive stages, are in process or earmarked for long-term archiving.
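Hierarchical storage management is typically driven by policy rules. The Python sketch below shows one hypothetical policy that assigns each data file to the active, in-process, or archive tier according to the number of days since it was last accessed. The 30-day and 180-day thresholds, the tier labels, and the file names are illustrative assumptions only. They are not recommendations from the report or from any vendor's product.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum


class Tier(Enum):
    ACTIVE = "active (fast disk)"
    IN_PROCESS = "in-process (nearline)"
    ARCHIVE = "archive (long-term media)"


# Hypothetical policy thresholds, in days since last access; real HSM
# policies are site-specific.
ACTIVE_DAYS = 30
IN_PROCESS_DAYS = 180


@dataclass
class DataFile:
    name: str
    last_accessed: date


def assign_tier(f: DataFile, today: date) -> Tier:
    """Place a file in a storage tier according to how recently it was used."""
    age_days = (today - f.last_accessed).days
    if age_days <= ACTIVE_DAYS:
        return Tier.ACTIVE
    if age_days <= IN_PROCESS_DAYS:
        return Tier.IN_PROCESS
    return Tier.ARCHIVE


today = date(2005, 6, 1)
files = [
    DataFile("run_0530.raw", today - timedelta(days=2)),
    DataFile("run_0301.raw", today - timedelta(days=92)),
    DataFile("run_0815.raw", today - timedelta(days=290)),
]
for f in files:
    print(f"{f.name:14} -> {assign_tier(f, today).value}")
```

A real policy might also weigh file size, project status, or regulatory retention requirements. Age alone is used here only to keep the example short.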

Often, how the data are made available becomes a hurdle in itself. The complexity of files associated with a single injection varies widely: Bruker's (Billerica, Massachusetts) NMR software, Agilent Technologies' (Wilmington, Delaware) ChemStation, and Waters' MassLynx produce numerous files, one per injection. Close to the reverse is true of some other applications, such as Applied Biosystems' (Foster City, California) Analyst, in which a single file can include repeated injections, making attempts to unify the upstream output difficult if not impossible. Therefore, many users carefully evaluate a device based upon the relative accessibility of its control features and data output. As I reported in the April 2005 column, accessibility was of paramount concern in the high-speed synthesis operation designed by Neurogen (Branford, Connecticut). After considering all competitive applications, Neurogen adopted MassLynx (Waters Corporation, Milford, Massachusetts) software because of its inherent accessibility and high degree of interface compatibility with the company's Web-tracking and data management system (3).
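The unification problem can be pictured as building a common per-injection index over both kinds of layout. The sketch below is hypothetical. It models the two layouts as plain Python dictionaries rather than reading any vendor's actual file formats, and the names `Injection`, `from_per_injection`, and `from_multi_injection` are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Injection:
    sample_id: str
    number: int   # injection number within the sample
    source: str   # pointer back to where the raw data live


def from_per_injection(folders: dict[str, list[str]]) -> list[Injection]:
    """Hypothetical layout A: one folder of files per injection, named '<sample>_inj<n>'."""
    records = []
    for folder in folders:
        sample, _, n = folder.rpartition("_inj")
        records.append(Injection(sample, int(n), source=folder))
    return records


def from_multi_injection(files: dict[str, tuple[str, int]]) -> list[Injection]:
    """Hypothetical layout B: one file holding repeated injections of a sample,
    described as file name -> (sample ID, number of injections)."""
    records = []
    for filename, (sample, count) in files.items():
        for n in range(1, count + 1):
            records.append(Injection(sample, n, source=f"{filename}#{n}"))
    return records


layout_a = {"S1_inj1": ["data.bin", "method.txt"], "S1_inj2": ["data.bin", "method.txt"]}
layout_b = {"S2_batch.dat": ("S2", 3)}

# One sorted index with a single record per injection, whatever the layout.
index = sorted(from_per_injection(layout_a) + from_multi_injection(layout_b),
               key=lambda r: (r.sample_id, r.number))
for record in index:
    print(record)
```

Each source layout needs its own adapter, but downstream tools only ever see `Injection` records. In a real system, the hard part is writing adapters that can actually read each vendor's files.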

When a device is integral to the operation, but only marginally compatible with the data-handling platform, a bit of surgery is indicated. Sierra Analytics (Modesto, California) performs such surgery. David Stranz, Sierra's co-founder and president, has served as the bioinformatics interest group organizer for the American Society for Mass Spectrometry (ASMS, Santa Fe, New Mexico) for the past three years. He recently joined the CoSMoS advisory board. From my discussions with him at the conference last August, it's clear that a number of factors must be examined in any fruitful discussion of data management and informatics in general.

Figure 2: Data lifecycle management by data classification. (Image courtesy of Atrium Research.)

The lack of an industry standard for data exchange impels Sierra's hybridization and customization service. Standards can be "de jure," in which case oversight and change are decreed by a standing professional organization such as the American Society for Testing and Materials (ASTM, West Conshohocken, Pennsylvania). Or they can be "de facto," in which case they have been adopted almost universally (as with Microsoft Windows). Finally, standards can be "mandated" by regulatory decree. Though the reasons behind standardization can vary, in every case standardization implies cooperation between and among competitor companies. Unfortunately, in our industry such cooperation has proved an elusive goal, and it's unlikely we'll see groundbreaking standardization like that which spawned the Musical Instrument Digital Interface (MIDI) in the 1980s. Nevertheless, recent attempts to unify the variety of data outputs and make them amenable to common analysis have prompted a body of scientists to develop an open, generic Extensible Markup Language (XML) format specifically for the various MS outputs. Called mzXML, this effort is intended exclusively for proteomic work (4). Meanwhile, security continues to be a leading concern when using XML-based platforms, and the World Wide Web Consortium (W3C) has undertaken initiatives in encryption and digital signatures (1).
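The sketch below decodes the `peaks` element of a scan to show what an mzXML file carries. It assumes the mzXML conventions as we understand them: peak lists stored as base64-encoded, big-endian ("network order") floats, with m/z and intensity values alternating. It also uses a deliberately simplified fragment. Real mzXML files declare an XML namespace and carry many more attributes, so the element lookups would need adjusting for them. The helper names are illustrative.

```python
import base64
import struct
import xml.etree.ElementTree as ET


def encode_peaks(pairs, precision=32):
    """Flatten (m/z, intensity) pairs and base64-encode them as big-endian floats."""
    code = "f" if precision == 32 else "d"  # 32-bit or 64-bit IEEE floats
    flat = [value for pair in pairs for value in pair]
    raw = struct.pack(f">{len(flat)}{code}", *flat)  # '>' = network byte order
    return base64.b64encode(raw).decode("ascii")


def decode_peaks(peaks):
    """Decode a <peaks> element back into a list of (m/z, intensity) tuples."""
    code = "f" if peaks.get("precision", "32") == "32" else "d"
    raw = base64.b64decode(peaks.text.strip())
    n = len(raw) // struct.calcsize(">" + code)
    values = struct.unpack(f">{n}{code}", raw)
    return list(zip(values[0::2], values[1::2]))  # m/z-int pair order


sample = [(100.0, 1500.0), (250.5, 320.0)]
doc = f"""<mzXML><msRun scanCount="1">
  <scan num="1" msLevel="1" peaksCount="2">
    <peaks precision="32" byteOrder="network" pairOrder="m/z-int">{encode_peaks(sample)}</peaks>
  </scan>
</msRun></mzXML>"""

root = ET.fromstring(doc)
for scan in root.iter("scan"):
    print(scan.get("num"), decode_peaks(scan.find("peaks")))
```

The values in `sample` are exactly representable as 32-bit floats, so they survive the round trip unchanged. Arbitrary measured m/z values stored at 32-bit precision would be rounded slightly.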

Regulatory and compliance issues are important, even in areas traditionally outside of regulatory control. As this column reported in 2004 (5), recent years have seen a spirited initiative by a composite industry group to encourage the FDA to embrace risk-based practice for validation rather than the layered, prescribed regulation currently in place (6). The Atrium Research management report includes an extensive review of regulatory requirements, as does a comprehensive IBM Redbook publication, "Installation Qualification of IBM Systems and Storage for FDA Regulated Companies" (www.ibm.com/redbooks) (7), which provides various forms and a discussion of requirements from an industry perspective. Because computer validation and data storage apply to all parts of the regulated industry, a current reference work by Robert McDowall (8) should also be of interest, especially to those using the Empower and Millennium software platforms.

In recent years, providers of scientific instrumentation and services have focused on informatics, both in proteomics and small-molecule practice. Mergers and acquisitions, which combine the strengths of the companies they involve, are paving the way for major changes. Some of the pioneers have departed the scene or metamorphosed into different entities offering different goods and services, an effect similar to that displayed when many MS manufacturers merged in the 1990s (9). The Elliott report compiles a market space overview of the current companies based upon their ability to operate in the scientific data management arena. Predictably, major names such as Waters rank prominently when product performance is weighed against ability to execute in the scientific market space. But what might surprise you is the positioning of IBM alongside such companies. Yet the explanation is straightforward. Like the major companies in our industry, IBM has answered the challenge of efficiently managing vast and ever-increasing amounts of data. To this end, aside from addressing client needs through its consulting service, it has developed DiscoveryLink, a powerful integrated data application.

Thermo Electron Corporation (Waltham, Massachusetts) is another example of a well-established manufacturer made stronger through its mergers and acquisitions. A long-time maker of analytical instruments, Thermo began producing mass spectrometers when it acquired Finnigan. The company further evolved when it acquired Innaphase, which already had established relationships with some of Thermo's competitors. This created what might be described in marketing parlance as a homogeneous landscape. Thus, Thermo has maintained a visible, if not a leadership, position despite the demise of its earlier attempt at comprehensive informatics, the now-defunct eRecordManager (eRM) data management product. It has done so through relationships and purchases such as Galactic (spectroscopy software development) and the development of Sequest, a leading life science library search engine.

Other industry leaders have likewise pursued comprehensive capabilities. For example, after years of developing its Millennium and Empower applications, Waters adopted the Micromass-developed MassLynx data system. Waters then further enhanced its market position by acquiring two informatics providers: Creon and NuGenesis. Similarly, Agilent entered into a 2004 partnership with Scientific Software Incorporated (SSI). Agilent relabeled the SSI CyberLAB products under the name Cerity ECM (CECM) and so divided the market landscape between itself and SSI, which continues to sell the product, as SSI ECMS, for general, nonscientific content management. Finally, having acquired Documentum in 2003, EMC enjoys a commanding position in enterprise-level document management, primarily in the life sciences.

The world leader in data management services, IBM occupies a unique market position. Its DiscoveryLink software can integrate with numerous data management systems, and IBM can develop middleware "wrappers" to suit individual needs. Unfortunately, the impressive power of DiscoveryLink has gone unnoticed by some, a consequence of competition with Oracle.

The consultancy concept addresses the needs of small-molecule scientists and pharmaceutical manufacturers, in addition to those pursuing life science endeavors. To satisfy data demands that increase exponentially, while acknowledging the disparity between current platform capabilities and data management needs, major corporations have invested in complementary technologies. The next few years promise to be one of the more interesting periods in recent analytical science. The pent-up demand for improved informatics will continue to ignite interest and creativity in our industry. The far-reaching changes it brings will be rivaled only, perhaps, by the early 1990s commercial development of atmospheric ionization and LC–MS itself.

References

(1) M. Elliott, Managing Scientific Data, The Data Lifecycle, 1st ed. (Atrium Research & Consulting, Wilton, Connecticut, 2005).

(2) M.P. Balogh, LCGC 23(2), 136–141 (2005).

(3) M.P. Balogh, LCGC 23(4), 376–383 (2005).

(4) P. Pedrioli, J. Eng, R. Hubley, M. Vogelzang, E. Deutsch, B. Raught, B. Pratt, E. Nilsson, R. Angeletti, R. Apweiler, K. Cheung, C. Costello, H. Hermjakob, S. Huang, R. Julian Jr., E. Kapp, M. McComb, S. Oliver, G. Omenn, N. Paton, R. Simpson, R. Smith, C. Taylor, W. Zhu, and R. Aebersold, Nat. Biotechnol. 22, 1459–1466 (2004).

(5) M.P. Balogh, LCGC 22(9), 890–895 (2004).

(6) S. Bansal, T. Layloff, E. Bush, M. Hamilton, E. Hankinson, J. Landry, S. Lowes, N. Nasr, P. St. Jean, and V. Shah, AAPS PharmSciTech 5(1) (2004).

(7) J. Bradburn, W. Drury, and M. Steele, IBM Redbook, 1st ed. (IBM, White Plains, New York, 2003).

(8) R.D. McDowall, RSC Chromatography Monographs (2005).

(9) M.P. Balogh, LCGC 16(2), 135–144 (1998).

Michael P. Balogh

"MS - The Practical Art" Editor Michael P. Balogh is principal scientist, LC–MS technology development, at Waters Corp. (Milford, Massachusetts); an adjunct professor and visiting scientist at Roger Williams University (Bristol, Rhode Island); and a member of LCGC's editorial advisory board.
