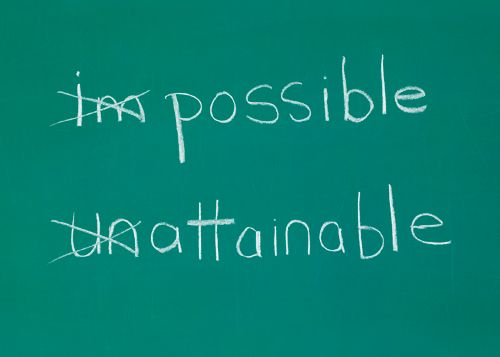

Are there any Holy Grails left in Chromatography?

Incognito’s search for the Holy Grail(s) of chromatography continues.

Photo Credit: JulNichols/Getty Images

Well, if my recent trip to the Analytica exhibition in Munich, Germany, is anything to go by, the answer is most certainly yes!

I attended to seek out solutions to some instrument and application problems, but as there were so many “solution providers” in attendance, I thought I’d push the envelope and see how close we are to solving some of the perennial problems that have dogged my analysis (and my career!) to this point. The number of times I was told “Ah well, that’s the Holy Grail” was beyond coincidental and was the inspiration for writing this piece, just in case anyone else in the analytical community cares to join us on these Crusades.

Can I please have a software package that relates analyte structure to column chemistry? Not just the type of stationary phase (C18, cyano, etc.) but one that also considers specific selectivity descriptors related to bonding density, the nature of the underlying silica, endcapping, or post-bonding treatments, as proposed by Tanaka or by Dolan et al. within the hydrophobic subtraction model (1,2). Interactions should include all primary selectivity determinants (typically thought to be hydrophobicity, shape, acidity, basicity, and ion-exchange capacity of the phase), but I’d like to see something on the pi-pi interactions as well as some formal dipole-dipole measurement. Unless, of course, none of this really matters and the predictions can be made in an entirely different way using models (I’ve seen some that use neural networks, for example) that I’m not yet familiar with. Like I said, this is a Crusade. The models need to take analyte physicochemical properties, calculated automatically (preferably from structures or SMILES strings, for example), relate them directly to column chemistry, and suggest a column or columns for the separation, citing the dominant interactions that governed this choice. The analyte properties used for column selection will vary, but the obvious things such as log P (or log D), polarity, the pKa of polar and ionogenic functional groups, and hydrodynamic volume (shape) should be considered, as well as any other parameters of which I (we) are currently blissfully ignorant. Of course, the column database must be absolutely up to date and all manufacturers must be represented. It would also be nice if hydrophilic interaction chromatography (HILIC) or mixed-mode columns, where I suspect the modelling may become more complex, were also included. The Holy Grail described here is a reliable method of column selection that can improve our method development speed and efficiency. If you have such an application linked to a comprehensive database, then I’d be more than happy to hear from you!
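To make the wish a little more concrete, here is a minimal sketch of how such a tool might score columns with the hydrophobic subtraction model, which is commonly written as log α = η′H − σ′S* + β′A + α′B + κ′C, where α is retention relative to ethylbenzene. Everything in it is an assumption made for illustration: the column names, the column parameters, the solute coefficients, and the ranking rule (the smallest predicted gap in log α between neighbouring analytes) are hypothetical and are not taken from any real database or software package.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    """Column parameters of the hydrophobic subtraction model."""
    name: str
    H: float  # hydrophobicity
    S: float  # steric resistance (S*)
    A: float  # hydrogen-bond acidity
    B: float  # hydrogen-bond basicity
    C: float  # cation-exchange capacity


@dataclass(frozen=True)
class Solute:
    """Solute coefficients that pair with the column parameters."""
    name: str
    eta: float    # pairs with H
    sigma: float  # pairs with S*
    beta: float   # pairs with A
    alpha: float  # pairs with B
    kappa: float  # pairs with C


def log_alpha(solute, column):
    """Predicted log(k / k_ethylbenzene) for one solute on one column."""
    return (solute.eta * column.H
            - solute.sigma * column.S
            + solute.beta * column.A
            + solute.alpha * column.B
            + solute.kappa * column.C)


def rank_columns(solutes, columns):
    """Rank columns by the smallest gap between neighbouring log-alpha values.

    A larger smallest gap suggests a better chance of resolving the worst
    analyte pair. This ranking rule is an illustrative assumption and needs
    at least two solutes.
    """
    ranked = []
    for column in columns:
        values = sorted(log_alpha(s, column) for s in solutes)
        min_gap = min(hi - lo for lo, hi in zip(values, values[1:]))
        ranked.append((column.name, min_gap))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# HYPOTHETICAL columns and solutes; the numbers are invented for the sketch.
COLUMNS = [
    Column("C18-A", H=1.0, S=0.0, A=0.0, B=0.0, C=0.0),
    Column("Polar-B", H=0.7, S=0.0, A=0.1, B=0.0, C=0.5),
]
SOLUTES = [
    Solute("neutral", eta=-0.5, sigma=0.0, beta=0.0, alpha=0.0, kappa=0.0),
    Solute("base", eta=-0.5, sigma=0.0, beta=0.0, alpha=0.0, kappa=0.4),
]

for name, gap in rank_columns(SOLUTES, COLUMNS):
    print(f"{name}: smallest predicted log-alpha gap = {gap:.2f}")
```

The hard part of the Grail is not this arithmetic but everything feeding it: deriving trustworthy solute coefficients from a structure alone, and keeping the column parameters current across all manufacturers.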

I’d like comprehensive, independently collated spectral libraries for liquid chromatography–mass spectrometry (LC–MS). OK, you can stop shouting at the screen. I realize that this is the Lord Voldemort of Holy Grails (see any Wiki on the Harry Potter book series). The Grail whose name cannot be spoken, which is so far beyond our reach that even the mere mention of trying to achieve it is ridiculous. The number of variables associated with the atmospheric pressure ionization (API) technique is too large to even contemplate standardization. The nature of the eluent system, the capillary voltage, nebulizing gas flow, drying gas temperature and flow rate, and the nature of the interface design all add variability to the efficiency of gas-phase analyte ion production. In any case, the ionization produced tends to be “soft” and therefore produces fewer fragments useful for structural elucidation. It is possible, using up-front collision-induced dissociation (CID) with single analyzer instruments, or using a collision cell for MS–MS equipment, to isolate a suspected molecular or pseudomolecular ion or its adduct(s) and then induce fragmentation to produce more meaningful spectra, but differences in collision cell design, collision gas, applied voltages, and gas pressure mean that there are just too many variables to control to produce meaningful, publicly available libraries. These are the arguments that I often hear for not producing libraries. However, in the modern era, as we hurtle towards the generic in pretty much everything that we do, could we not standardize these variables enough to be able to make some cross-platform comparisons, at least for different application areas? I’m yet to be convinced that we couldn’t develop a widely applicable library for pesticide analysis, plastics and additives, metabolites, or whatever application area, by fixing conditions enough to be able to draw comparisons. Look at how many methods in analytical science now use 0.1% TFA or 0.1% formic acid as the mobile phase. Fixing some eluent chemistry, interface, and collision cell parameters could generate spectra that are comparable between instrument platforms, right? Or has the advent of accurate mass and MS–MS instrumentation in many laboratories negated any requirement for simple compound libraries? I think not, especially if you are on a budget.
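If conditions could be fixed well enough, the comparison step itself is simple. The sketch below shows one common way to score a query spectrum against a library entry: bin the peaks by m/z and take the cosine of the angle between the two intensity vectors. It is an illustration under assumptions, not a description of any vendor’s search algorithm; the function names, the 1 m/z unit bin width, and the peak lists are hypothetical.

```python
import math
from collections import defaultdict


def bin_spectrum(peaks, bin_width=1.0):
    """Sum intensities of (m/z, intensity) peaks that fall in the same bin."""
    binned = defaultdict(float)
    for mz, intensity in peaks:
        binned[round(mz / bin_width)] += intensity
    return dict(binned)


def cosine_score(query, reference, bin_width=1.0):
    """Cosine similarity of two binned spectra.

    Returns 0.0 when either spectrum is empty or no bins are shared, and
    approaches 1.0 as the relative intensity patterns converge.
    """
    q = bin_spectrum(query, bin_width)
    r = bin_spectrum(reference, bin_width)
    dot = sum(q[k] * r[k] for k in q.keys() & r.keys())
    norm_q = math.sqrt(sum(v * v for v in q.values()))
    norm_r = math.sqrt(sum(v * v for v in r.values()))
    if norm_q == 0.0 or norm_r == 0.0:
        return 0.0
    return dot / (norm_q * norm_r)


# HYPOTHETICAL peak lists (m/z, relative intensity), invented for the sketch.
REFERENCE = [(121.05, 100.0), (149.02, 35.0), (167.03, 10.0)]
QUERY = [(121.06, 80.0), (149.03, 40.0), (195.10, 5.0)]

print(f"match score: {cosine_score(QUERY, REFERENCE):.3f}")
```

Because the score depends only on relative intensities, it tolerates differences in absolute response between instruments, but not the changes in fragmentation pattern that the variables listed above introduce, which is exactly why fixing those conditions matters.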

Just while we are on the subject of mass spectrometry, I’d also like a reliable means of “cleaning” and correlating MS data from a variety of techniques. I’d like to be able to take spectra from various sources (electron ionization [EI] and chemical ionization [CI] coupled to gas chromatography [GC]–MS, and electrospray ionization [ESI] and atmospheric pressure chemical ionization [APCI] LC–MS) and correlate the data to look for commonality and differences in the gas phase and condensed phase spectra (and hence the reconstructed chromatograms) generated by the various means of ionization. Which of the chromatographic peaks are related? Which are unique? Which do we see in only the condensed or gas phase? Can the combined MS information allow us to identify more analyte species or characterize target analytes more fully? Of course, the answer to this last question is yes; however, the ability to do this in a more automated fashion doesn’t exist. I also suspect that the key to finding this particular Holy Grail may lie in being able to generate reliable and reproducible library spectra from our LC–MS data.

I posed the following question several times at Analytica and have yet to receive a satisfactory response: “If we had reliable deconvolution algorithms, why would we need 2D techniques?” Of course, for those of us without mass spectrometers, the answer is obvious. But if one were able to invest in a simple mass spectrometer with good deconvolution, rather than the complexity of 2D systems with their large and sometimes impenetrable data sets, would that be more appealing to a wider audience? The ion suppression and enhancement effects in API LC–MS, and the quantitative and sometimes qualitative variability that these can produce, are often mentioned as the reason not to explore LC–MS deconvolution further. Can we not then add a marker ion or ions to help to normalize the instrument response for deconvolution purposes? It strikes me that we are not at the end of the road with this particular debate and that a more concerted drive towards better deconvolution could bring widespread benefits.

My final Crusade this year was for a high performance liquid chromatography (HPLC) system that truly recognizes the need to drive down system volumes. I believe it is widely accepted that modern, very highly efficient particle morphologies can only be fully exploited when we further reduce system volume. I also believe that this will mean using systems with no interconnecting tubing or fittings, where the modules are directly connected. With “light pipe” type flow cells, I believe we have very low dispersion but high sensitivity detection, yet we have not solved the other parts of the system, especially the connections between the column and the injector or the detector. Whilst there are some systems that are heading in the right direction, I’m not seeing anything that moves the needle enough in this area to truly exploit, for example, the promise of sub-1-µm particles.

So there we have my current Holy Grails. Of course I realize that my motivation lies in solving analytical problems, and that there has to be enough of a prize for vendors or researchers to undertake such work; whilst these prizes are significant for me, they may not be lucrative enough for anyone to set out on any of these Crusades. I’d love to hear about your own Holy Grails or suggestions of products that may help with those listed above; I will publicize these in a future column.

In his excellent mystery novel The Curious Incident of the Dog in the Night-Time, which features a 15-year-old “mathematician with some behavioural difficulties”, Mark Haddon writes, “Lots of things are mysteries. But that doesn't mean there isn't an answer to them. It's just that scientists haven't found the answer yet.” (3) I wonder what drives the research and development in our field and who gets to define which of the mysteries to tackle next?

References

1. Professor Tanaka et al., J. Chromatogr. Sci. 27(12), 721–728 (1989).

2. N.S. Wilson, M.D. Nelson, J.W. Dolan, L.R. Snyder, R.G. Wolcott, and P.W. Carr, J. Chromatogr. A 961, 171–193 (2002).

3. Mark Haddon, The Curious Incident of the Dog in the Night-Time (Jonathan Cape, London, UK, 2003).
