- The Column-03-07-2017

- Volume 13

- Issue 4

Meeting Review: Automation and Data Handling For Diverse Separation Science Applications

A one-day symposium, organized by The Chromatographic Society, discussing practical advances in automation and data processing in modern science laboratories was held at Syngenta Research Laboratories in Bracknell, Berkshire, UK, on 25 October 2016.

Chris Bevan, The Chromatographic Society, UK

This one-day meeting at Syngenta, organized by The Chromatographic Society, focused on specific aspects of automation in the laboratory and extended the debate to the generation, collection, and interpretation of the data acquired by automated analytical procedures. Experienced scientists from industries actively developing and applying automated processes described how their systems operate and how the very large quantities of data produced are handled to distil and deliver reliable knowledge and understanding. Analytical instrument companies exhibited and spoke about their contributions to this rapidly expanding area of analytical science.

The meeting took place at the prestigious Syngenta site and featured presentations by, and a large attendance from, the company’s in-house scientists. Mike Duffin, a recently retired senior technical specialist at Syngenta, welcomed the audience and set the scene for an intriguing day of insights into progress in today’s world of highly automated and data-rich analytical chemistry.

Mark Brittin, a principal research chemist in formulation and analytical development at Syngenta, presented the opening invited lecture entitled: “High Throughput Analysis of Pesticide Formulations at Jealott’s Hill”. Several years ago, Syngenta was presented with a unique analytical challenge: how to chemically analyze several hundred uniquely formulated samples to determine active ingredient (AI) content when the AI can, in principle, be any AI from the company catalogue mixed with any coformulants at any concentration. This talk described how Syngenta tackled this problem by developing a rapid, low-cost process for assessing the chemical stability of liquid formulations prepared using a high-throughput robot.

Mark discussed the factors to consider when devising automated systems, which include: 1) how to move the samples around; 2) how much of the sample to weigh out; 3) what kind of vessels and what volumes of solution to use; 4) how to make and record weighings; 5) how to track samples; 6) how to remove insolubles; 7) how to transfer solutions to the LC; and 8) how to get the right results and send them on.

The main findings were that parallel processing is essential for high speed and that, although robots are inherently slow, they do run continuously. Robustness was highlighted as a critical issue, and developers should always select the most demanding applications for testing. The importance of understanding each operation in depth and optimizing it was also stressed, because many minor changes to a process can add up to a significant difference. The automated system discussed is now in routine use at Syngenta.

Syngenta’s Mark Earll, a senior analytical data scientist, followed his colleague with a talk titled “Automated Analytical Reporting Using Open Source Software”, in which he explained how to control and cope with the massive amounts of data produced by automated systems and how to report them succinctly.

Open source tools, such as KNIME workflow software and the R language, provide an opportunity to automate the analysis and write-up of analytical experiments. Data can be taken directly from instrumentation and automatic statistical quality control checks may be applied to the data to assist the analyst in judging the quality of the results. Statistical error estimates can also be provided on final results.
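As a rough illustration of the kind of automatic check described here, the sketch below summarizes a set of replicate results and flags them against a precision limit. It is written in plain Python rather than R or KNIME, and the function name `summarize_replicates` and the 2% relative standard deviation (RSD) limit are assumptions made for this example, not details from the talk.

```python
import math
import statistics


def summarize_replicates(values, max_rsd_percent=2.0):
    """Summarize replicate results and flag them against an RSD limit.

    max_rsd_percent is a hypothetical acceptance limit chosen for illustration.
    """
    if len(values) < 2:
        raise ValueError("need at least two replicates")
    mean = statistics.mean(values)
    if mean == 0:
        raise ValueError("mean is zero, RSD is undefined")
    sd = statistics.stdev(values)          # sample standard deviation
    rsd_percent = 100.0 * sd / abs(mean)   # relative standard deviation
    sem = sd / math.sqrt(len(values))      # standard error of the mean
    return {
        "n": len(values),
        "mean": mean,
        "sd": sd,
        "rsd_percent": rsd_percent,
        "sem": sem,
        "passes_qc": rsd_percent <= max_rsd_percent,
    }


if __name__ == "__main__":
    # Made-up replicate assay values (% of label claim), for illustration only.
    print(summarize_replicates([99.0, 100.0, 101.0]))
```

The standard error of the mean serves here as a simple error estimate on the final result; a real workflow would likely apply whatever acceptance criteria the laboratory's own procedures define.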

Mark discussed the general benefits of automation, the best ways to automate, and why automation requires a radical change in thinking. He then went on to explain why the key to successful automation is consistency of input and highlighted the importance of consistent names, headers, and formats, and why “pro-formas” are an effective way to work.
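The point about consistent names and headers can be sketched as a simple gatekeeping step that rejects an input file before any analysis runs. This is a minimal Python sketch, not the KNIME workflow from the talk; the column names in `EXPECTED_HEADERS` and the function name `check_headers` are hypothetical.

```python
import csv
import io

# Hypothetical pro-forma: the exact column names every input file must use.
EXPECTED_HEADERS = ["sample_id", "analyte", "peak_area", "injection_volume_ul"]


def check_headers(csv_text, expected=EXPECTED_HEADERS):
    """Return (missing, unexpected) header names for a CSV given as text.

    The comparison is deliberately strict: "Peak Area" does not match
    "peak_area", because downstream automation relies on exact names.
    """
    found = next(csv.reader(io.StringIO(csv_text)), [])
    missing = [h for h in expected if h not in found]
    unexpected = [h for h in found if h not in expected]
    return missing, unexpected


if __name__ == "__main__":
    sample = "sample_id,analyte,Peak Area,injection_volume_ul\nS1,AI-1,12345,5\n"
    missing, unexpected = check_headers(sample)
    print("missing:", missing)
    print("unexpected:", unexpected)
```

Failing early on a mismatched header is one way to enforce a pro-forma; the alternative of silently normalizing names tends to hide the inconsistency rather than remove it.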

Mark also highlighted that computational tools can take chromatographers beyond reporting: they can be used to choose the best chiral column and to predict the retention times of analytes in high performance liquid chromatography (HPLC) methods. He added that computational tools can help to characterize solvents and columns, and to design and analyze inter-laboratory studies.

The main conclusions from this talk were that automation of analytical reporting using workflows improves consistency of results and removes tedium; that KNIME is a very flexible data analysis and manipulation platform with many advantages over copying and pasting between spreadsheets; and that the combination of R and KNIME is very useful for containerizing R scripts and making them available for re-use.

The importance of providing an audit trail of what you have done to the data when troubleshooting and validating your work was also highlighted, as well as the importance of data visualization to promote good quality control of the data.

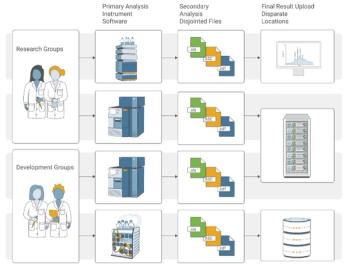

John Hollerton from GlaxoSmithKline gave a talk titled: “Experiences of Working with The Allotrope Foundation on Chromatographic Data.” The current software environment in the analytical community consists of a patchwork of incompatible software and proprietary, non-standardized file formats, which is further complicated by incomplete, inconsistent, and potentially inaccurate metadata that results from an over-reliance on manual text entry, according to Hollerton. This “pathology” leads to a wide array of downstream symptoms that affect the analytical community worldwide, he added.

The mission of The Allotrope Foundation is to address the root cause of this “disease”, instead of simply treating the symptoms through an array of helper applications, data conversion projects, and local standardization efforts of varying effectiveness. The Allotrope Foundation believes that the “cure” to the “disease” is the development of a comprehensive and innovative framework consisting of metadata dictionaries, data standards, and class libraries for managing analytical data throughout its lifecycle. To accomplish this mission, The Allotrope Foundation is working to create an open “ecosystem” through collaboration and consultation with vendors and the analytical community.

John explained the advantages of standardizing data in terms of long-term readability. What software will exist in 10, 20, 50, or 70 years’ time? Will that software be able to read all the formats created decades before? He also highlighted problems associated with data interchange, reproducibility, and integrity.

The Allotrope data format has addressed these incompatibility issues and is currently capable of handling large datasets. It is fast to interrogate, compact, and well documented; it provides a reference implementation through a software toolkit; and it uses standard names. Much progress is being made and it is envisaged that we may soon see a universally adopted systems language.

Faye Turner from AstraZeneca spoke on the theme of drug stability testing with her presentation: “Automation to Support Accelerated Stability Studies and Shelf Life Prediction”.

Faye presented an overview of the accelerated stability assessment program (ASAP), the scientific basis of which is the Arrhenius equation modified to include the effect of humidity. Her conclusions were that using ASAP studies and automation could provide increased value to projects; that linking simultaneous chemical and physical analysis allows more in-depth data evaluation and interpretation; that careful selection of accelerated storage conditions and time points allows robust shelf life predictions to be generated; and that accelerated studies allow meaningful data to be generated in a much shorter time frame.
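The review does not give the exact form of the equation used in the talk. A commonly published humidity-modified Arrhenius expression is ln k = ln A − Ea/(RT) + B·(%RH), where k is the degradation rate, T the absolute temperature, and B a humidity sensitivity factor. The Python sketch below applies that form under stated assumptions: the parameter values in `EXAMPLE_PARAMS` are invented for illustration, and shelf life is estimated by assuming the degradant grows linearly with time up to a specification limit.

```python
import math

R_GAS = 8.314462618  # molar gas constant, J/(mol*K)

# Hypothetical fitted parameters, for illustration only (not from the talk).
EXAMPLE_PARAMS = {"ln_a": 30.0, "ea_j_per_mol": 100_000.0, "b": 0.04}


def degradation_rate(temp_c, rh_percent, ln_a, ea_j_per_mol, b):
    """Rate k (% degradant per day) from ln k = ln A - Ea/(R*T) + B*RH."""
    temp_k = temp_c + 273.15
    ln_k = ln_a - ea_j_per_mol / (R_GAS * temp_k) + b * rh_percent
    return math.exp(ln_k)


def shelf_life_days(temp_c, rh_percent, spec_limit_percent, **params):
    """Days to reach the specification limit, assuming linear degradant growth."""
    return spec_limit_percent / degradation_rate(temp_c, rh_percent, **params)


if __name__ == "__main__":
    for temp_c, rh in [(25.0, 60.0), (40.0, 75.0)]:
        days = shelf_life_days(temp_c, rh, 0.5, **EXAMPLE_PARAMS)
        print(f"{temp_c:.0f} C / {rh:.0f}% RH: about {days:.0f} days to 0.5% degradant")
```

In practice the three parameters would be fitted to data from several accelerated temperature and humidity conditions, which is why the choice of those conditions and time points matters for the robustness of the prediction.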

The meeting also included talks by companies who are active in the field of automation and data handling.

Klara Valko from Bio-Mimetic Chromatography Consultancy and representing InsilicoLynx Ltd. presented a talk on how physicochemical measurements and calculations based on chromatographic properties can assist in compound library selections. Her talk was titled: “Design of Drug Discovery Compound Libraries Using In Silico and High-Throughput Bio-Mimetic HPLC Data”.

Stuart Phillips of Shimadzu presented an account of his company’s insights on chromatography data systems in “Beyond the Chromatography Data System”.

Laura McGregor from Markes International reviewed novel two-dimensional gas chromatography (GC×GC) software tools in her talk: “Chromatographic Space, The Final Frontier: A Review of Novel GC×GC Software Tools”.

Martin Radford from Mettler Toledo Ltd. took the audience back to fundamental roots with a detailed discourse on weighings: “Beneath The Surface of Accurate Analysis”.

Peter Bridge of VWR International Limited gave a lecture entitled: “Automation and Device/Software Communication”.

“Computer-Aided Column Selection, Screening, and Optimization for Real-World HPLC Method Development” was presented by Sophie Clark of Reckitt Benckiser in association with Crawford Scientific.

Peter Russell from ACD Labs presented: “An Example of Automating Chromatographic Workflows: Impurity Identification”.

Michael Lopez from Reaction Analytics Inc. described a project in which heating and stirring are added at the vial tray of an HPLC autosampler, so that experiments can be performed in the autosampler itself and their results shared and visualized.

Richard Davis from Anatune concluded the meeting with a presentation of the contributions that his company has been making to automated methods. He gave examples of these methods, in particular for the determination of phenols.

The lecture programme was very well supported by the vendor exhibition, which attracted a lot of interest from the delegates.

Chris Bevan closed the symposium, summed up the day’s events, and thanked Syngenta management for hosting the meeting. Syngenta in turn invited The Chromatographic Society to return soon for more presentations of high-quality science and technology.

The next Chromatographic Society meeting will be held at Pfizer in Sandwich, Kent, UK, on 15–17 May 2017.

Chris Bevan graduated in applied analytical chemistry under Arthur I. Vogel, specializing in nuclear and radiochemistry. This was followed by a doctorate in nuclear analytical chemistry for chemical oceanographic investigations carried out with the Ministry of Defence (MoD) and the Ministry of Agriculture, Fisheries and Food (MAFF) at The Nuclear Department, The Royal Naval College, in Greenwich, London, UK.

He started his working career in the pharmaceutical R&D industry as environmental and radiological protection officer for Hoechst pharmaceutical research laboratories. He then moved into developing novel analytical methods for drug discovery with Hoechst and later at GlaxoSmithKline (GSK).

He has been a long-time member and President of The Chromatographic Society, a scientific charity focused on promoting separation science. After retiring as head of physical chemistry at GSK, he continues to organize scientific conferences and symposia as the Society’s events coordinator.
