Chromatography in Real-World Applications: Current Trends in Environmental, Food, Forensic, and Pharmaceutical Analysis

During the past year, LCGC examined current trends in the application of liquid chromatography (LC), gas chromatography (GC), and related techniques in environmental, food, forensic, and pharmaceutical analysis. This article presents some developments made by separation scientists working in these application areas and offers insights into the current trends in each field.

Our interviews with separation science experts in specific application areas, such as environmental, food, forensics, and pharmaceutical analysis, have provided the LCGC audience with insights into what's going on in those fields. Here, we have excerpted several recent interviews that were published in our application-focused newsletters and our digital magazine, The Column.

ENVIRONMENTAL ANALYSIS

Detecting Pharmaceuticals in Water

LCGC recently spoke with Edward T. Furlong of the Methods Research and Development Program at the National Water Quality Laboratory with the U.S. Geological Survey (USGS) about his group's work on developing new methods to detect pharmaceutical contaminants in waterways.

What prompted you to develop a direct-injection high performance liquid chromatography (HPLC) tandem mass spectrometry (MS-MS) method to determine pharmaceuticals in filtered-water samples?

Furlong: For more than a decade the presence of pharmaceuticals and personal-care products (PPCPs) in the aquatic environment has been a topic of increasing interest to the public and to scientists. A simple search of the terms "antibiotics, drugs, or pharmaceuticals" in the six environmental science journals that have published the bulk of papers on this topic would have resulted in 18 to 25 papers total in any one year between 1998 and 2002; in 2002 that number increased to over 40, and in 2012 the number of publications in those six journals was over 350. We expect this publication trend to continue.

Concurrently, mass spectrometers have become more and more sensitive, so that triple-quadrupole instruments can now be routinely used to detect many small molecules, including most pharmaceuticals, at subpicogram amounts (on column). This level of performance suggested that a direct-injection HPLC–MS-MS method was possible at the nanogram-per-liter concentrations typically observed in natural aquatic environments.
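The arithmetic behind that statement can be checked with a short sketch. The 100 µL injection volume is an illustrative assumption, not a value from the interview, and the function name is hypothetical.

```python
def on_column_mass_pg(conc_ng_per_l: float, injection_volume_ul: float) -> float:
    """Mass delivered to the column, in picograms, for a direct injection."""
    # Unit conversion: 1 ng/L = 1000 pg per 1e6 uL = 0.001 pg/uL
    pg_per_ul = conc_ng_per_l * 1e-3
    return pg_per_ul * injection_volume_ul


# Assumed injection volume (hypothetical, for illustration only)
INJECTION_VOLUME_UL = 100.0

for conc in (1.0, 10.0, 100.0):
    mass = on_column_mass_pg(conc, INJECTION_VOLUME_UL)
    print(f"{conc:6.1f} ng/L x {INJECTION_VOLUME_UL:.0f} uL -> {mass:.2f} pg on column")
```

Under this assumption, a sample at a few nanograms per liter puts roughly a picogram or less on the column, which is why subpicogram sensitivity is what makes direct injection plausible without a preconcentration step.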

Finally, as ecotoxicologists and other environmental scientists have studied the effects of exposure to single pharmaceuticals and pharmaceutical mixtures on fish and other aquatic life, both in the laboratory and in the field, significant sublethal effects, often behavioral in nature, have been demonstrated at the ambient parts-per-trillion concentrations that are routinely observed.

Thus, we saw that there was a compelling need to develop a single method that could comprehensively, sensitively, and specifically identify and quantify pharmaceuticals in environmental samples. In addition to sensitivity and specificity, we hoped to include the widest range of pharmaceuticals, particularly human-use pharmaceuticals that researchers at the USGS might expect to encounter in samples from across the range of water types and sources present in the United States. Our final list of analytes for the method was the result of our own understanding of prescribing trends, our knowledge of the available scientific literature, and input from and collaboration with our USGS colleagues, particularly the USGS's Toxic Substances Hydrology Program-Emerging Contaminants Project and the National Water Quality Assessment.

What kind of environmental impact do you expect this method to have?

Furlong: The method itself we hope is environmentally friendly, since it reduces consumables and disposal costs associated with sample preparation, along with the carbon footprint associated with sample collection and transport.

More broadly, I think that having this method available to our USGS and other federal, state, and university collaborators will allow scientists to more comprehensively "map" the distributions, compositions, and concentrations of pharmaceuticals in US water resources. This method will become incorporated into national-scale monitoring, such as that already initiated by the USGS Toxics Program, and is now being incorporated into the USGS's National Water Quality Assessment Program and into more regionally or locally focused studies conducted by the USGS's state-based Water Science Centers.

The method also will have a major impact on the quality and depth of the applied research being conducted to elucidate the sources, fates, and ultimate effects of pharmaceuticals and other emerging contaminants, particularly that undertaken by the USGS Toxics Emerging Contaminants Project and its many collaborators. That project will apply the method at some key long-term research sites and in specific projects aimed at providing a more focused, hydrologically grounded understanding of the effects of these compounds on ecosystem and human health.

One such project where that has already occurred is a joint study conducted by USGS and the US EPA in which we have sampled source and treated waters for 25 municipal drinking water treatment plants (DWTPs) across the United States for pharmaceuticals and other compounds that the US EPA classifies as contaminants of emerging concern (CECs). This study, which is being prepared for publication, will provide insight into the compositions and concentrations of pharmaceuticals and many other CECs entering DWTPs and their subsequent removal or reduction during treatment.

The Long-Term Impact of Oil Spills

Chris Reddy from the Woods Hole Oceanographic Institution spoke to LCGC about the role of chromatography in the ongoing environmental analysis of the Deepwater Horizon oil spill and how comprehensive GC×GC works in practice.

Tell us about your group's involvement in the work at the Deepwater Horizon disaster site. What were the objectives?

Reddy: In April of 2010, the Deepwater Horizon (DWH) drilling rig exploded and released approximately 200 million gallons of crude oil along with large quantities of methane, ethane, and propane.

It was an ongoing spill for 87 days, with oil residues that we continued to find along the Gulf beaches as recently as November 2013. We have studied, and continue to study, a wide range of research questions, from determining the flow rate to analyzing how nature breaks down or "weathers" the oil and fingerprinting it to confirm that oiled samples we have found came from the DWH disaster.

Our field work has included collecting samples with a robot right where the oil was coming out of the pipe, which you may have seen on TV at the time. We have walked many miles of the Gulf of Mexico coastline, even 300 or 400 miles away from the explosion. So we have gone from analyzing oil samples a foot away from the source of the spill to hundreds of miles away. I expect to be working at the site for the next 10 years, alongside some other oil spills and projects.

One of the main techniques you used was comprehensive two-dimensional gas chromatography (GC×GC). Why?

Reddy: My team has extensive experience in tackling some interesting research questions with GC×GC by studying numerous oil spills that have occurred as well as natural oil seeps. What makes GC×GC so powerful is that it has the capacity to resolve and detect many more compounds than with traditional analytical techniques, such as gas chromatography with mass spectrometry (GC–MS).

Now, I want to be very clear here. A lot of people hear me say GC×GC can do more than GC–MS, and they immediately assume that GC×GC is a replacement for GC–MS, but it is not. It is just another tool in the laboratory that allows us to address some specific questions that a regular benchtop GC–MS cannot.

On the other hand, for polycyclic aromatic hydrocarbons (PAHs), I don't think GC×GC can do any better than what a benchtop GC–MS can do and the GC–MS software is much, much more user-friendly. And so, in my lab, we don't quantify PAHs by GC×GC. There's no point. It's easier and faster to do so with a GC–MS.

One of the main factors that makes GC×GC very powerful, and that I think a lot of people miss, is that when you look at a chromatogram in two-dimensional GC space you are not only able to identify and measure compounds (many, many more compounds than with traditional techniques), but you can also convert retention times in the first and second dimension to vapor pressure and water solubility.

If you're interested in the fate of oil there are two key questions you want to know: What is the vapor pressure of a compound, and what is the water solubility of the compound?

Now if we use some newly developed algorithms, we can allocate how much of a compound evaporated versus how much dissolved in water. That is the real major leap in my mind: GC×GC allows us to discover where the compounds are going. It's beyond just making your Excel spreadsheet bigger and identifying many more compounds. It allows us to say where is this compound going, or where has it gone.

FOOD ANALYSIS

Protecting the Consumer

The Column spoke to Stewart Reynolds, Senior Science Specialist at the Food and Environment Research Agency (FERA) and Head of the UK National Reference Laboratory for Pesticide Residues, about current challenges in food analysis.

What are the biggest challenges facing food laboratories at the moment?

Reynolds: One of the current challenges is how to analyze a larger number of analytes, such as pesticides or veterinary drugs, with shorter turnaround times, while also maintaining the analytical quality at an acceptable cost. In an attempt to achieve these improvements, the use of MS with non-targeted acquisition is being explored as complementary, or even as an alternative, to the commonly used targeted MS–MS techniques. These approaches will be subject to different performance criteria and will require expert training for successful implementation. In response to recent food scares, the long-term expectation is to screen a greater number of contaminants and characteristics of any food to prevent contaminated or noncompliant food from entering the food chain. As a consequence, future training programs will have to be updated and revised to reflect these developments.

Are there many regulations in the US and Europe that will have a direct impact on food analysis?

Reynolds: All regulations relating to contaminants in food have an impact on how we undertake the analysis of foods. Maximum levels or limits are set by many countries such as the USA, Russia, and Japan to control the movement of foods across international borders. Countries in Europe used to have their own particular regulations, but these have largely been harmonized as EU regulations. For example, all maximum residue levels (MRLs) for pesticide residues in food were harmonized for all EU member states under Regulation 396/2005, which came into force in September 2007. Where there was no acceptable registration information for a particular pesticide or commodity combination, a default value of 0.01 mg/kg was set. This meant that laboratories had to ensure that the methods they used were capable of routinely achieving this default level for all the pesticide or commodity combinations that they were asked to analyze. It also led to the adoption of harmonized, cost-effective method validation and quality control guidelines in the EU Document No. SANCO/12495/2011.
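The default-level rule described here amounts to a lookup with a fallback, which can be sketched as follows. The pesticide and commodity names and their MRL values are hypothetical placeholders, and the criterion "limit of quantitation at or below the applicable MRL" is a simplifying assumption rather than the wording of the SANCO guidelines.

```python
DEFAULT_MRL_MG_PER_KG = 0.01  # default value cited in the text

# Hypothetical MRL table: (pesticide, commodity) -> MRL in mg/kg
EXAMPLE_MRLS = {
    ("pesticide_a", "apple"): 0.5,
    ("pesticide_b", "wheat"): 0.05,
}


def applicable_mrl(pesticide, commodity, mrls=EXAMPLE_MRLS):
    """Return the specific MRL if one is listed, else the default value."""
    return mrls.get((pesticide, commodity), DEFAULT_MRL_MG_PER_KG)


def method_is_fit(loq_mg_per_kg, pesticide, commodity, mrls=EXAMPLE_MRLS):
    """Assumed criterion: the method's LOQ must not exceed the applicable MRL."""
    return loq_mg_per_kg <= applicable_mrl(pesticide, commodity, mrls)


# A method with an LOQ of 0.02 mg/kg covers a listed combination
# but not one that falls back to the default level.
print(method_is_fit(0.02, "pesticide_a", "apple"))  # listed MRL of 0.5 mg/kg
print(method_is_fit(0.02, "pesticide_a", "pear"))   # default of 0.01 mg/kg
```

The fallback is what drives the analytical burden: every unlisted combination inherits the strictest level, so a multiresidue method has to reach 0.01 mg/kg across its whole scope.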

What is the most important trend in food analysis right now?

Reynolds: Within European laboratories there have been two major advances in recent years. The first has been the continual increase in the scope of multiresidue methods (both in terms of numbers of analytes and commodities). This means that more analyte or commodity combinations can be targeted in the same multiresidue method (MRM), and this is good in terms of food safety and reassuring consumers.

The second trend has been the improvement in the quality of data from laboratories throughout Europe as has been demonstrated by the continuous improvement in the laboratory performance in proficiency tests as organized annually by the EU reference laboratories. This has led to a greater mutual recognition of results and more effective trade between different member states.

SPME in Food Analysis

Nicholas H. Snow of Seton Hall University spoke to The Column about the role of solid-phase microextraction (SPME) in food analysis.

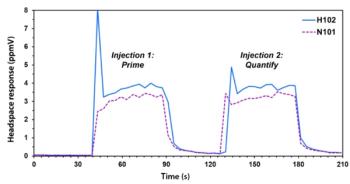

Is it difficult to apply SPME to food analysis?

Snow: The chief benefit and the main challenge with SPME is simplicity. SPME can be applied to trace analysis from a food or beverage matrix as long as the sample can be prepared (if necessary) into a form that allows the fiber to either be immersed or placed into the headspace. Then the challenges become the same as for any equilibrium-based (non-exhaustive) extraction method, including representative sampling: Is equilibrium achieved during the extraction, are the sample agitation and temperature programming reproducible, is an internal standard required for quantitation, how is the gas chromatographic injection optimized, and so forth. The chief benefits today, over other microextraction techniques, are 20 years of literature, including both theory and applications and systems.

FORENSICS

LC–MS Methods for Identifying Drugs of Abuse in Oral Fluids

Forensic toxicology screening methods to test for the presence of pharmaceuticals, drugs of abuse, and their metabolites have most commonly been carried out on urine or blood samples using immunoassays or gas chromatography coupled with mass spectrometry (GC–MS). However, these methods have various limitations that include risks of false positives and false negatives, lack of specificity, and limited throughput. As a result, in recent years, researchers have been developing LC–MS-MS methods for drug screening. LC–MS-MS has a number of advantages over GC–MS for drug testing, in particular its suitability for analyzing a broad spectrum of compounds, including those that are difficult to analyze by GC–MS (for example, amines and semi-volatile compounds), without the need for lengthy sample preparation steps. And by introducing tandem mass spectrometry, the selectivity of the method is greatly enhanced, which also improves limits of detection and quantitation.

In recent years, there also has been growing interest in using oral fluid samples instead of urine for forensic toxicology. There are many advantages to collecting oral fluid samples compared with urine, including convenience and immediacy of sample collection, minimal chance of specimen adulteration, the lack of requirement for gender-specific staff for collection and observation, and reduced sample collection overheads. Oral fluid samples can also be easier to ship and store than urine, although the amount of specimen provided is usually much lower in volume. The quantities of compounds detected in oral samples typically provide a closer correlation to the drug dose ingested, and researchers have reported much higher prevalence of the parent drug in oral samples compared with urine.

Methods have recently been successfully developed for fast multiplex screening in oral fluid, for example screening 32 drugs including amphetamines, barbiturates, opiates, benzodiazepines, methadone, and cocaine in opioid-dependent patients; and for detecting opiates, amphetamines, MDMA, PCP, and barbiturates simultaneously. Vindenes et al. compared oral fluid and urine sample analyses taken from 45 patients being monitored for drug abuse and found similar results in both sample types for most drugs. The main differences were that amphetamines and heroin were more commonly detected in oral fluids, and cannabis and benzodiazepines were more commonly detected in urine samples. These findings are in line with previous studies and would be expected due to the basic versus acidic natures of these drug classes, respectively.

For the full article and references, please see A.M. Taylor, The Column 10(5), 11–14 (2014).

PHARMACEUTICAL ANALYSIS

LCGC spoke with a panel of experts about current and emerging trends in pharmaceutical analysis. Participants included Ann Van Schepdael, a professor at the KU Leuven in Belgium, Tom van Wijk, a senior scientist at Abbott Healthcare BV in Weesp, the Netherlands, and Harm Niederlander, a project leader at Synthon Biopharmaceuticals in Nijmegen, the Netherlands.

Has there been any significant adoption of LC–MS for routine pharmaceutical analyses?

Van Schepdael: Many monographs still use UV as a detection technique and LC–UV for assays and related substances. LC–UV equipment is affordable and robust, and the available column chemistries allow the analyst to play with the selectivity of the system. LC–MS may also be in use in the industry on a routine basis, but it appears less in pharmacopoeial texts. It seems that LC–MS is very important for the preparation of regulatory files for a new chemical entity (NCE): It is significant for the structural characterization of unknown impurities on the one hand, and for quantitation of the drug and its metabolites in biological samples on the other. This is because of its better sensitivity and very good selectivity. The study of a drug's pharmacokinetics (metabolite characterization, quantitation of excretion, kinetics of metabolism, and drug interactions) is quite well supported by LC–MS.

What are current areas of research in the analysis of traditional pharmaceuticals?

Van Wijk: In general, activities that support impurity profiling offer opportunities for improvement; for example, the improvement of method development strategies, orthogonality of methods and techniques, column selection, prediction of degradation pathways, and interaction with excipients. Analysis of polar components is of special interest as these show little retention in the classic LC–UV methods on C18 columns; investigation into the use of HILIC separation methods has grown in the last few years as a result. Control of potential genotoxic impurities is also an important area for research. In contrast to impurity profiling of regular impurities, which focuses on the detection of any unknowns above a specific threshold, the control of potential genotoxic impurities today fully relies on assessments. Although the impurity threshold for genotoxic impurities is much lower, technical capabilities allow screening for toxic impurities based on their intrinsic reactivity, in addition to the assessment. Although not required, these screening methods have already been developed for alkylating agents, and a similar approach would allow other classes of toxic compounds to be screened for. With new EMA, USP, and ICH guidance on the horizon for heavy metals, there is a strong increase in work performed in this field, mainly using inductively coupled plasma–mass spectrometry (ICP–MS).

How are methods used for characterization and quality control of biopharmaceuticals different from those for small-molecule pharmaceuticals?

Niederlander: Typically, the "purity" of biopharmaceuticals extends beyond the level of identifying or quantifying components that are not the intended active ingredient. Biopharmaceuticals may consist of mixtures of isoforms and slightly (differently) modified proteins that can all represent (some) activity. Therefore, profiling the composition of these mixtures is an important part of biopharmaceutical analysis in characterization and quality control. Parameters evaluated often include folding and association using spectroscopic techniques (circular dichroism, fluorescence); oxidation, deamidation, and N- and C-terminal heterogeneity using tryptic peptide mapping; charge heterogeneity using cation-exchange chromatography or capillary isoelectric focusing (CIEF); and glycosylation using digestion or deglycosylation with reversed-phase LC, anion-exchange chromatography, or matrix-assisted laser desorption–ionization time-of-flight (MALDI-TOF), and receptor assays.

Following on from the fact that drug activity results from the combined effect of many individual contributions, at least one (overall) activity assay (often cell-based) is always included. Furthermore, the diversity of product- and process-related impurities is generally much wider for biopharmaceuticals than for small-molecule pharmaceuticals. As a result, the number of methods needed to cover all of these is generally much larger too. These include: Product-related impurities: Soluble aggregation is tested using size-exclusion chromatography (SEC); and cleavage, decomposition, or proteolysis is tested using SEC, SDS-PAGE, or CE. Process-related impurities: Host cell proteins are tested using immunological techniques; DNA impurities are analyzed using quantitative real-time polymerase chain reaction (qPCR); and individual, generally xenobiotic, process additives are analyzed using immunological, chromatographic, or spectroscopic techniques. Other: Bioburden or virus-related testing is carried out using compendial techniques; and general parameters are also tested using compendial techniques. Please note that my focus here has primarily been on antibody biopharmaceuticals.

Trends in Chiral Chromatography

In a recent interview, Professor Debby Mangelings of the Vrije Universiteit, Belgium, spoke to LCGC about chiral chromatography in pharmaceutical analysis.

What are the limitations of methods for chiral separation of pharmaceuticals?

Mangelings: A major problem with chiral separations is that enantioselectivity cannot be predicted. Several research groups have tried, but with limited success. This also implies that the development of a chiral separation method is often a trial-and-error approach. Therefore, our research focused on the development of generic chiral separation strategies, which help analysts in chiral method development. In principle, these strategies should be applicable to any compound, independent of its structure. Our separation strategies consist of a screening step, where a limited number of experiments are performed on chiral stationary phases with broad and complementary enantioselectivity. The second step of such a strategy is to apply an optimization step when a (partial) separation is obtained, or to make a second attempt with new conditions when it is not. Screenings are often also defined "in-house" in pharmaceutical companies.
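The two-step logic of such a strategy can be sketched as a small decision function. This is only an illustration of the flow described in the answer, not the group's actual procedure: the use of resolution (Rs) as the screening readout, the rule that any Rs above zero counts as a (partial) separation, and the condition labels are all assumptions.

```python
from typing import Dict


def next_step(screening_rs: Dict[str, float]) -> str:
    """Choose the follow-up action from screening results.

    screening_rs maps a (hypothetical) screening condition label to the
    resolution Rs observed between the two enantiomers under it.
    """
    if not screening_rs:
        raise ValueError("no screening results supplied")
    # Take the most promising condition from the limited screening set
    best_condition = max(screening_rs, key=screening_rs.get)
    best_rs = screening_rs[best_condition]
    if best_rs > 0:
        # A (partial) separation was obtained: move on to optimization
        return f"optimize {best_condition}"
    # No separation under any screened condition: try new conditions
    return "rescreen with new conditions"


# Hypothetical screening outcome on three conditions
results = {"CSP-1/phase A": 0.0, "CSP-2/phase A": 0.8, "CSP-3/phase B": 0.3}
print(next_step(results))
```

Keeping the screening set small and the decision rule explicit is what makes the approach generic: the same function applies to any compound, and only the table of screened conditions changes.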
